What Makes the join() Method Blazingly Faster Than Iteration?

A reminder to always prefer specific methods over a generalized approach.

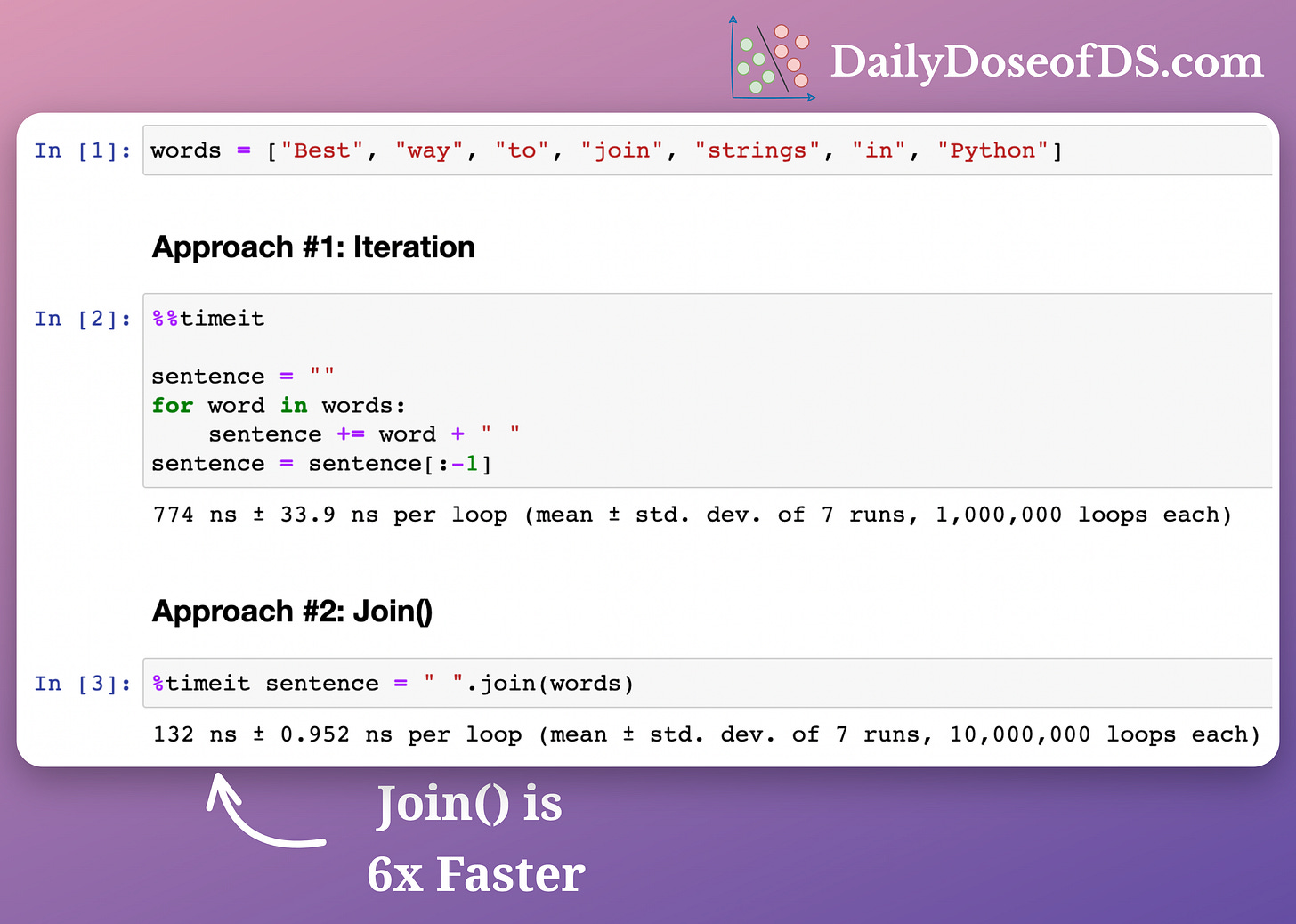

There are two popular ways to concatenate multiple strings:

1. Iterating over the strings and appending each one to a single string.
2. Using Python's built-in join() method.

But the second approach is significantly faster than the first.
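Here is a minimal sketch of how such a comparison could be set up. The word list, the helper names `concat_with_loop` and `concat_with_join`, and the repeat count are all hypothetical choices for illustration, not the original benchmark. Timings depend on your machine and Python version, so no numbers are shown.

```python
import timeit

# Hypothetical test data: 100,000 short words (not the article's original data).
words = ["word"] * 100_000


def concat_with_loop(items):
    """Approach 1: iterate and append each string, with a space separator."""
    result = ""
    for i, item in enumerate(items):
        if i > 0:
            result += " "  # separator between consecutive strings
        result += item
    return result


def concat_with_join(items):
    """Approach 2: let str.join() build the result."""
    return " ".join(items)


if __name__ == "__main__":
    # Run each approach 10 times on the same data and report the total time.
    loop_time = timeit.timeit(lambda: concat_with_loop(words), number=10)
    join_time = timeit.timeit(lambda: concat_with_join(words), number=10)
    print(f"loop: {loop_time:.4f}s  join: {join_time:.4f}s")
```

Note that CPython can sometimes resize a string in place for `s += t`, which narrows the gap on that interpreter. PEP 8 explicitly advises against relying on this fragile optimization and recommends the `"".join()` form instead.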

Can you answer why?

No, the answer is not vectorization!

Continue reading to learn more.

When concatenating using iteration, Python naively executes each instruction as it comes across it.

Thus, it does not know beforehand:

- the number of strings it will concatenate
- the number of white spaces (separators) it will need

Simply put, iteration leaves no room for this kind of optimization.

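As a result, during every iteration, Python requests a fresh memory allocation each time it appends:

- the string at the current iteration
- the white space added as a separator

Because Python strings are immutable, each of these appends builds a new string object and, in general, copies everything accumulated so far into it. This leads to repeated memory allocation calls.

Under this model, the number of allocation calls is two times the size of the list.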
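To make the 2x count concrete, here is a minimal sketch that follows the model described above: each iteration performs two string-building steps (word, then separator). The function name `concat_counting` is hypothetical, and the counter models "allocation calls" at the Python level; it does not measure the exact number of `malloc()` calls the interpreter makes. The first lines also show why each append needs a new object: strings are immutable, so `+=` cannot change a string that another name still refers to.

```python
# Immutability: += produces a new object; the old one is left untouched.
original = "abc"
alias = original
original += "d"
# alias still refers to the unchanged "abc".


def concat_counting(items, sep=" "):
    """Concatenate items in a loop and count the string-building steps.

    Each step creates a new str in general, because strings are immutable.
    Like the article's model, this appends a separator after every item,
    so the result ends with a trailing separator.
    """
    result = ""
    steps = 0
    for item in items:
        result = result + item  # new string: everything so far + item
        steps += 1
        result = result + sep   # new string: everything so far + separator
        steps += 1
    return result, steps


text, steps = concat_counting(["a", "b", "c"])
print(repr(text), steps)
```

Since each step copies the accumulated string, the total amount of copying can grow roughly quadratically with the number of items in the worst case, on top of the repeated allocation requests.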

This is not the case when we use join(), because there Python knows beforehand:

- the number of strings it will concatenate
- the number of white spaces (separators) it will need

This lets join() compute the total length of the result, allocate all the memory it needs in a single call, and only then copy the strings and separators into place.
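The "measure first, allocate once, then copy" idea can be sketched in pure Python. This is a simplified model, not CPython's actual C implementation: the hypothetical `join_two_pass` works on `bytes` so the length arithmetic stays explicit, and it returns the preallocated `bytearray` directly to avoid an extra copy. (CPython's `str.join` also materializes a generator argument into a sequence first, which is how it can measure every piece up front.)

```python
def join_two_pass(sep: bytes, parts: list[bytes]) -> bytearray:
    """Sketch of a join: size everything first, allocate once, then copy."""
    if not parts:
        return bytearray()
    # Pass 1: compute the exact size of the result before copying anything.
    total = sum(len(p) for p in parts) + len(sep) * (len(parts) - 1)
    buf = bytearray(total)  # the single up-front allocation for the result
    # Pass 2: copy each part (and the separator before it) into its final slot.
    # Equal-length slice assignment writes in place without resizing buf.
    pos = 0
    for i, part in enumerate(parts):
        if i > 0:
            buf[pos:pos + len(sep)] = sep
            pos += len(sep)
        buf[pos:pos + len(part)] = part
        pos += len(part)
    return buf


print(join_two_pass(b" ", [b"join", b"is", b"fast"]))
```

The loop in the earlier approach grows the result one piece at a time; here the size is fixed before the first byte is copied, so the buffer is never reallocated.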

To summarize:

- With iteration, the number of memory allocation calls is 2x the list's size.
- With join(), the result string needs just one memory allocation call.

This explains the significant difference in their run-time we noticed earlier.

This post is also a reminder to ALWAYS prefer specific methods over a generalized approach.

These subtle sources of optimization can lead to profound improvements in run-time and memory utilization of your code.

What do you think?

If you want to learn more about run-time and memory optimization, I have published 50+ issues on these topics so far.

👉 Over to you: What other ways do you commonly use to optimize native Python code?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights.


Thanks for reading!

Latest full articles

If you’re not a full subscriber, here’s what you missed:

DBSCAN++: The Faster and Scalable Alternative to DBSCAN Clustering

Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning

You Cannot Build Large Data Projects Until You Learn Data Version Control!

Why Bagging is So Ridiculously Effective At Variance Reduction?

Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Deploy, Version Control, and Manage ML Models Right From Your Jupyter Notebook with Modelbit

Gaussian Mixture Models (GMMs): The Flexible Twin of KMeans.

To receive all full articles and support the Daily Dose of Data Science, consider subscribing:

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

👉 If you love reading this newsletter, feel free to share it with friends!
