Why Are We Typically Advised To Set Seeds for Random Generators?

A demonstration to show what happens if you don't.

From time to time, we are advised to set seeds for random number generators before training an ML model. Here's why.

The weights of a model are initialized randomly. Without a fixed seed, each run of the same experiment therefore starts from a different set of weights and can end with different results. This hinders the reproducibility of your model.
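To make this concrete, here is a minimal sketch that uses only Python's standard `random` module. The `init_weights` helper is hypothetical and only stands in for whatever initializer your framework uses; it is not the code behind the figure in this post.

```python
import random

def init_weights(n_inputs, n_outputs, seed=None):
    """Hypothetical stand-in for a layer's random weight initialization."""
    # With seed=None the generator is seeded from an unpredictable source,
    # so every call produces a different weight matrix.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(n_outputs)]
            for _ in range(n_inputs)]

# Two "runs" without a seed: the starting weights differ.
unseeded_a = init_weights(3, 2)
unseeded_b = init_weights(3, 2)

# Two "runs" with the same seed: the starting weights are identical.
seeded_a = init_weights(3, 2, seed=42)
seeded_b = init_weights(3, 2, seed=42)

print("unseeded runs match:", unseeded_a == unseeded_b)
print("seeded runs match:  ", seeded_a == seeded_b)
```

The unseeded comparison should essentially always print `False`, while the seeded one prints `True`.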

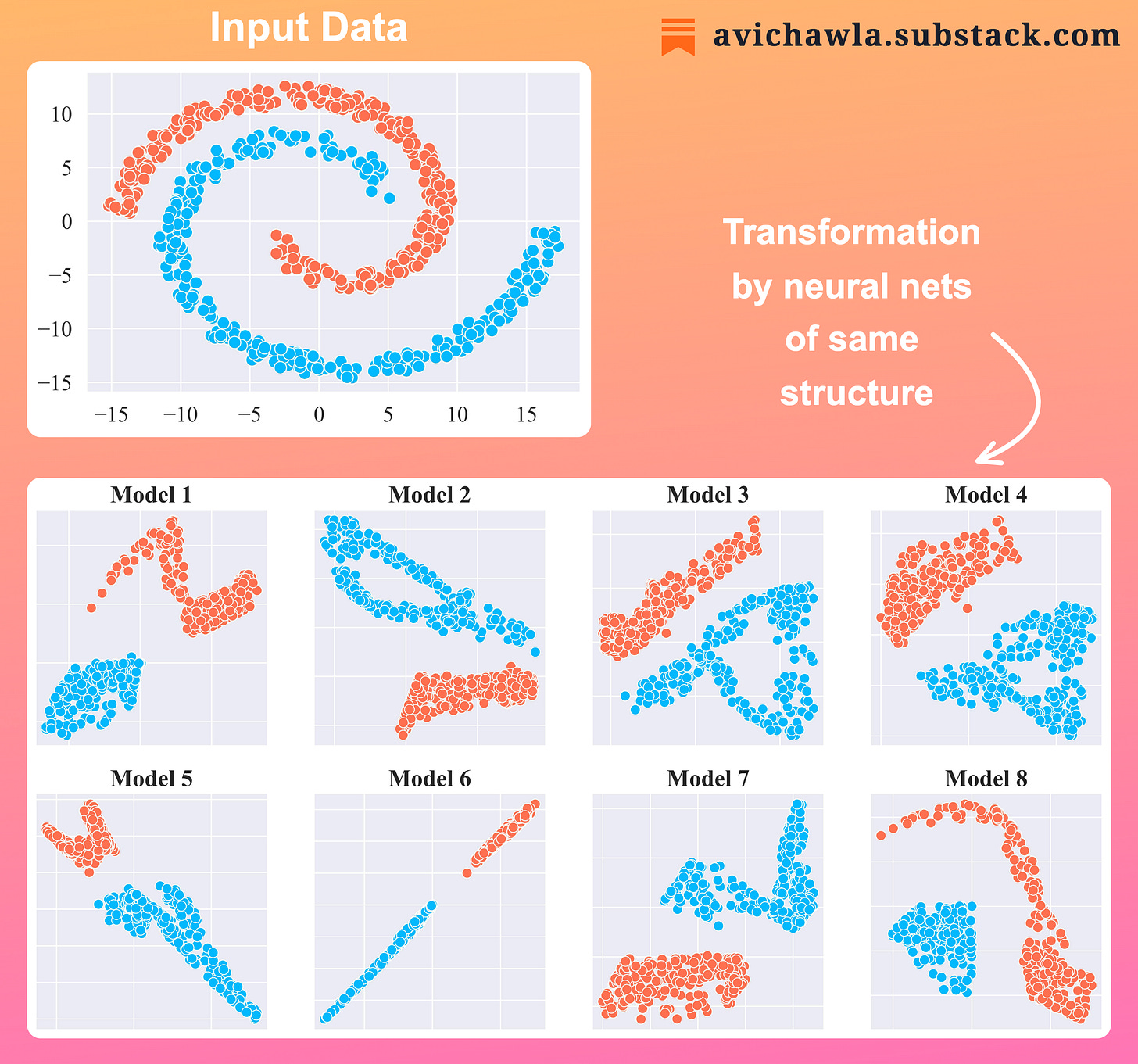

As shown above, neural networks of the same structure transform the same input data in many different ways, because each one starts from different random weights.

Thus, before training any model, always ensure that you set seeds so that your experiment is reproducible later.
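One way to do this is to call a small helper at the top of your training script. The sketch below is an assumption about a typical setup, not a complete recipe: `set_seed` is a hypothetical name, and it seeds NumPy and PyTorch only if they happen to be installed. Some sources of randomness (certain GPU operations, for example) may need additional framework-specific settings.

```python
import random

def set_seed(seed):
    """Hypothetical helper: seed the random generators this script may use."""
    # Python's built-in generator.
    random.seed(seed)

    # NumPy's global generator, if NumPy is available.
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass

    # PyTorch's generators, if PyTorch is available.
    try:
        import torch
        torch.manual_seed(seed)
    except ImportError:
        pass

# Seeding, drawing, re-seeding, and drawing again gives the same numbers.
set_seed(0)
first_run = [random.random() for _ in range(3)]

set_seed(0)
second_run = [random.random() for _ in range(3)]

print("runs match:", first_run == second_run)
```

Call `set_seed` once, before the model is created, so that weight initialization is covered as well as anything random that happens later, such as data shuffling.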

Good news!

Today, this publication has crossed 10,000 subscribers. I’m extremely grateful to all of you who take the time to read my posts daily.

Today, I wrote a post on LinkedIn where I mentioned many details about this whole journey and how this publication came about.

👉 You can check that out here: Post Link.

Thanks again for making this journey worth it ❤️.

Find the code for my tips here: GitHub.

I like to explore, experiment and write about data science concepts and tools. You can read my articles on Medium. Also, you can connect with me on LinkedIn.