Why You Should Avoid Appending Rows To A DataFrame

...and what happens if you do.

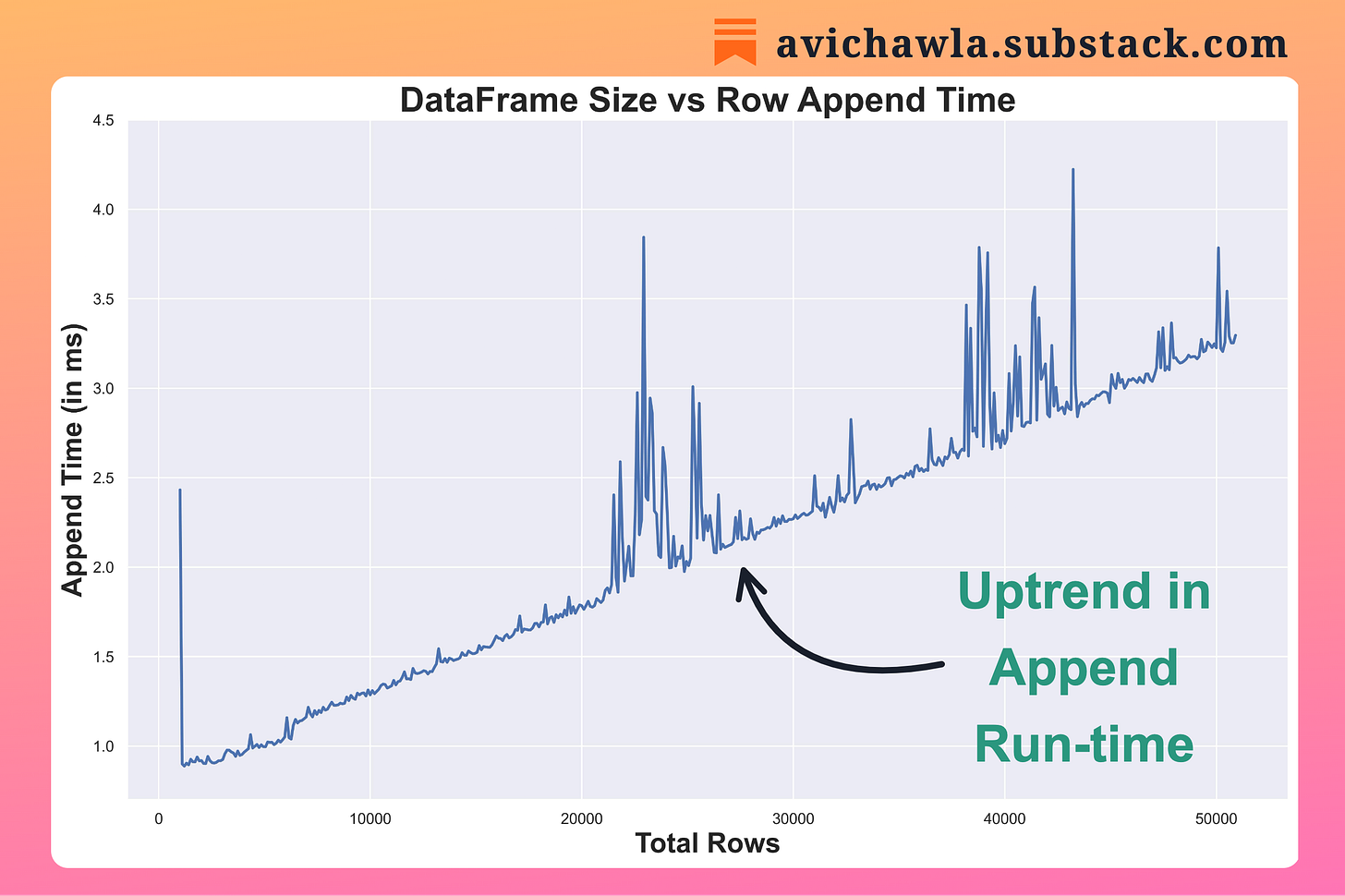

As we append more and more rows to a Pandas DataFrame, the append run-time keeps increasing. Here's why.

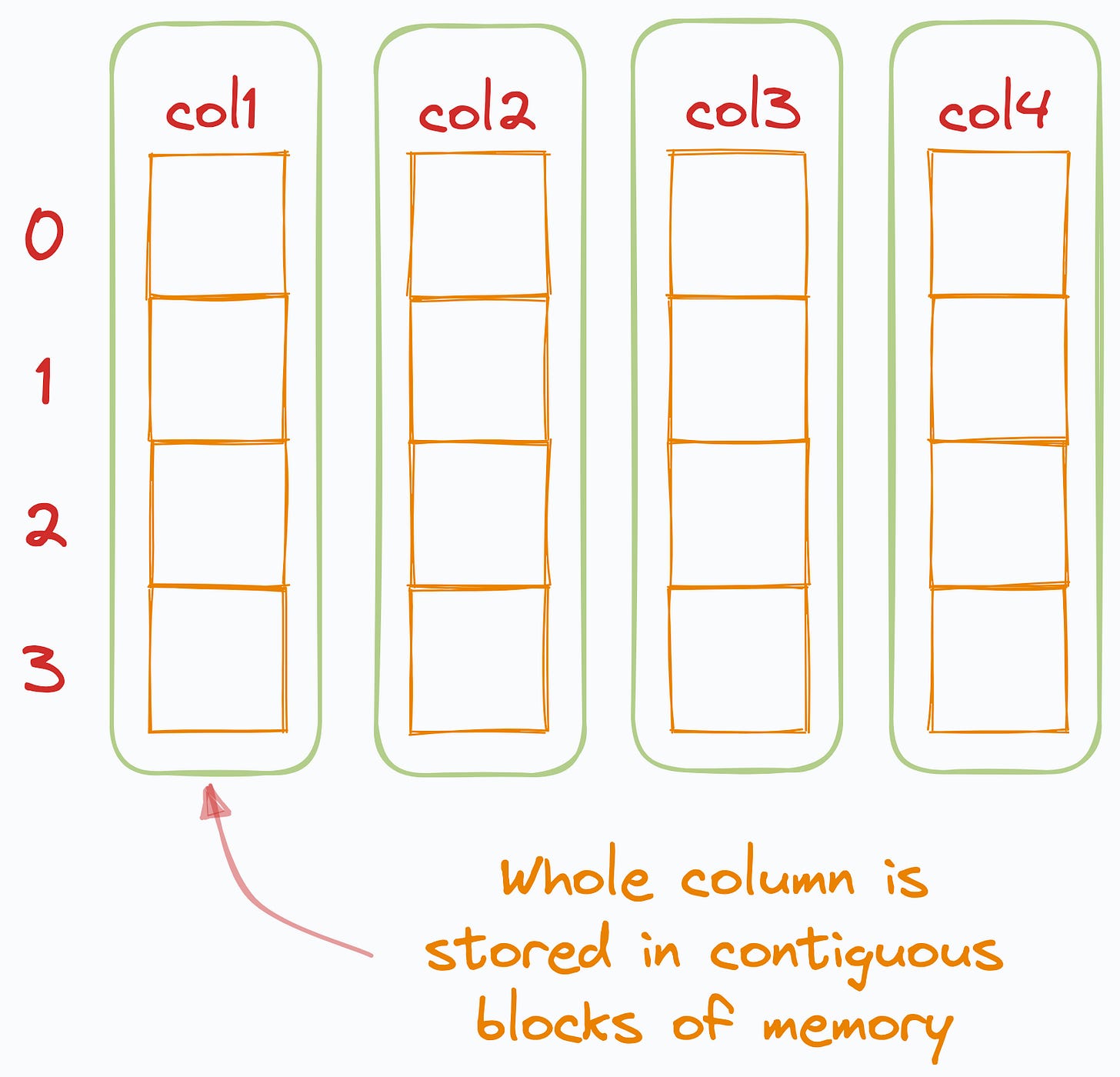

A DataFrame is a column-major data structure. Thus, consecutive elements in a column are stored next to each other in memory.
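To make "column-major" concrete, here is a minimal sketch that uses a plain NumPy array as a stand-in. It is only an analogy: the internal storage of a real DataFrame is more involved, and the names `table` and `col_major` are made up for this illustration.

```python
import numpy as np

# A small table with 3 rows and 2 columns (illustrative data).
table = np.arange(6, dtype=np.int64).reshape(3, 2)

# Store it in column-major (Fortran) order.
col_major = np.asfortranarray(table)

# Strides are (bytes to the next row, bytes to the next column).
# Stepping down a column moves 8 bytes (one int64), so the values
# of a column sit right next to each other in memory.
row_step, col_step = col_major.strides

# Order in which the values actually sit in memory:
# all of column 0 first, then all of column 1.
memory_order = col_major.ravel(order="K").tolist()

print(col_major.strides)  # (8, 24)
print(memory_order)       # [0, 2, 4, 1, 3, 5]
```

Notice that adding a fourth row would need one extra slot at the end of every column, which is exactly where the trouble starts.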

As new rows are added, Pandas always wants to preserve its column-major form.

But while adding new rows, there may not be enough space to accommodate them while also preserving the column-major structure.

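In such a case, existing data is moved to a new memory location, where Pandas finds a contiguous block of memory. In practice, this happens on essentially every append: `pd.concat` (and `DataFrame.append`, which was removed in pandas 2.0) returns a new DataFrame instead of growing the old one in place.

Thus, as the DataFrame grows, each append has more existing data to copy, and the run time keeps increasing.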

The spikes in this graph may occur because a column occupying more memory was moved to a new location at that point, which takes longer to reallocate, or because many columns were moved at once.
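If you want to reproduce the trend yourself, a rough sketch like the one below may help. It assumes `pd.concat` as the append operation, and the helper name `time_row_appends` and the column names are hypothetical. Exact timings will vary by machine and pandas version.

```python
import time

import pandas as pd


def time_row_appends(n_rows, n_cols=5):
    """Grow a DataFrame one row at a time and record each append's duration."""
    columns = [f"col_{i}" for i in range(n_cols)]
    # Start from a single row so every later step is a genuine append.
    df = pd.DataFrame([[0.0] * n_cols], columns=columns)
    timings = []
    for i in range(1, n_rows):
        new_row = pd.DataFrame([[float(i)] * n_cols], columns=columns)
        start = time.perf_counter()
        # concat builds a brand-new DataFrame, copying the existing rows.
        df = pd.concat([df, new_row], ignore_index=True)
        timings.append(time.perf_counter() - start)
    return df, timings


if __name__ == "__main__":
    df, timings = time_row_appends(2000)
    early = sum(timings[:200]) / 200
    late = sum(timings[-200:]) / 200
    print(f"mean time of first 200 appends: {early:.6f}s")
    print(f"mean time of last 200 appends:  {late:.6f}s")
```

Plotting `timings` against the row count should give a curve similar in spirit to the graph above, spikes included.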

So what can we do to mitigate this?

The increase in run-time arises mainly because Pandas is trying to maintain its column-major structure.

Thus, if you intend to grow a DataFrame row-wise this frequently, it is better to first convert it to another data structure, such as a Python list of rows or a dictionary. A NumPy array helps only if you preallocate it, since `np.append` also copies the whole array.

Carry out the append operations there, and when you are done, convert it back to a DataFrame.
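Here is one way this could look, as a minimal sketch. It assumes the rows arrive as dictionaries keyed by column name, and the helper name `append_rows_via_records` is hypothetical. Keep in mind that a round trip through Python objects may not preserve special dtypes such as categoricals.

```python
import pandas as pd


def append_rows_via_records(df, new_rows):
    """Append many rows by going through a list of dicts, then rebuild once."""
    # Convert the DataFrame to a list of {column: value} dicts.
    records = df.to_dict("records")
    for row in new_rows:
        # Appending to a Python list does not copy the existing rows.
        records.append(row)
    # Build the DataFrame a single time at the end.
    return pd.DataFrame(records, columns=df.columns)


if __name__ == "__main__":
    df = pd.DataFrame({"id": [0, 1], "value": [0.0, 0.5]})
    new_rows = [{"id": i, "value": i * 0.5} for i in range(2, 10)]
    result = append_rows_via_records(df, new_rows)
    print(result.shape)
```

The expensive step, building the column-major structure, now happens once instead of once per row.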

P.S. Adding new columns is generally not a problem. This is because a new column gets its own block of memory, so the operation does not require moving the existing columns.

What do you think could be some other ways to mitigate this? Let me know :)
