6 Elegant Jupyter Hacks

Jupyter is cool. Let's make it super cool.

Despite the widespread usage of Jupyter notebooks, many users do not use them to their full potential.

Most stick to the default interface and capabilities, which, in my opinion, can be improved considerably to provide a richer experience.

Today, let me share some of the coolest things I have learned about Jupyter after using it for so many years.

Let’s begin!

#1) Retrieve a cell’s output in Jupyter

Jupyter users often forget to assign a cell’s result to a variable.

So they have to (unwillingly) rerun the cell and assign its result to a variable.

But very few know that IPython provides a dictionary, Out, which caches the outputs of executed cells.

Just use the cell’s execution number (the n in In [n]) as the dictionary’s key to get the corresponding output. Only cells that actually displayed a result have an entry.
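Here is a minimal sketch of how the cache can be inspected. The helper name recent_outputs and the stand-in session dictionary are made up for illustration; only Out itself comes from IPython.

```python
def recent_outputs(namespace, n=3):
    """Return the n most recent cached outputs as {execution_count: value}.

    `namespace` is expected to hold IPython's `Out` dict; in a notebook,
    pass globals().
    """
    cache = namespace.get("Out", {})
    return {count: cache[count] for count in sorted(cache)[-n:]}


# Stand-in for a notebook session so the sketch also runs outside IPython.
# Cell 2 is absent because it ended in an assignment and displayed nothing.
fake_session = {"Out": {1: 285, 3: [1, 2, 3], 4: "done"}}

print(recent_outputs(fake_session, n=2))  # {3: [1, 2, 3], 4: 'done'}

# In a real notebook the equivalent calls would be:
#   total = Out[1]               # output of the cell shown as In [1]
#   recent_outputs(globals())    # which execution counts have a cached output
```

IPython also exposes the most recent output as _ and the output of cell n as _n, which saves typing when you only need the last result.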

#2) Enrich the default preview of a DataFrame

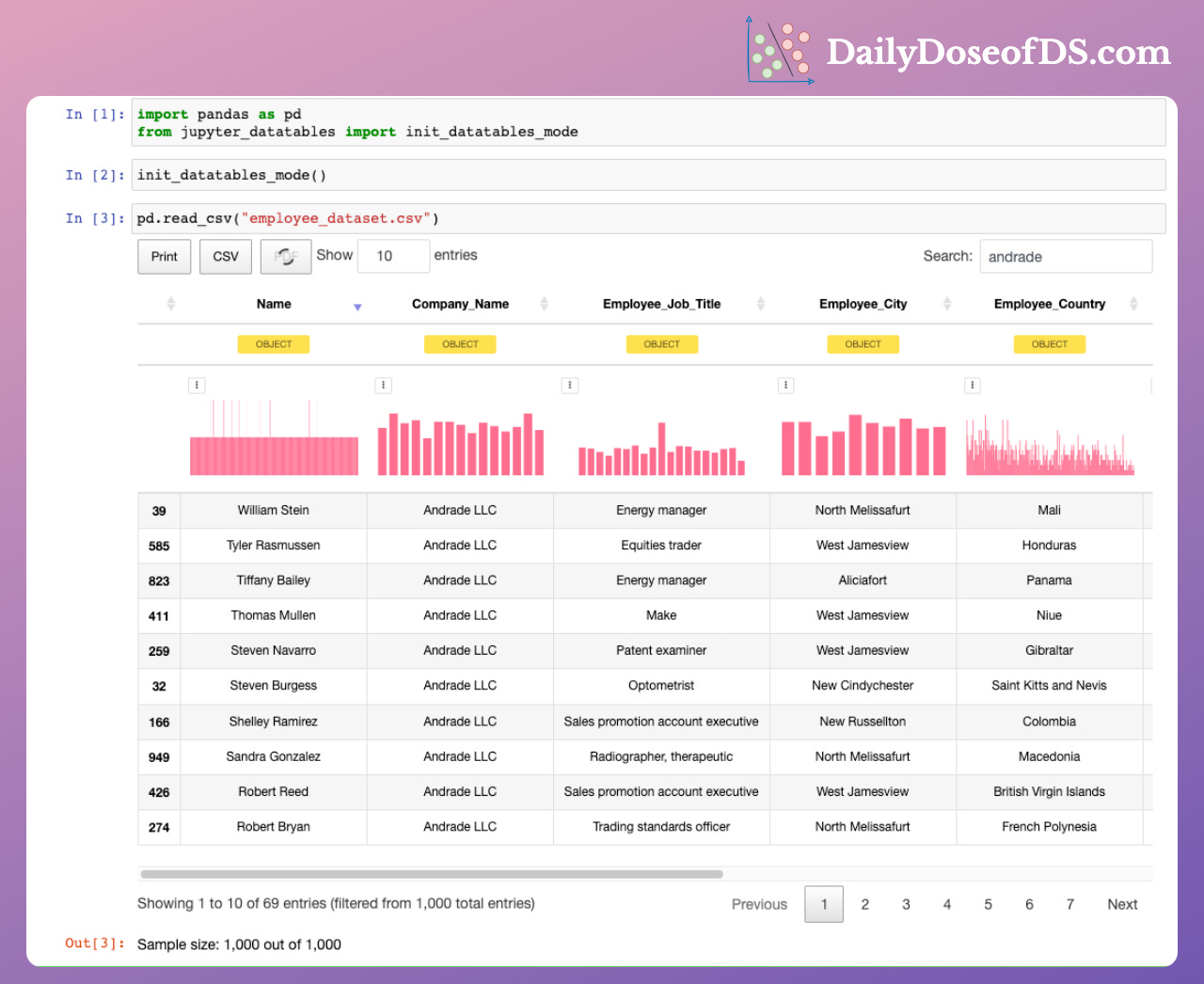

Often when we load a DataFrame in Jupyter, we preview it by printing, as shown below:

However, this tells us hardly anything about what’s inside the data.

Instead, use Jupyter-DataTables.

It supercharges the default preview of a DataFrame with many useful features, as depicted above.

This richer preview provides sorting, filtering, exporting, and pagination, along with each column’s distribution and data type.

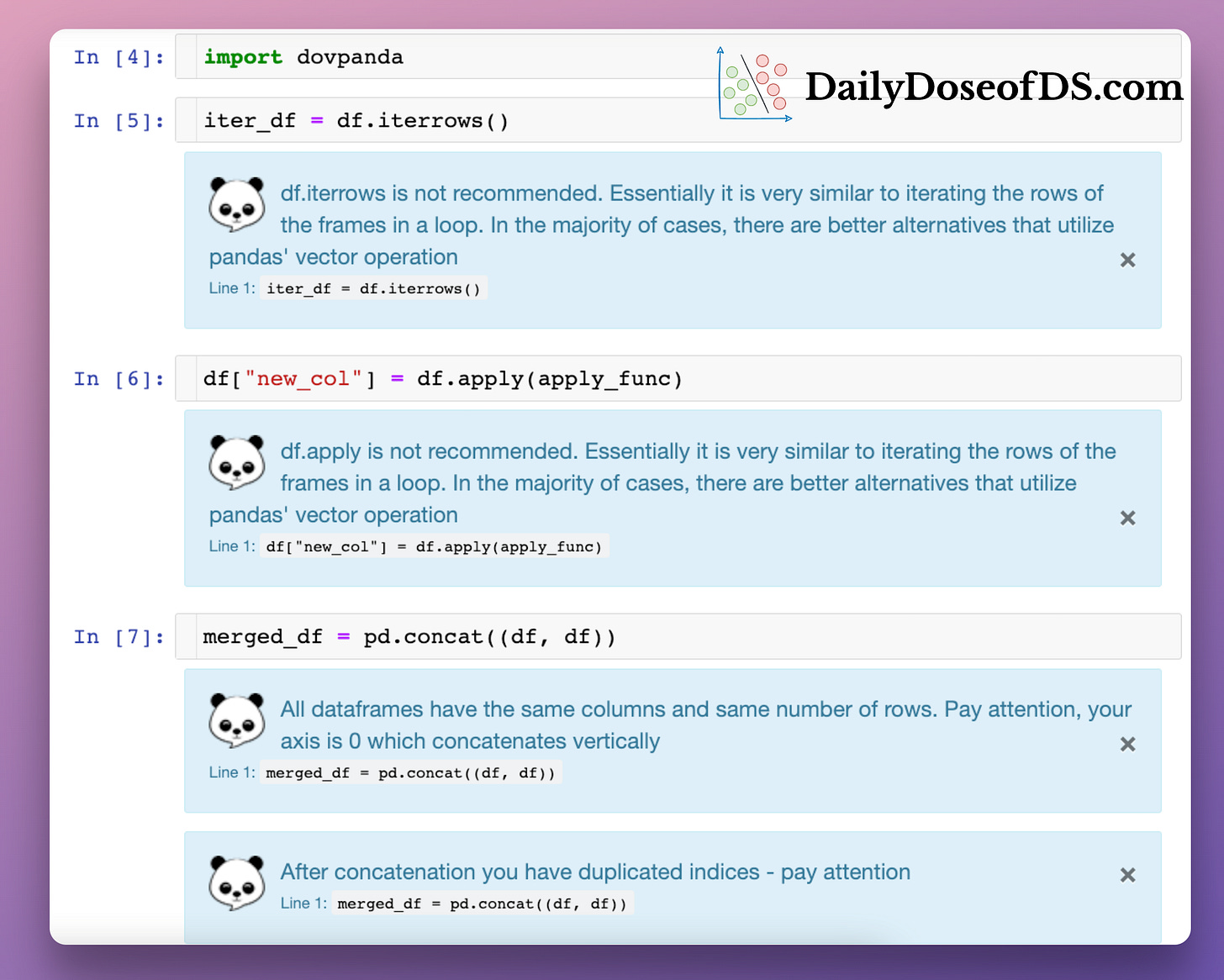

#3) Generate helpful hints as you write Pandas code

Many Pandas methods have faster alternatives.

Using the slower ones can significantly slow down data analysis.

Dovpanda is a pretty cool tool that gives suggestions/warnings about your data manipulation steps.

Whenever we use an unoptimized method, it automatically displays a warning and a suggestion.
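As a rough illustration of the kind of pattern involved, compare a row-by-row loop with its vectorized equivalent. Whether dovpanda flags this exact case is an assumption, and the column names and values are made up.

```python
import pandas as pd

# Made-up data, purely for illustration.
df = pd.DataFrame({"price": [10.0, 20.0, 30.0], "qty": [1, 2, 3]})

# Slow pattern: a Python-level loop that builds one Series per row.
totals_loop = [row["price"] * row["qty"] for _, row in df.iterrows()]

# Faster equivalent: a single column-wise multiplication.
totals_vec = df["price"] * df["qty"]

print(totals_vec.tolist())  # [10.0, 40.0, 90.0]
```

Both versions produce the same numbers; the difference only shows up in runtime as the DataFrame grows.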

#4) Improve rendering of DataFrames

In a recent issue on Sparklines, we learned that whenever we display a DataFrame in Jupyter, it is rendered using HTML and CSS.

This means that we can format its output just like web pages.

Many Jupyter users preview raw DataFrames for data analysis tasks.

But unknown to them, styling can make data analysis much easier and faster, as depicted below:

The above styling provides so much clarity over a raw DataFrame.

To style a Pandas DataFrame, use its Styling API (df.style). The DataFrame is then rendered with the specified styling.

#5) Restart the Jupyter kernel without losing variables

While working in a Jupyter Notebook, you may want to restart the kernel for several reasons.

If there are any active data/model objects, most users dump them to disk, restart the kernel, and then load them back.

But this is rarely necessary.

Use the %store magic command.

It lets you store a variable and retrieve it later, even after restarting the kernel.

This way, you avoid the hassle of manually dumping an object to disk and loading it back.
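One caveat worth checking before relying on this: %store keeps values in IPython’s pickle-based database, so the object has to be picklable. The sketch below uses a hypothetical helper, survives_store, and a made-up params dictionary to test that up front.

```python
import pickle


def survives_store(obj):
    """Return True if obj can make a pickle round trip.

    Assumption: %store persists values with pickle, so an object that
    cannot be pickled cannot be stored either.
    """
    try:
        pickle.loads(pickle.dumps(obj))
    except Exception:
        return False
    return True


params = {"learning_rate": 0.01, "epochs": 20}  # hypothetical object worth keeping
print(survives_store(params))           # True
print(survives_store(lambda x: x + 1))  # False: lambdas cannot be pickled

# In a notebook, the workflow itself is two magics:
#   %store params       # before restarting the kernel
#   %store -r params    # after the restart, to bring the variable back
```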

#6) Search code in all Jupyter Notebooks from the terminal

Context: I have published over 600 newsletter issues so far.

So my local directory is filled with Jupyter notebooks (392 as of today), which contain the code for most of the issues published here.

Thus, whenever I wanted to refer to some code I had written in an earlier notebook, finding that specific notebook was tough.

This involved plenty of manual effort.

But later, I discovered an open-source tool: nbcommands.

Using it, we can search for code across Jupyter notebooks right from the terminal.

A task that often used to take me 5-10 minutes now takes me only a couple of seconds.
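To get a feel for what such a search involves, here is a standard-library sketch. It is not how nbcommands is implemented; the function name search_notebooks is made up, and the only assumption is that notebooks are JSON files with a "cells" list, as in the nbformat layout.

```python
import json
from pathlib import Path


def search_notebooks(root, needle):
    """Yield (path, cell index, line) for code-cell lines containing needle."""
    for path in sorted(Path(root).rglob("*.ipynb")):
        if ".ipynb_checkpoints" in path.parts:
            continue  # skip Jupyter's autosave copies
        try:
            notebook = json.loads(path.read_text(encoding="utf-8"))
        except (OSError, json.JSONDecodeError, UnicodeDecodeError):
            continue  # unreadable or not valid JSON
        if not isinstance(notebook, dict):
            continue  # valid JSON, but not a notebook
        for index, cell in enumerate(notebook.get("cells", [])):
            if cell.get("cell_type") != "code":
                continue  # ignore markdown and raw cells
            source = cell.get("source", [])
            # The source is stored as a list of lines or as a single string.
            text = "".join(source) if isinstance(source, list) else source
            for line in text.splitlines():
                if needle in line:
                    yield path, index, line.strip()


# Example usage from the directory that holds your notebooks:
#   for path, index, line in search_notebooks(".", "read_csv"):
#       print(f"{path} [cell {index}]: {line}")
```

A dedicated tool is still the better choice for everyday use; the sketch only shows that a notebook is plain JSON and therefore easy to search.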

Pretty cool, isn’t it?

That’s it for today.

Hope you learned something new :)

👉 Over to you: What are some other cool Jupyter hacks that you are aware of?

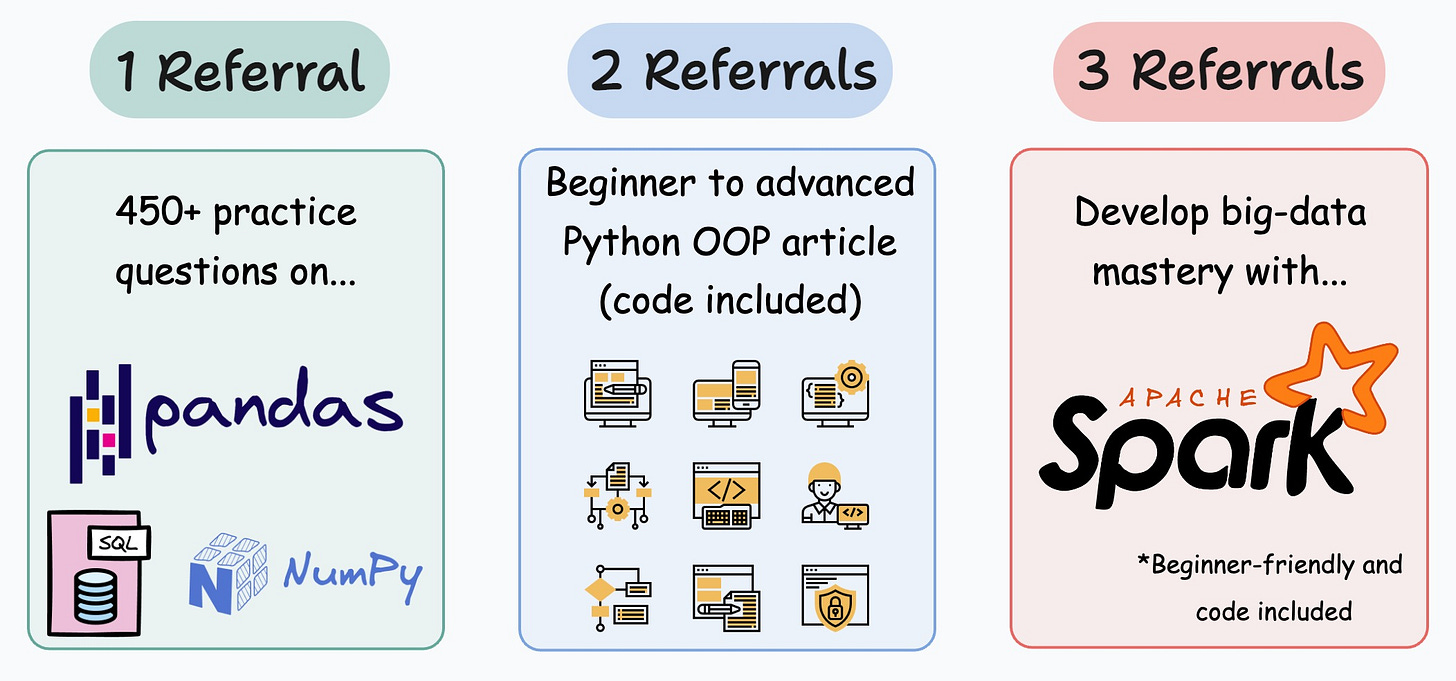

1 Referral: Unlock 450+ practice questions on NumPy, Pandas, and SQL.

2 Referrals: Get access to advanced Python OOP deep dive.

3 Referrals: Get access to the PySpark deep dive for big-data mastery.

Get your unique referral link:

Are you overwhelmed with the amount of information in ML/DS?

Every week, I publish no-fluff deep dives on topics that truly matter to your skills for ML/DS roles.

For instance:

A Beginner-friendly Introduction to Kolmogorov Arnold Networks (KANs).

5 Must-Know Ways to Test ML Models in Production (Implementation Included).

Understanding LoRA-derived Techniques for Optimal LLM Fine-tuning

8 Fatal (Yet Non-obvious) Pitfalls and Cautionary Measures in Data Science

Implementing Parallelized CUDA Programs From Scratch Using CUDA Programming

You Are Probably Building Inconsistent Classification Models Without Even Realizing.

How To (Immensely) Optimize Your Machine Learning Development and Operations with MLflow.

And many many more.

Join below to unlock all full articles:

SPONSOR US

Get your product in front of more than 76,000 data scientists and other tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters — thousands of leaders, senior data scientists, machine learning engineers, data analysts, etc., who have influence over significant tech decisions and big purchases.

To ensure your product reaches this influential audience, reserve your space here or reply to this email.