7 Categorical Data Encoding Techniques

...summarized in a single frame.

Here are 7 ways to encode categorical features:

One-hot encoding:

Each category is represented by a binary vector of 0s and 1s.

Each category gets its own binary feature, and only one of them is "hot" (set to 1) at a time, indicating the presence of that category.

Number of features = Number of unique categorical labels
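As a rough illustration, here is a minimal pure-Python sketch of one-hot encoding. The helper name `one_hot_encode`, the toy `colors` list, and the alphabetical column order are all assumptions made for this example, not part of any library.

```python
def one_hot_encode(values):
    """Return (categories, rows); each row has exactly one 1."""
    # Assumption: columns follow the alphabetical order of the categories.
    categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1  # the single "hot" position
        rows.append(row)
    return categories, rows


colors = ["red", "green", "blue", "green"]  # toy data
categories, encoded = one_hot_encode(colors)
print(categories)  # ['blue', 'green', 'red']
for color, row in zip(colors, encoded):
    print(color, row)
```

Three unique labels produce three features, and every row sums to 1.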

Dummy encoding:

Same as one-hot encoding but with one additional step.

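After one-hot encoding, we drop one of the features. Any one works (the first is a common choice), and the dropped category becomes the reference category, represented by a row of all zeros.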

This is done to avoid the dummy variable trap: the one-hot features always sum to 1, so any one of them can be derived from the others, which makes them perfectly collinear with the intercept term of a linear model.

Number of features = Number of unique categorical labels - 1.
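A minimal sketch of dummy encoding, assuming we drop the alphabetically first category. The helper name `dummy_encode` and the toy data are hypothetical, chosen only for illustration.

```python
def dummy_encode(values):
    """One-hot encode, then drop one column (here: the first category)."""
    categories = sorted(set(values))
    # Assumption: the first category is the one we drop (the "reference").
    reference, kept = categories[0], categories[1:]
    rows = [[1 if v == cat else 0 for cat in kept] for v in values]
    return reference, kept, rows


colors = ["red", "green", "blue", "green"]  # toy data
reference, kept, encoded = dummy_encode(colors)
print(reference)  # blue
print(kept)       # ['green', 'red']
for color, row in zip(colors, encoded):
    print(color, row)  # 'blue' maps to [0, 0]
```

The reference category is still recoverable: it is the only one encoded as all zeros.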

Effect encoding:

Similar to dummy encoding but with one additional step.

Set every feature to -1 in the rows that are all zeros, i.e., the rows of the dropped (reference) category.

With this coding, the coefficients of a linear model measure how much each category deviates from the overall mean across categories, rather than from the reference category as in dummy encoding.

Number of features = Number of unique categorical labels - 1.
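A minimal sketch of effect encoding under the same assumption as dummy encoding (the alphabetically first category is the reference). The helper name `effect_encode` is hypothetical.

```python
def effect_encode(values):
    """Dummy encode, then replace the all-zero reference rows with -1s."""
    categories = sorted(set(values))
    # Assumption: the first category acts as the reference.
    reference, kept = categories[0], categories[1:]
    rows = []
    for v in values:
        if v == reference:
            rows.append([-1] * len(kept))  # reference row: all -1
        else:
            rows.append([1 if v == cat else 0 for cat in kept])
    return reference, kept, rows


colors = ["red", "green", "blue", "green"]  # toy data
reference, kept, encoded = effect_encode(colors)
for color, row in zip(colors, encoded):
    print(color, row)  # 'blue' maps to [-1, -1]
```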

Label encoding:

Assign each category a unique integer label.

Because the labels are integers, a model may read an ordering into them (e.g., 2 > 1) that the categories do not actually have.

Number of features = 1.
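A minimal sketch of label encoding. The helper name `label_encode` is hypothetical, and assigning integers in alphabetical order is an assumption made for this example; the point is that the resulting order is arbitrary.

```python
def label_encode(values):
    """Map each category to an integer; the order carries no meaning."""
    # Assumption: integers are handed out in alphabetical order.
    categories = sorted(set(values))
    mapping = {cat: i for i, cat in enumerate(categories)}
    return mapping, [mapping[v] for v in values]


colors = ["red", "green", "blue", "green"]  # toy data
mapping, codes = label_encode(colors)
print(mapping)  # {'blue': 0, 'green': 1, 'red': 2}
print(codes)    # [2, 1, 0, 1]
```

Here "red" (2) ends up "greater than" "blue" (0) only because of the alphabet, which is exactly the spurious ordering to watch out for.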

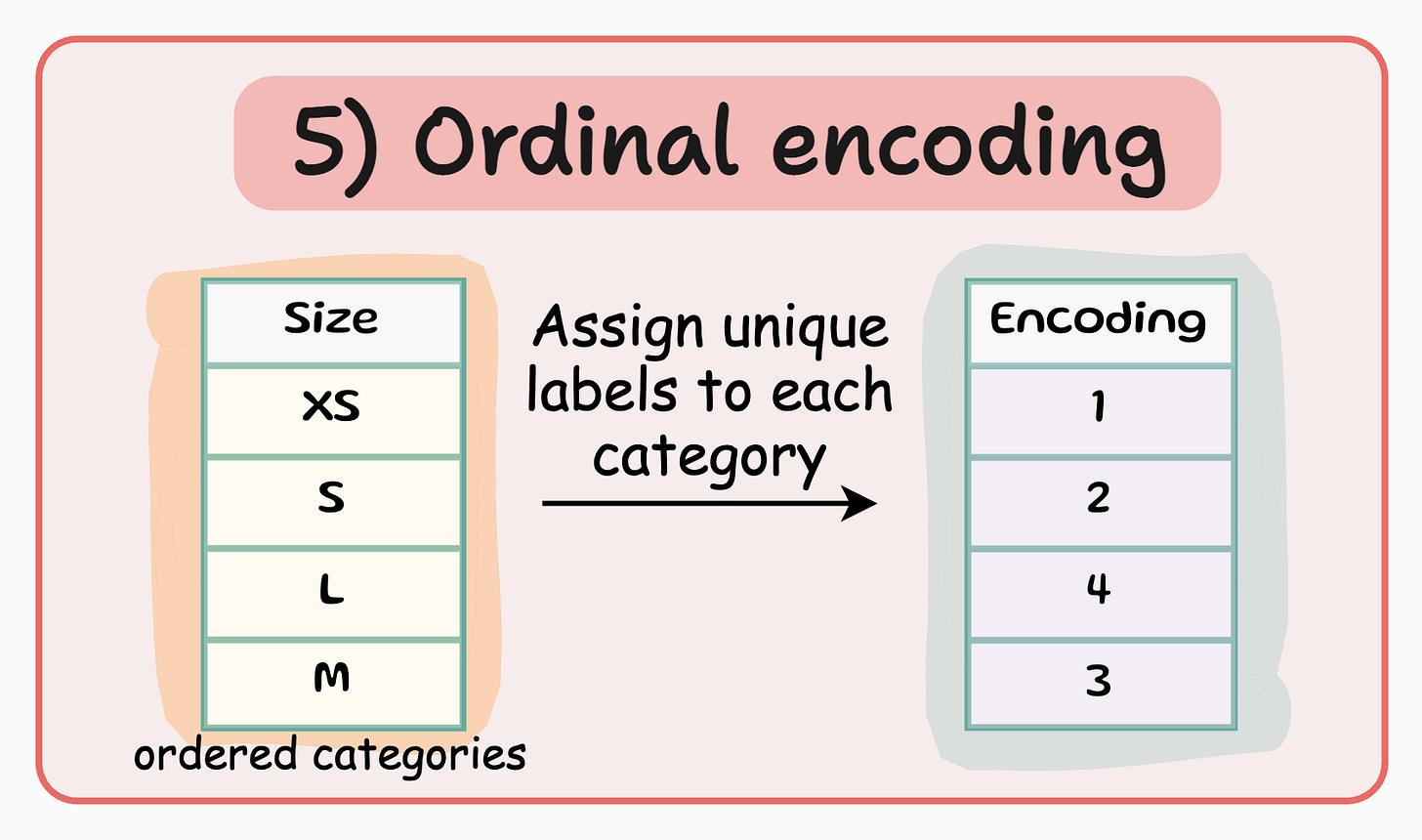

Ordinal encoding:

Similar to label encoding: assign a unique integer value to each category.

Here, however, the integers follow the natural order of the categories (e.g., small < medium < large), so one category is meaningfully greater or smaller than another.

Number of features = 1.
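A minimal sketch of ordinal encoding, assuming the caller supplies the category order explicitly. The helper name `ordinal_encode` and the `sizes` data are hypothetical.

```python
def ordinal_encode(values, ordered_categories):
    """Map each category to its rank in a caller-supplied order."""
    mapping = {cat: rank for rank, cat in enumerate(ordered_categories)}
    return [mapping[v] for v in values]


sizes = ["medium", "small", "large", "small"]  # toy data
# Assumption: the meaningful order is small < medium < large.
codes = ordinal_encode(sizes, ["small", "medium", "large"])
print(codes)  # [1, 0, 2, 0]
```

The only difference from label encoding is where the order comes from: it is stated by us rather than left to chance.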

Count encoding:

Also known as frequency encoding.

Encodes categorical features based on the frequency of each category.

Thus, instead of replacing the categories with arbitrary integer labels or binary representations, count encoding replaces each category with the number of times it occurs in the data.

Number of features = 1.
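A minimal sketch of count encoding using the standard library's `collections.Counter`. The helper name `count_encode` and the toy data are hypothetical; the frequency variant shown afterwards simply divides each count by the number of rows.

```python
from collections import Counter


def count_encode(values):
    """Replace each category with how often it appears in `values`."""
    counts = Counter(values)
    return [counts[v] for v in values]


colors = ["red", "green", "blue", "green"]  # toy data
counts = count_encode(colors)
print(counts)  # [1, 2, 1, 2]

# Frequency variant: share of rows instead of raw counts.
frequencies = [c / len(colors) for c in counts]
print(frequencies)  # [0.25, 0.5, 0.25, 0.5]
```

Note that "red" and "blue" both become 1: categories with the same count are indistinguishable after this encoding.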

Binary encoding:

Combination of one-hot encoding and ordinal encoding.

It represents categories as binary code.

Each category is first assigned an ordinal value, and then that value is converted to binary code.

The binary code is then split into separate binary features.

Useful when dealing with high-cardinality categorical features (or a high number of features) as it reduces the dimensionality compared to one-hot encoding.

Number of features = ⌈log₂(n)⌉, where n is the number of unique categorical labels.
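A minimal sketch of binary encoding. The helper name `binary_encode` is hypothetical, and starting the ordinal codes at 0 is an assumption made for this example; an implementation that starts at 1 or reserves a code for unknown values may need one more bit.

```python
def binary_encode(values):
    """Ordinal-encode each category, then split its binary code into bits."""
    categories = sorted(set(values))
    # Bits needed to give n categories distinct codes 0..n-1
    # (at least one bit, even for a single category).
    n_bits = max(1, (len(categories) - 1).bit_length())
    mapping = {cat: i for i, cat in enumerate(categories)}
    rows = []
    for v in values:
        bits = format(mapping[v], f"0{n_bits}b")  # e.g. 4 -> '100'
        rows.append([int(b) for b in bits])
    return n_bits, rows


letters = ["a", "b", "c", "d", "e", "b"]  # toy data: 5 unique categories
n_bits, encoded = binary_encode(letters)
print(n_bits)  # 3
for letter, row in zip(letters, encoded):
    print(letter, row)  # both 'b' rows map to [0, 0, 1]
```

Five categories fit in three binary features, versus five features with one-hot encoding, and identical categories always get identical bit patterns.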

While these are some of the most popular techniques, do note that these are not the only techniques for encoding categorical data.

You can try plenty of techniques with the category-encoders library: Category Encoders.

👉 Over to you: What other common categorical data encoding techniques have I missed?

