A Pivotal Moment in NLP Research Which Made Static Embeddings (Almost) Obsolete

Looking back to the pre-Transformer times.

Recently, I received an email from one of the readers of this newsletter.

They showed a pretty genuine concern about being new to the field of AI and being intimidated by stuff like GPT/Transformers, etc.

And I think the problem is quite common because so many people are new to this field, and they have very little clue about what’s happening today.

And I think the first step in addressing this concern is understanding how we got where we are today — the foundational stuff.

So in today’s newsletter issue, I want to step aside from the usual data science-oriented content.

Instead, I want to help you look a decade back into NLP research, understand the pain points that existed back then, how they were addressed, and share some learnings from my research in this domain.

Today, we are discussing embeddings but in any upcoming issue, we shall discusse the architectural revolutions in NLP.

Let’s begin!

To build models for language-oriented tasks, it is crucial to generate numerical representations (or vectors) for words.

This allows words to be processed and manipulated mathematically and perform various computational operations on words.

The objective of embeddings is to capture semantic and syntactic relationships between words. This helps machines understand and reason about language more effectively.

In the pre-Transformers era, this was primarily done using pre-trained static embeddings.

Essentially, someone would train embeddings on, say, 100k, or 200k common words using deep learning techniques and open-source them.

Consequently, other researchers would utilize those embeddings in their projects.

The most popular models at that time (around 2013-2017) were:

Glove

Word2Vec

FastText, etc.

These embeddings genuinely showed some promising results in learning the relationships between words.

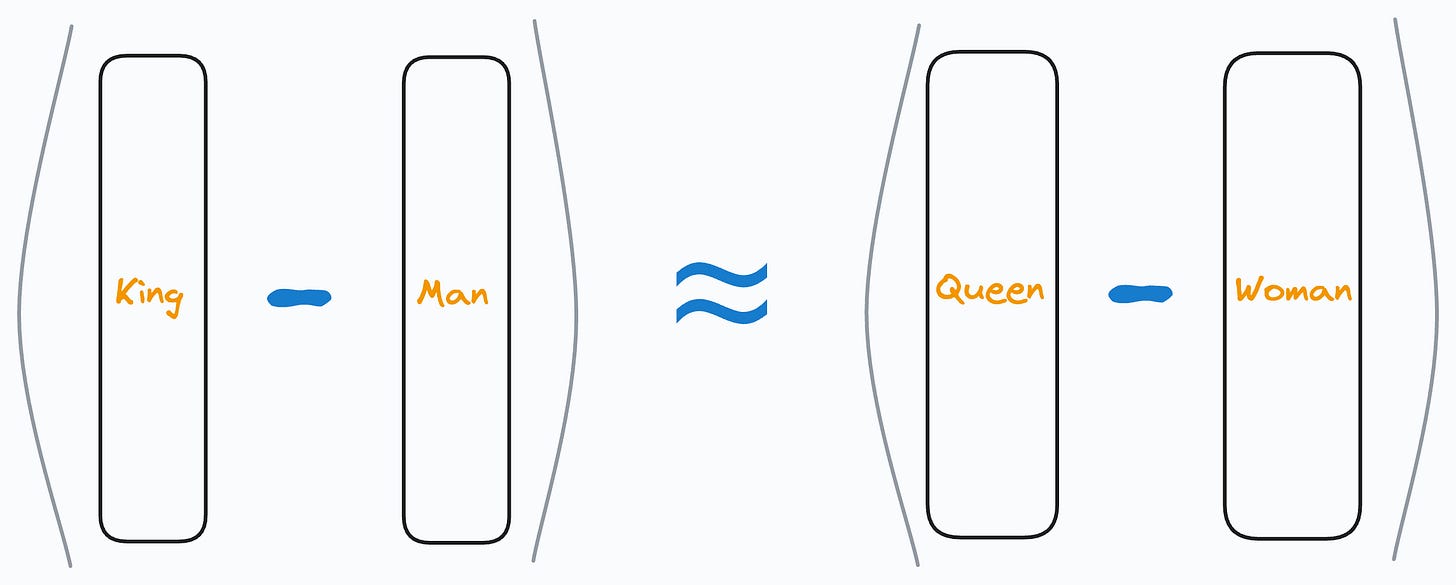

For instance, at that time, an experiment showed that the vector operation (King - Man) + Woman returned a vector near the word “Queen”.

That’s pretty interesting, isn’t it?

In fact, the following relationships were also found to be true:

Paris - France + Italy≈RomeSummer - Hot + Cold≈WinterActor - Man + Woman≈Actressand more.

So while these embeddings did capture relative representations of words, there was a major limitation.

Consider the following two sentences:

Convert this data into a table in Excel.

Put this bottle on the table.

Here, the word “table” conveys two entirely different meanings:

The first sentence refers to a “data” specific sense of the word “table”.

The second sentence refers to a “furniture” specific sense of the word “table”.

Yet, static embedding models assigned them the same representation.

Thus, these embeddings didn’t consider that a word may have different usages in different contexts.

But this was addressed in the Transformer era, which resulted in contextualized embeddings models powered by Transformers, such as:

BERT: A language model trained using two techniques:

Masked Language Modeling (MLM): Predict a missing word in the sentence, given the surrounding words.

Next Sentence Prediction (NSP).

DistilBERT: A simple, effective, and lighter version of BERT which is around 40% smaller:

Utilizes a common machine learning strategy called student-teacher theory.

Here, the student is the distilled version of BERT, and the teacher is the original BERT model.

The student model is supposed to replicate the teacher model’s behavior.

If you want to learn how this is implemented practically, we discussed it here: Model Compression: A Critical Step Towards Efficient Machine Learning.

ALBERT: A Lite BERT (ALBERT). Uses a couple of optimization strategies to reduce the size of BERT:

Eliminates one-hot embeddings at the initial layer by projecting the words into a low-dimensional space.

Shares the weights across all the network segments of the Transformer model.

These models were capable of generating context-aware representations, thanks to their self-attention mechanism.

This would allow embedding models to dynamically generate embeddings for a word based on the context they were used in.

As a result, if a word would appear in a different context, the model would get a different representation.

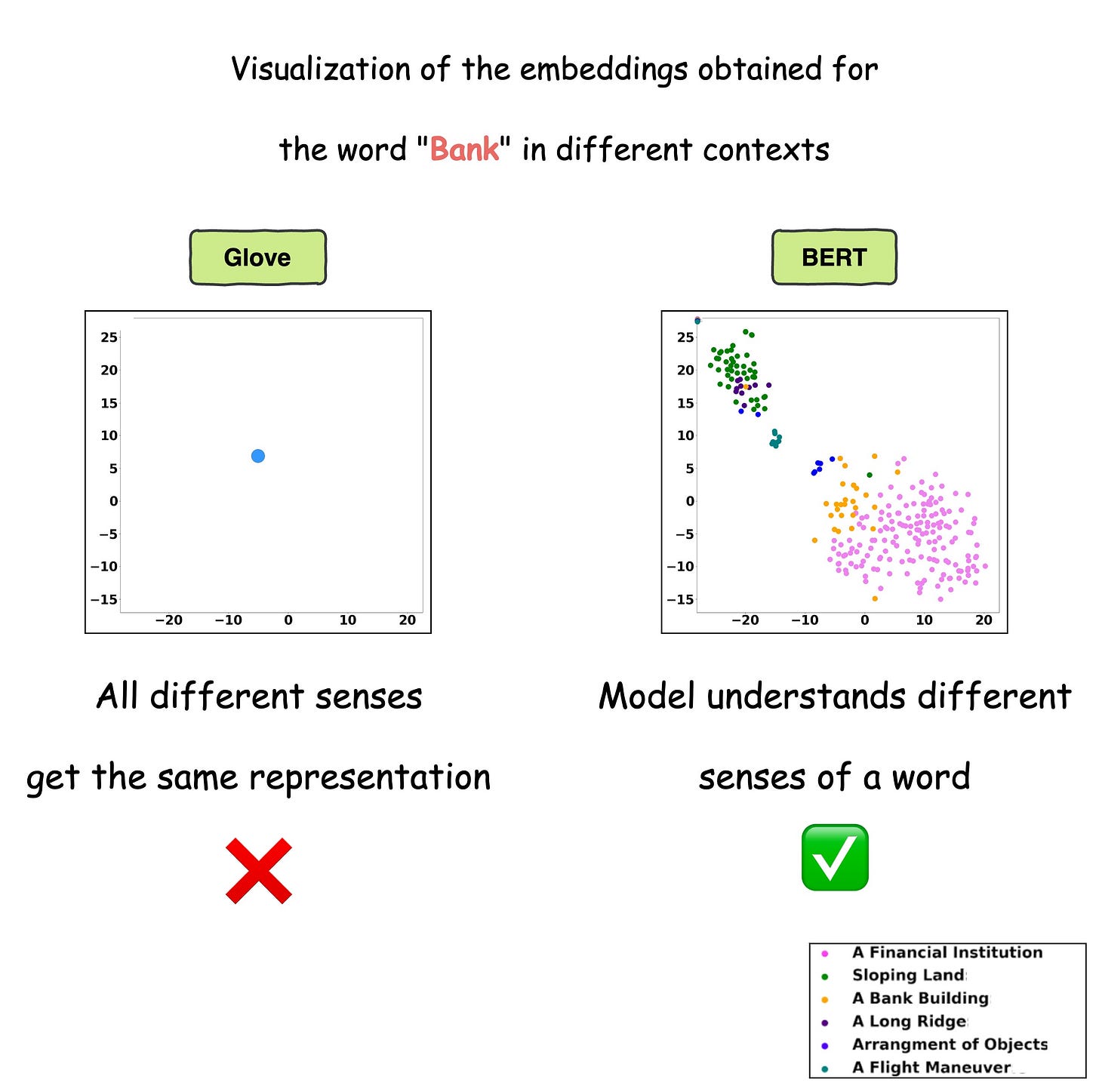

This is precisely depicted in the image below for different uses of the word “Bank”.

For visualization purposes, the embeddings have been projected into 2d space using t-SNE.

As depicted above, the static embedding models — Glove and Word2Vec produce the same embedding for different usages of a word.

However, contextualized embedding models don’t.

In fact, contextualized embeddings understand the different meanings/senses of the word “Bank”:

A financial institution

Sloping land

A Long Ridge, and more.

As a result, they addressed the major limitations of static embedding models.

For those who wish to learn in more detail, I have published a couple of research papers on this topic:

Interpretable Word Sense Disambiguation with Contextualized Embeddings.

A Comparative Study of Transformers on Word Sense Disambiguation.

These papers discuss the strengths and limitations of many contextualized embedding models in detail.

👉 Over to you: What do you think were some other pivotal moments in NLP research?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights.

The button is located towards the bottom of this email.

Thanks for reading!

Latest full articles

If you’re not a full subscriber, here’s what you missed last month:

DBSCAN++: The Faster and Scalable Alternative to DBSCAN Clustering

Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning

You Cannot Build Large Data Projects Until You Learn Data Version Control!

Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Deploy, Version Control, and Manage ML Models Right From Your Jupyter Notebook with Modelbit

Gaussian Mixture Models (GMMs): The Flexible Twin of KMeans.

To receive all full articles and support the Daily Dose of Data Science, consider subscribing:

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

👉 If you love reading this newsletter, feel free to share it with friends!

Thanks for the nice article!

This is such a greay article! Thank you so much for putting it together!