DropBlock vs. Dropout for Regularizing CNNs

Addressing a limitation of Dropout when used in CNNs.

In yesterday’s post, I shared CNN Explainer — an interactive tool to visually understand CNNs and their internal working.

Today, we shall continue our discussion on CNNs and look at an often-overlooked issue that arises when regularizing these networks with Dropout.

Let’s begin!

Background

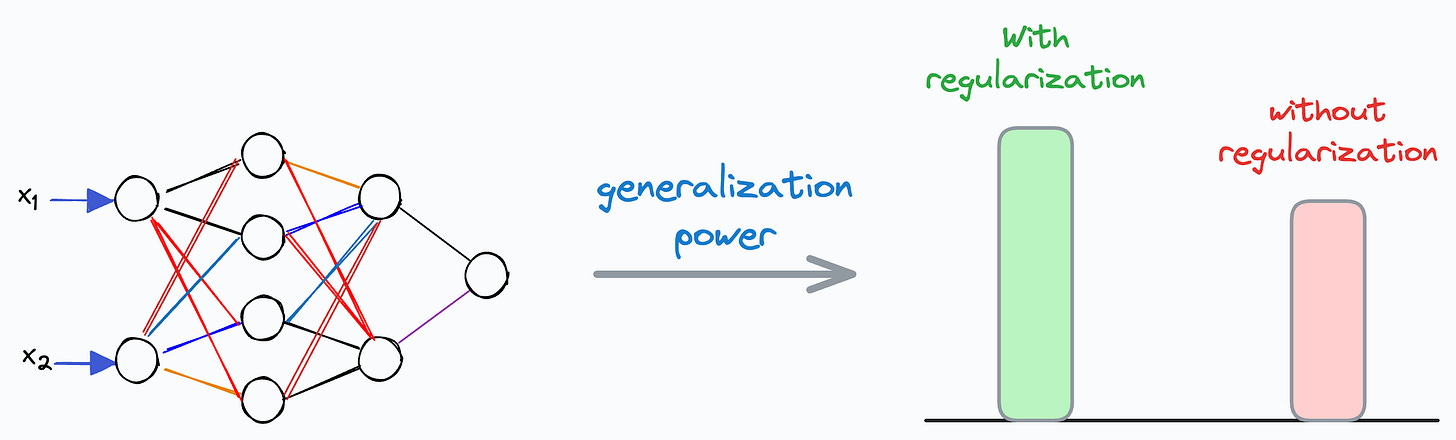

When it comes to training neural networks, it is commonly recommended to use Dropout (and other regularization techniques) to improve their generalization.

This applies not just to CNNs but to neural networks in general.

I am sure you already know this, so let’s get into the interesting part.

The problem with using Dropout in CNNs

The core operation that makes CNNs so powerful is convolution, which allows them to capture local patterns, such as edges and textures, and helps extract relevant information from the input.

The animation below depicts how the convolution operation works.

From a purely mathematical perspective, we slide a filter (shown in yellow below) over the input (shown in green below) and, at every position, multiply the filter element-wise with the overlapped input and sum the products to get one value of the convolution output.
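To make the arithmetic concrete, here is a minimal pure-Python sketch of a single-channel convolution with no padding and stride 1. As in most deep-learning libraries, the filter is not flipped (strictly speaking, this is cross-correlation). The function name `conv2d_valid` and the toy values are illustrative only.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and, at each
    position, sum the element-wise products of the overlapping values."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


image = [
    [1, 2, 0, 1],
    [0, 1, 3, 1],
    [2, 1, 0, 0],
    [1, 0, 1, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]
print(conv2d_valid(image, kernel))  # [[0, -1, -1], [-1, 1, 3], [2, 0, -2]]
```

Each output value depends only on a small local window of the input, which is exactly why neighboring pixels matter so much in the discussion that follows.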

Here, if we were to apply the traditional Dropout, the input features would look something like this:

In fully connected layers, Dropout zeroes out individual neurons. In CNNs, however, it randomly zeroes out individual pixel values (activations) before convolution, as depicted above.

However, this has been found to be much less effective for convolution layers.
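A minimal sketch of this pixel-level Dropout, assuming the common "inverted dropout" convention in which surviving values are scaled by 1/(1 − p) during training. The helper `dropout2d_pixels` is hypothetical and works on plain nested lists rather than a real framework tensor.

```python
import random


def dropout2d_pixels(features, p, rng):
    """Standard (inverted) Dropout on a 2D feature map: each value is zeroed
    independently with probability p, and survivors are scaled by 1/(1-p)
    so the expected value of every entry is unchanged."""
    if not 0 <= p < 1:
        raise ValueError("p must be in [0, 1)")
    scale = 1.0 / (1.0 - p)
    return [
        [0.0 if rng.random() < p else value * scale for value in row]
        for row in features
    ]


rng = random.Random(0)
features = [[1.0] * 6 for _ in range(6)]
dropped = dropout2d_pixels(features, p=0.3, rng=rng)
for row in dropped:
    # Dropped positions are shown as "." to make the scattered pattern visible.
    print(" ".join(f"{v:4.2f}" if v else "   ." for v in row))
```

Note that the zeros land on isolated, scattered pixels, so almost every dropped pixel still has kept neighbors.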

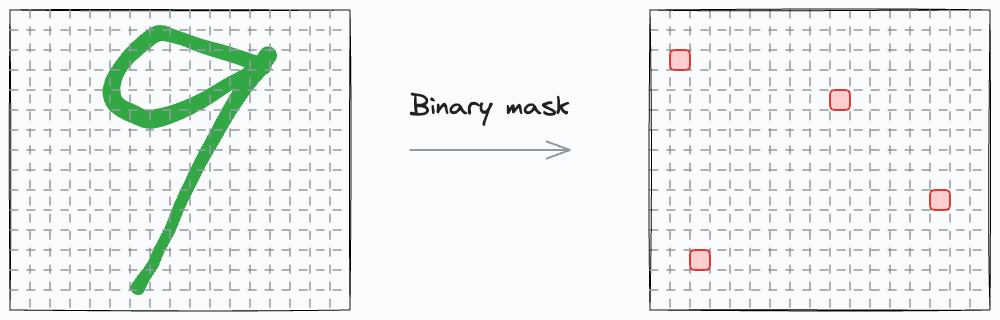

To understand why, suppose we have some image data. In natural images, nearby features (or pixels) are typically highly correlated spatially.

For instance, imagine zooming in to the pixel level of the digit ‘9’.

Here, we would notice that the red pixel (or feature) is highly correlated with other features in its vicinity:

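Thus, dropping the red feature using Dropout will likely have little effect, because its information can still reach the next layer through its correlated neighbors, which the convolution filter covers as well.

Simply put, the spatial correlation of features, combined with the local, overlapping nature of convolution, defeats much of the purpose of the traditional Dropout procedure.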
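The redundancy argument can be checked numerically. The sketch below assumes a smooth image region, modeled (for illustration only) as a linear intensity ramp, and estimates a "dropped" pixel purely from its 8 neighbors. The helper `neighbor_estimate` is hypothetical.

```python
def neighbor_estimate(image, i, j):
    """Estimate image[i][j] as the mean of its 8 surrounding pixels,
    ignoring the pixel itself (as if Dropout had zeroed it)."""
    neighbors = [
        image[i + di][j + dj]
        for di in (-1, 0, 1)
        for dj in (-1, 0, 1)
        if (di, dj) != (0, 0)
    ]
    return sum(neighbors) / len(neighbors)


# A smooth 7x7 region, modeled here as a gentle linear intensity ramp.
image = [[10 * i + 5 * j for j in range(7)] for i in range(7)]

true_value = image[3][3]
estimate = neighbor_estimate(image, 3, 3)
print(true_value, estimate)  # 45 45.0
```

Real images are not perfectly linear, so the estimate will not be exact in general, but the more strongly neighboring pixels are correlated, the less a single dropped pixel actually hides.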

The solution

DropBlock is a more effective and intuitive way to regularize CNNs.

The core idea in DropBlock is to drop a contiguous region of features (or pixels) rather than individual pixels.

This is depicted below:

Just as Dropout in fully connected layers forces the network to find more robust ways to fit the data when some activations are missing, DropBlock forces the convolution layers to fit the data robustly even when an entire block of features is missing.

Moreover, the idea of DropBlock makes intuitive sense: if an entire contiguous region of a feature map is dropped, its information can no longer be recovered from the immediate neighbors, so the problem of using Dropout with the convolution operation is avoided.
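A quick way to see the difference is to count how many of a dropped pixel's 8 immediate neighbors survive under each scheme. The sketch below uses hypothetical 7×7 masks: a single dropped pixel for Dropout, and a 3×3 block for DropBlock.

```python
def surviving_neighbors(mask, i, j):
    """Count how many of the 8 neighbors of (i, j) are kept (mask == 1)."""
    return sum(
        mask[i + di][j + dj]
        for di in (-1, 0, 1)
        for dj in (-1, 0, 1)
        if (di, dj) != (0, 0)
    )


size, ci, cj = 7, 3, 3

# Dropout-style mask: only the single pixel (3, 3) is dropped.
pixel_mask = [[1] * size for _ in range(size)]
pixel_mask[ci][cj] = 0

# DropBlock-style mask: the whole 3x3 block centered on (3, 3) is dropped.
block_size = 3
half = block_size // 2
block_mask = [
    [0 if abs(r - ci) <= half and abs(c - cj) <= half else 1 for c in range(size)]
    for r in range(size)
]

print(surviving_neighbors(pixel_mask, ci, cj))  # 8
print(surviving_neighbors(block_mask, ci, cj))  # 0
```

With the block dropped, a 3×3 filter centered on that pixel sees none of the region's original values, so the information cannot leak through the immediate neighbors.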

DropBlock parameters

DropBlock has two main parameters:

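block_size: The side length of the square block to be dropped, i.e., each dropped region covers block_size × block_size features.

drop_rate: The target fraction of features to drop. It is converted into γ, the probability with which each pixel is sampled as the center of a dropped block.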
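In the DropBlock paper (Ghiasi et al., 2018), the target rate is expressed as keep_prob, with drop_rate = 1 − keep_prob, and converted into γ. Dividing by block_size² accounts for each center dropping roughly block_size² pixels, and the second factor compensates for centers only being sampled where the whole block fits. Because blocks can overlap, the resulting drop fraction is only approximately drop_rate. The function below is a sketch of that formula; `dropblock_gamma` is a hypothetical name, and `feat_size` assumes a square feature map.

```python
def dropblock_gamma(drop_rate, block_size, feat_size):
    """Probability of sampling each valid pixel as a block center:

        gamma = drop_rate / block_size**2 * feat_size**2 / (feat_size - block_size + 1)**2

    where drop_rate = 1 - keep_prob and the feature map is feat_size x feat_size.
    """
    if not 1 <= block_size <= feat_size:
        raise ValueError("block_size must be between 1 and feat_size")
    valid = feat_size - block_size + 1  # centers whose block fits fully inside
    return drop_rate / block_size ** 2 * feat_size ** 2 / valid ** 2


print(dropblock_gamma(0.1, 7, 14))  # roughly 0.00625
```

With block_size = 1 the formula reduces to γ = drop_rate, i.e., plain pixel-level Dropout.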

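To apply DropBlock, we first create a binary mask of block centers by sampling each position of the input from a Bernoulli(γ) distribution; only positions whose whole block fits inside the feature map are eligible.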
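A minimal sketch of the sampling step, assuming a square feature map, an odd block_size (so every block has a well-defined center pixel), and a precomputed gamma. The helper `sample_block_centers` is hypothetical.

```python
import random


def sample_block_centers(feat_size, block_size, gamma, rng):
    """Return a feat_size x feat_size 0/1 mask in which 1 marks a block center.

    Only positions whose full block_size x block_size block fits inside the
    feature map are eligible; each is drawn independently from Bernoulli(gamma).
    """
    if block_size % 2 == 0:
        raise ValueError("this sketch assumes an odd block_size")
    half = block_size // 2
    mask = []
    for r in range(feat_size):
        row = []
        for c in range(feat_size):
            eligible = half <= r < feat_size - half and half <= c < feat_size - half
            row.append(1 if eligible and rng.random() < gamma else 0)
        mask.append(row)
    return mask


rng = random.Random(42)
centers = sample_block_centers(feat_size=10, block_size=3, gamma=0.05, rng=rng)
for row in centers:
    # "x" marks a sampled block center, "." everything else.
    print("".join("x" if v else "." for v in row))
```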

Next, we expand every sampled center into a block of size block_size × block_size centered on it and zero out all the features that the block covers.

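Finally, just like Dropout, the kept features are rescaled (by the ratio of total units to kept units) so that the overall activation magnitude is preserved.

Done!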
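The expansion and rescaling steps can be sketched together as follows. This is a minimal illustration on nested lists, assuming an odd block_size and a precomputed 0/1 mask of block centers; the helper `apply_dropblock` is hypothetical, and the rescaling by (total units / kept units) follows the normalization step described in the DropBlock paper.

```python
def apply_dropblock(features, centers, block_size):
    """Expand each sampled center into a block_size x block_size block of zeros,
    apply the resulting mask, and rescale kept values by
    (total units / kept units). Assumes an odd block_size."""
    if block_size % 2 == 0:
        raise ValueError("this sketch assumes an odd block_size")
    h, w = len(features), len(features[0])
    half = block_size // 2
    mask = [[1] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if centers[r][c]:
                # Zero every pixel covered by the block centered on (r, c),
                # clipped to the feature-map borders for safety.
                for rr in range(max(0, r - half), min(h, r + half + 1)):
                    for cc in range(max(0, c - half), min(w, c + half + 1)):
                        mask[rr][cc] = 0
    kept = sum(map(sum, mask))
    if kept == 0:  # everything dropped: nothing left to rescale
        return [[0.0] * w for _ in range(h)], mask
    scale = (h * w) / kept
    output = [
        [features[r][c] * mask[r][c] * scale for c in range(w)] for r in range(h)
    ]
    return output, mask


# Toy example: a 5x5 map of ones with a single sampled center at (2, 2).
features = [[1.0] * 5 for _ in range(5)]
centers = [[0] * 5 for _ in range(5)]
centers[2][2] = 1
output, mask = apply_dropblock(features, centers, block_size=3)
for row in output:
    print(" ".join(f"{v:.4f}" for v in row))
```

Here 9 of the 25 units are dropped, so each of the 16 kept units is scaled by 25/16 = 1.5625 and the total activation stays at 25.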

The efficacy of DropBlock over Dropout is evident from the results table below:

On the ImageNet classification dataset (top-1 accuracy):

DropBlock provides a 1.33 percentage-point gain over Dropout.

DropBlock with label smoothing provides a 1.55 percentage-point gain over Dropout.

We covered label smoothing in this newsletter issue: Regularize Neural Networks Using Label Smoothing.

Thankfully, DropBlock is also available in the PyTorch ecosystem: torchvision ships it as torchvision.ops.DropBlock2d.

There’s also a standalone library for DropBlock, called “dropblock,” which additionally provides a linear scheduler for drop_rate.

This matters because the researchers who proposed DropBlock found the technique to be more effective when drop_rate was increased gradually during training rather than fixed from the start.

The dropblock library implements this scheduler, but you can also write the schedule yourself in plain PyTorch, so it is entirely up to you which implementation you use.
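If you prefer not to depend on the dropblock library, the schedule itself is simple. Below is a minimal sketch, assuming a linear ramp of drop_rate from 0 to the target over a fixed number of steps, then holding it constant (the paper phrases this as decreasing keep_prob linearly from 1 to the target value). The helper `linear_drop_rate` is hypothetical and is not the library's `LinearScheduler` API.

```python
def linear_drop_rate(step, total_steps, target_drop_rate, start_drop_rate=0.0):
    """Linearly ramp drop_rate from start_drop_rate (at step 0) to
    target_drop_rate (at step total_steps - 1), then hold it constant."""
    if total_steps < 2:
        return target_drop_rate
    t = min(step, total_steps - 1) / (total_steps - 1)
    return start_drop_rate + t * (target_drop_rate - start_drop_rate)


schedule = [linear_drop_rate(s, 5, 0.1) for s in range(7)]
print([round(r, 3) for r in schedule])  # [0.0, 0.025, 0.05, 0.075, 0.1, 0.1, 0.1]
```

In a training loop, you would compute this value at each step (or epoch) and assign it to whatever drop-probability setting your DropBlock module exposes.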

Isn’t that a simple, intuitive, and better regularization technique for CNNs?

👉 Over to you: What are some other ways to regularize CNNs specifically?
