Probability vs. Likelihood

Here's the key difference.

In data science and statistics, many folks use “probability” and “likelihood” interchangeably.

However, likelihood and probability DO NOT convey the same meaning.

And the misunderstanding is somewhat understandable, given that they carry similar meanings in our regular language.

While writing today’s newsletter, I searched for their meaning in the Cambridge Dictionary.

Here’s what it says:

Probability: the level of possibility of something happening or being true.

Likelihood: the chance that something will happen.

If you notice closely, “likelihood” is the only synonym of “probability”.

Anyway.

In my opinion, it is crucial to understand that probability and likelihood convey very different meanings in data science and statistics.

Let’s understand!

Probability is used in contexts where you wish to know the chance of an event occurring.

For instance, what is the:

Probability of obtaining an even number in a die roll?

Probability of drawing an ace of diamonds from a deck?

and so on…
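Both questions above can be answered by counting favorable outcomes over all equally likely outcomes. Here is a minimal sketch, assuming a fair six-sided die and a standard 52-card deck (the variable names are only illustrative):

```python
from fractions import Fraction

# Assumption: a fair die, so all six faces are equally likely.
die_faces = [1, 2, 3, 4, 5, 6]
even_faces = [face for face in die_faces if face % 2 == 0]
p_even = Fraction(len(even_faces), len(die_faces))

# Assumption: a standard 52-card deck with 13 ranks in each of 4 suits.
ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = [(rank, suit) for suit in suits for rank in ranks]
p_ace_of_diamonds = Fraction(deck.count(("A", "diamonds")), len(deck))

print(p_even)             # 1/2
print(p_ace_of_diamonds)  # 1/52
```

Notice that nothing here is estimated from data. The setup (fair die, full deck) is taken as given, and we only ask about the outcome.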

When translated to ML, probability can be thought of as:

What is the probability that a transaction is fraud?

What is the probability that an image depicts a cat?

and so on…

Essentially, many classification models, such as logistic regression or a classification neural network, assign the probability of a specific label to an input.
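To make this concrete, here is a rough sketch of how a logistic regression model turns an input into a probability. The weights, bias, and feature names are hypothetical values made up for illustration; in practice they would come from training.

```python
import math

def predict_probability(features, weights, bias):
    """Logistic regression: sigmoid of a weighted sum of the features."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, already-trained parameters (treated as known and trusted).
weights = [0.8, 1.5]  # [scaled transaction amount, is_foreign flag]
bias = -2.0

# Hypothetical transaction: scaled amount 1.2, made abroad.
transaction = [1.2, 1.0]
p_fraud = predict_probability(transaction, weights, bias)
print(f"P(fraud) = {p_fraud:.3f}")
```

The parameters are fixed, and the question is about the label of a new input.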

When calculating probability, the model’s parameters are known.

Also, we assume that they are trustworthy.

For instance, to determine the probability of a head in a coin toss, we mostly assume and trust that it is a fair coin.
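As a small sketch of this idea, the snippet below fixes the parameter (a fair coin, p = 0.5) and asks about the chance of different outcomes. It assumes independent tosses, and the helper name `binomial_probability` is just for illustration.

```python
from math import comb

def binomial_probability(num_heads, num_tosses, p_heads):
    """P(exactly num_heads heads in num_tosses independent tosses)."""
    num_tails = num_tosses - num_heads
    return comb(num_tosses, num_heads) * p_heads**num_heads * (1 - p_heads)**num_tails

p_fair = 0.5  # the parameter is known and trusted

p_single_head = binomial_probability(1, 1, p_fair)
p_nine_of_ten = binomial_probability(9, 10, p_fair)

print(p_single_head)  # 0.5
print(p_nine_of_ten)  # 0.009765625 (= 10 / 1024)
```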

Likelihood, on the other hand, is about explaining events that have already occurred.

Unlike probability (where parameters are known and assumed to be trustworthy)...

…likelihood helps us determine if we can trust the parameters in a model based on the observed data.

Let me elaborate more on that.

Assume you have collected some 2D data and wish to fit a straight line with two parameters — slope (m) and intercept (c).

Here, likelihood is defined as the support provided by a data point for some particular parameter values in your model.

Here, you will ask questions like:

If I model this data with the parameters:

m=2 and c=1, what is the likelihood of observing the data?

m=3 and c=2, what is the likelihood of observing the data?

and so on…
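Here is a minimal sketch of how such a comparison could look in code. It assumes that each point follows y = m*x + c plus Gaussian noise with a fixed standard deviation `sigma`, which is a common modeling choice but not the only one. The data points are made up for illustration.

```python
import math

# Hypothetical observations (x, y), invented for this example.
data = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.2), (3.0, 6.8), (4.0, 9.1)]

def log_likelihood(m, c, points, sigma=1.0):
    """Log-likelihood of (m, c), assuming y = m*x + c + Gaussian noise (std sigma)."""
    total = 0.0
    for x, y in points:
        residual = y - (m * x + c)
        total += -0.5 * math.log(2 * math.pi * sigma**2) - residual**2 / (2 * sigma**2)
    return total

ll_a = log_likelihood(2, 1, data)  # candidate: m=2, c=1
ll_b = log_likelihood(3, 2, data)  # candidate: m=3, c=2

print(f"log-likelihood for m=2, c=1: {ll_a:.2f}")
print(f"log-likelihood for m=3, c=2: {ll_b:.2f}")
print(ll_a > ll_b)  # True: this data supports m=2, c=1 more strongly
```

The data stays fixed here, and only the candidate parameters change. The log of the likelihood is used because it is numerically more convenient and preserves the ranking of candidates.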

The above formulation popularly translates into maximum likelihood estimation (MLE), which we discussed in this newsletter here.

In maximum likelihood estimation, you have some observed data and you are trying to determine the specific set of parameters (θ) that maximizes the likelihood of observing the data.
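In symbols, this can be written as follows. The notation is an assumption for illustration: x_1, …, x_n are the observations, treated as independent and identically distributed, and p(x | θ) is the model's probability (or density) of one observation given the parameters.

```latex
\hat{\theta}_{\text{MLE}}
  = \arg\max_{\theta} \; L(\theta \mid x_1, \dots, x_n)
  = \arg\max_{\theta} \; \prod_{i=1}^{n} p(x_i \mid \theta)
  = \arg\max_{\theta} \; \sum_{i=1}^{n} \log p(x_i \mid \theta)
```

The last step holds because the logarithm is monotonically increasing, so maximizing the log-likelihood gives the same θ as maximizing the likelihood itself.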

Using the term “likelihood” is like:

I have a possible explanation for my data. (In the above illustration, “explanation” can be thought of as the parameters you are trying to determine)

How well does my explanation explain what I’ve already observed? This is precisely quantified with likelihood.

For instance:

Observation: The outcomes of 10 coin tosses are “HHHHHHHTHH”.

Explanation: I think it is a fair coin (p=0.5).

What is the likelihood of my explanation given the observed data? In other words, how probable are these 10 outcomes if the coin really has p=0.5?
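The coin example can be worked through numerically. The sketch below assumes independent tosses and compares the "fair coin" explanation against a biased alternative (p = 0.9, chosen only for illustration), then searches a coarse grid of candidate values for the one with the highest likelihood.

```python
tosses = "HHHHHHHTHH"  # the observed data: 9 heads, 1 tail

def likelihood(p_heads, outcomes):
    """Likelihood of p_heads: probability of this exact sequence if P(H) = p_heads."""
    result = 1.0
    for outcome in outcomes:
        result *= p_heads if outcome == "H" else (1 - p_heads)
    return result

likelihood_fair = likelihood(0.5, tosses)    # 0.5**10, roughly 0.00098
likelihood_biased = likelihood(0.9, tosses)  # 0.9**9 * 0.1, roughly 0.0387

# Coarse grid search over candidate parameters 0.01, 0.02, ..., 0.99.
candidates = [i / 100 for i in range(1, 100)]
best_p = max(candidates, key=lambda p: likelihood(p, tosses))

print(likelihood_fair)
print(likelihood_biased)
print(best_p)  # 0.9, which matches the fraction of heads (9 out of 10)
```

The observed data supports p = 0.9 far more than p = 0.5. Note that these likelihood values are not probabilities of the explanation being true; they only tell us how well each candidate explains the same data relative to the others.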

If you want to get into a more practical utility of likelihood, we covered it in:

To summarize…

It is immensely important to understand that in data science and statistics, likelihood and probability DO NOT convey the same meaning.

As explained above, they are pretty different.

In probability:

We determine the possibility of an event.

We know the parameters associated with the event and assume them to be trustworthy.

In likelihood:

We have some observations.

We have an explanation (or parameters).

Likelihood helps us quantify how well the explanation accounts for the observations, and hence whether it is trustworthy.

Hope that helped!

👉 Over to you: I would love to hear your explanation of probability and likelihood. Feel free to share :)

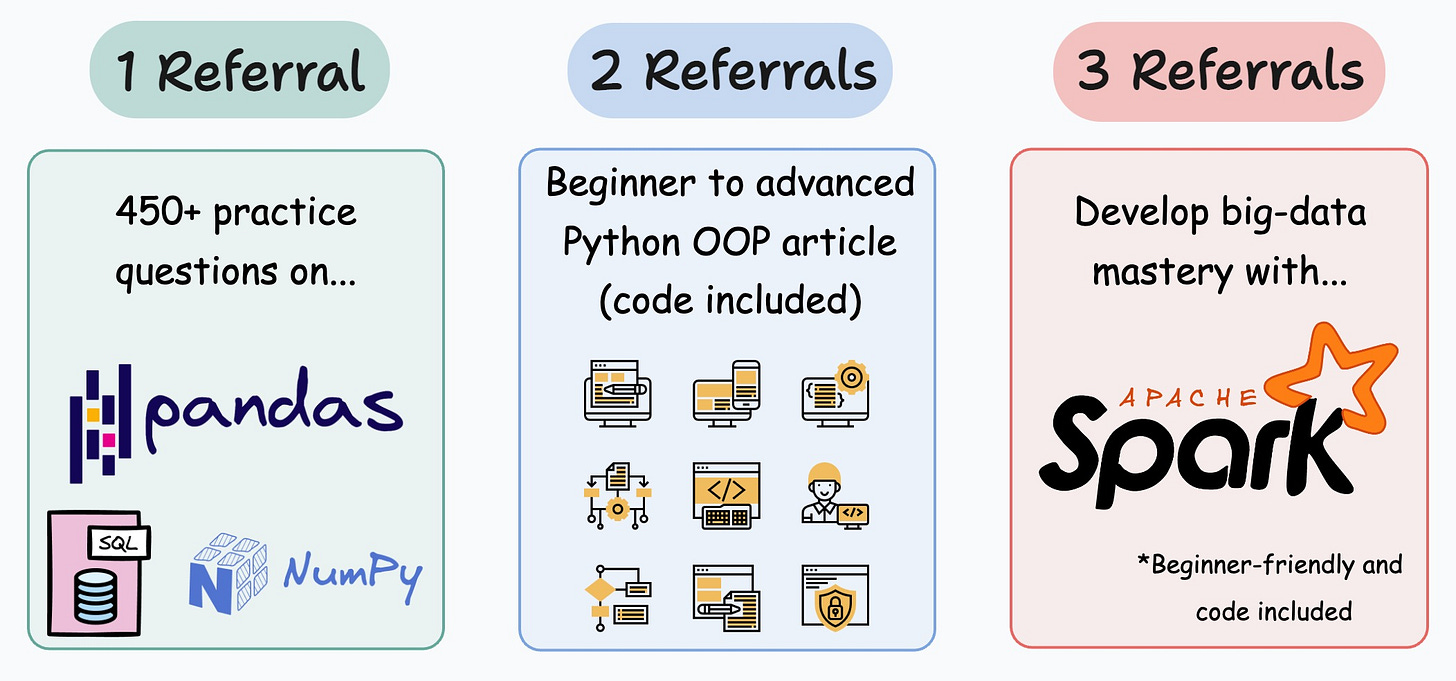


lovely

Thanks Avi! Nice explanation. Basically, if I am not mistaken -

- "Inference" is an example of Probability

- "Hypothesis Test" is an example of Likelihood.

Correct?