This GPU Accelerated tSNE Can Run Upto 700x Faster Than Sklearn

Addressing the limitations of Sklearn

Sklearn is the go-to library for all sorts of traditional machine learning (ML) tasks.

But one thing that I particularly dislike about Sklearn is that its implementations are primarily driven by NumPy, which ALWAYS runs on a single core of a CPU.

Another major limitation is that scikit-learn models cannot run on GPUs.

But from the discussion so far, it is pretty clear that this limitation provides massive room for run-time improvement.

The same applies to the tSNE algorithm, which is among the most powerful dimensionality reduction techniques to visualize high-dimensional datasets.

Because the biggest issue with tSNE (which we also discussed here) is that its run-time is quadratically related to the number of data points.

Thus, beyond, say, 10k-12k data points, it becomes pretty difficult to use tSNE from Sklearn implementations.

There are two ways to handle this:

Either keep waiting.

Or use optimized implementations that could be possibly accelerated with GPU.

Recently, I was stuck due to the same issue in one of my projects, and I found a pretty handy solution that I want to share with you today.

tSNE-CUDA is an optimized CUDA version of the tSNE algorithm, which, as the name suggests, can leverage hardware accelerators.

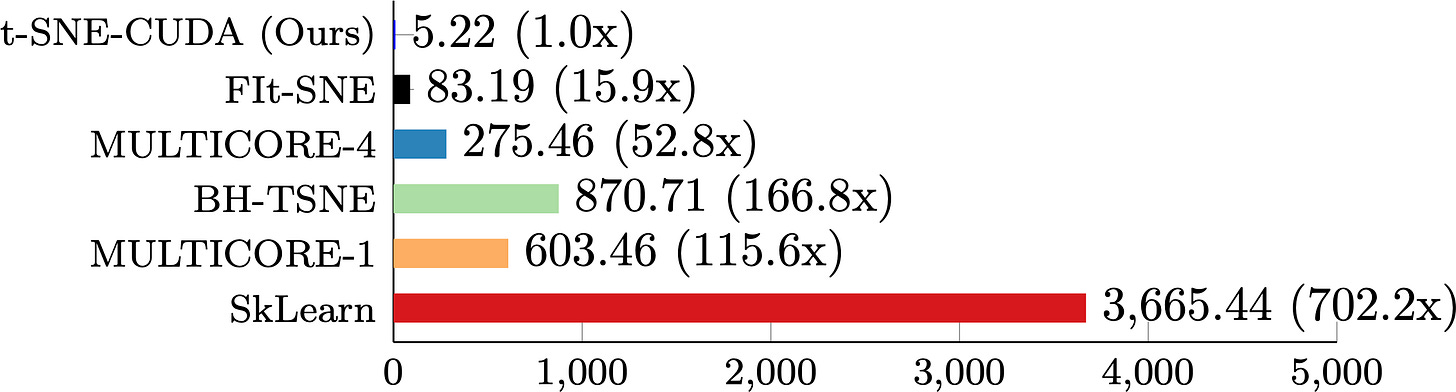

As a result, it provides immense speedups over the standard Sklearn implementation, which is evident from the image below:

As depicted above, the GPU-accelerated implementation:

Is 33 times faster than the Sklearn implementation.

Produces similar quality clustering as the Sklearn implementation.

Do note that this implementation only supports n_components=2, i.e., you can only project to two dimensions.

As per the docs, the authors have no plans to support more dimensions, as this will require significant changes to the code.

But in my opinion, that doesn’t matter because, for more than 99% of cases, tSNE is used to obtain 2D projections. So we are good here.

Before I conclude, I also found the following benchmarking results by the authors:

It depicts that on the CIFAR-10 training set (50k images), tSNE-CUDA is 700x Faster than Sklearn, which is an insane speedup.

Isn’t that cool?

I prepared this Colab notebook for you to get started: tSNE-CUDA Colab Notebook.

Further reading:

While this was just about tSNE, do you know we can accelerate other ML algorithms with GPUs? Read this article to learn more: Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Also, do you know how tSNE works end-to-end? Read this article to learn more: Formulating and Implementing the t-SNE Algorithm From Scratch

👉 Over to you: What are some other ways to boost the tSNE algorithm?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights.

The button is located towards the bottom of this email.

Thanks for reading!

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

👉 If you love reading this newsletter, feel free to share it with friends!

Hi Avi,

I am new to Data Science, I liked your Daily Dose Idea... Language is simple and too the point.

All words in this post is new to me... I come from JavaScript Development world.

Can you suggest some order of learning for me (or developers in general).

Does this fall under RAPIDS too??