What's Inside Python 3.13?

Some major and must-know updates to Python.

Earlier this week, I talked about the GIL in Python.

To recap, the GIL (Global Interpreter Lock) prevents more than ONE thread in a process from executing Python bytecode at a time, as depicted below:

In other words, while a process can have multiple threads, ONLY ONE of them can run Python code at any given moment.

Python 3.13 allows us to disable the GIL (experimentally), which means a multithreaded process can run Python code on all CPU cores in parallel.

Along with that, there are several other updates that I want to share today.

Let’s begin!

Improved interactive interpreter

To begin, suppose we have typed some multiline code into the interpreter:

Say you want to define x = i**2 instead. Before Python 3.13, you had to do this by recalling the code one line at a time:

With Python 3.13’s multiline editing, pressing the up arrow key once brings back the entire block:

You may also have noticed that the “>>>” and “…” prompts are now shown in color.

Error messages (tracebacks) are colored as well:

Moving on, just as the shell has a clear command, the Python 3.13 REPL now has one too:

The next update within the REPL is also cool (and something I had always felt was missing).

Before Python 3.13, pressing Enter after a function definition or any other block header did not indent the next line automatically:

Python 3.13 does that automatically:

Lastly, the REPL now also accepts quit and help as plain commands. Earlier, you had to call them as functions: quit() and help().

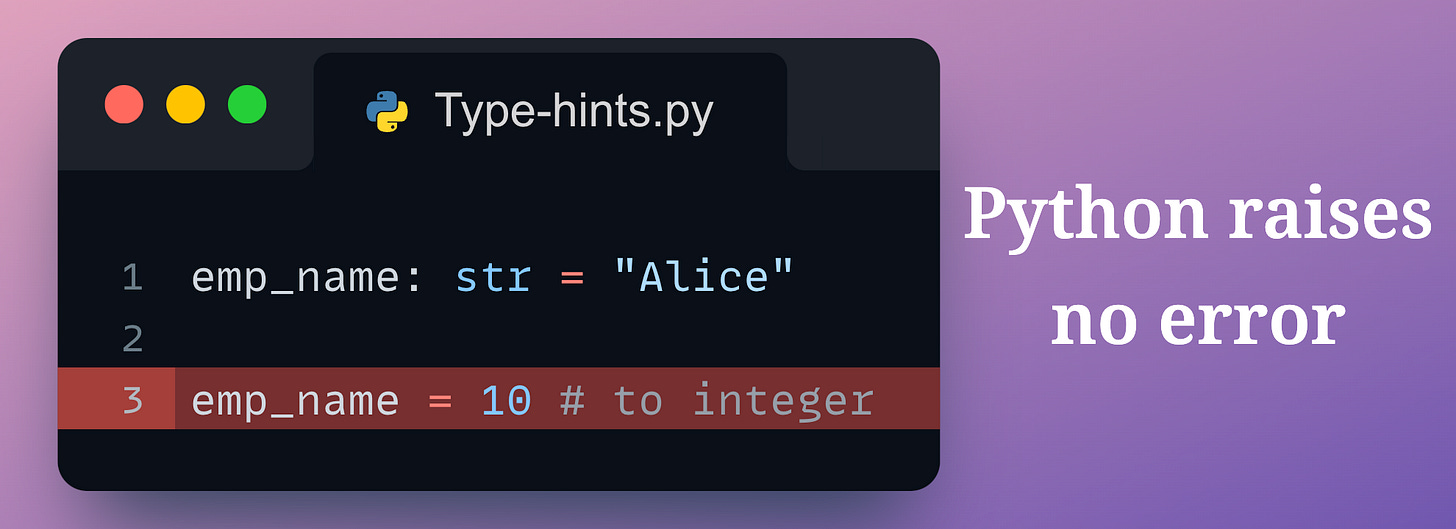

Type hints and annotations

Although Python does not enforce type hints at runtime, they are incredibly valuable for improving code quality and maintainability, and for telling other developers which data types to expect.
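A quick illustration of "not enforced at runtime", using a hypothetical add function: the annotations are stored as metadata and used by static type checkers, but the interpreter happily runs a call that violates them.

```python
def add(a: int, b: int) -> int:
    """Annotated to take and return ints; Python does not check this at runtime."""
    return a + b


print(add(2, 3))          # 5
print(add("2", "3"))      # 23 (string concatenation; a type checker would flag this call)
print(add.__annotations__)  # the hints are simply stored on the function object
```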

Here are some additions I found pretty useful:

1) ReadOnly

To make specific keys of a TypedDict read-only, you can use the ReadOnly type qualifier from the typing module. This is demonstrated below:

student_id is read-only, so updating it triggers an error from a static type checker (although the code does not raise any error when executed).

Do note that ReadOnly is only supported within a TypedDict.
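A minimal sketch of the kind of example described above; the Student TypedDict and its fields are hypothetical. On Python 3.13+ ReadOnly comes from typing; the fallback to the third-party typing_extensions package is only there for older interpreters. The read-only key is tracked in Student.__readonly_keys__, but nothing stops the assignment at runtime.

```python
try:
    from typing import ReadOnly, TypedDict  # Python 3.13+
except ImportError:  # older Pythons: backport from the third-party typing_extensions package
    from typing_extensions import ReadOnly, TypedDict


class Student(TypedDict):
    student_id: ReadOnly[int]  # type checkers reject assignments to this key
    name: str                  # regular, mutable key


student: Student = {"student_id": 101, "name": "Alice"}

student["name"] = "Alicia"   # fine for both the type checker and the runtime
student["student_id"] = 202  # flagged by a static type checker that supports ReadOnly,
                             # but runs without error: a TypedDict is a plain dict at runtime

print(student)                    # {'student_id': 202, 'name': 'Alicia'}
print(Student.__readonly_keys__)  # frozenset({'student_id'})
```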

2) Annotation for marking deprecations

If some part of your code (a function, a class, etc.) is going to be deprecated, it is good practice to mark it as such.

Python 3.13 provides a @warnings.deprecated decorator to mark objects as deprecated along with a message:
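A minimal sketch with a hypothetical old_mean helper. Static type checkers can flag calls to the decorated function, and at runtime each call emits a DeprecationWarning carrying the message. The typing_extensions fallback is only there for interpreters older than 3.13.

```python
import warnings

try:
    from warnings import deprecated  # Python 3.13+
except ImportError:  # older Pythons: backport from the third-party typing_extensions package
    from typing_extensions import deprecated


@deprecated("old_mean() is deprecated; use statistics.mean() instead.")
def old_mean(values: list[float]) -> float:
    """Hypothetical helper kept only for backward compatibility."""
    return sum(values) / len(values)


# The function still works, but calling it emits a DeprecationWarning at runtime.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")  # make sure the warning is not filtered out
    result = old_mean([1.0, 2.0, 3.0])

print(result)                       # 2.0
print(caught[0].category.__name__)  # DeprecationWarning
print(caught[0].message)
```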

Note: There’s one more update, TypeIs, which is used for type narrowing. I feel it deserves a separate issue to explain why it is special, so I will cover it pretty soon.

That said, if you are new to type hints, we have already covered them in an earlier issue of this newsletter.

Disable GIL

As discussed earlier (and in a previous issue), Python 3.13 allows us to disable the GIL.

Let me show you the performance improvement using a simple demo.

First, we start with some imports and define a long-running, CPU-bound function:

Next, we have some single-threaded and multi-threaded code:
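The original function and timings are not reproduced here, so below is a minimal sketch of such a benchmark under stated assumptions: cpu_bound_task, run_single_threaded, and run_multi_threaded are hypothetical names, and the workload is a pure-Python loop (no I/O), which is exactly the kind of code the GIL serializes.

```python
import threading
import time


def cpu_bound_task(n: int) -> int:
    """Hypothetical long-running, pure-Python function (no I/O, so the GIL matters)."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def run_single_threaded(n: int, num_tasks: int) -> float:
    """Run the task num_tasks times, one after another; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(num_tasks):
        cpu_bound_task(n)
    return time.perf_counter() - start


def run_multi_threaded(n: int, num_tasks: int) -> float:
    """Run the same tasks in num_tasks threads at once; return elapsed seconds."""
    start = time.perf_counter()
    threads = [threading.Thread(target=cpu_bound_task, args=(n,)) for _ in range(num_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish before stopping the clock
    return time.perf_counter() - start


if __name__ == "__main__":
    N, TASKS = 2_000_000, 4  # increase N for a more pronounced difference
    print(f"Single-threaded: {run_single_threaded(N, TASKS):.2f}s")
    print(f"Multi-threaded:  {run_multi_threaded(N, TASKS):.2f}s")
```

Saved as, say, bench.py (a hypothetical file name), you would run it once with python3.13 and once with python3.13t. On the standard build, you should expect the two timings to be roughly equal; on the free-threaded build, with several cores available, the multi-threaded run should finish noticeably sooner.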

Now, if we run this with the standard Python 3.13 interpreter, we get:

The run-time is about the same for both versions because the GIL is still enabled in this build.

The reason is that the GIL can only be disabled in the experimental free-threaded build of Python 3.13, which ships as a separate executable named python3.13t. This executable is a special version of Python that runs without the GIL.

Thus, we need to run the same code with python3.13t instead:

This time, the multi-threaded version runs faster.
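Since both executables can be installed side by side, it helps to confirm which one you are actually running. A minimal sketch: the Py_GIL_DISABLED config variable reports whether the interpreter is a free-threaded build, and sys._is_gil_enabled() (new and underscore-prefixed in 3.13) reports whether the GIL is active right now. The two can differ, because a free-threaded build can re-enable the GIL at runtime, for example via the PYTHON_GIL=1 environment variable or when importing an extension module that does not declare free-threading support.

```python
import sys
import sysconfig

# 1 on a free-threaded (python3.13t) build; 0 or None on a standard build.
free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))

# sys._is_gil_enabled() only exists on 3.13+; older versions always have the GIL.
gil_enabled = sys._is_gil_enabled() if hasattr(sys, "_is_gil_enabled") else True

print(f"Python {sys.version.split()[0]}")
print(f"Free-threaded build: {free_threaded_build}")
print(f"GIL enabled at runtime: {gil_enabled}")
```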

I am testing this much more extensively right now, and I will share that with you in the coming week.

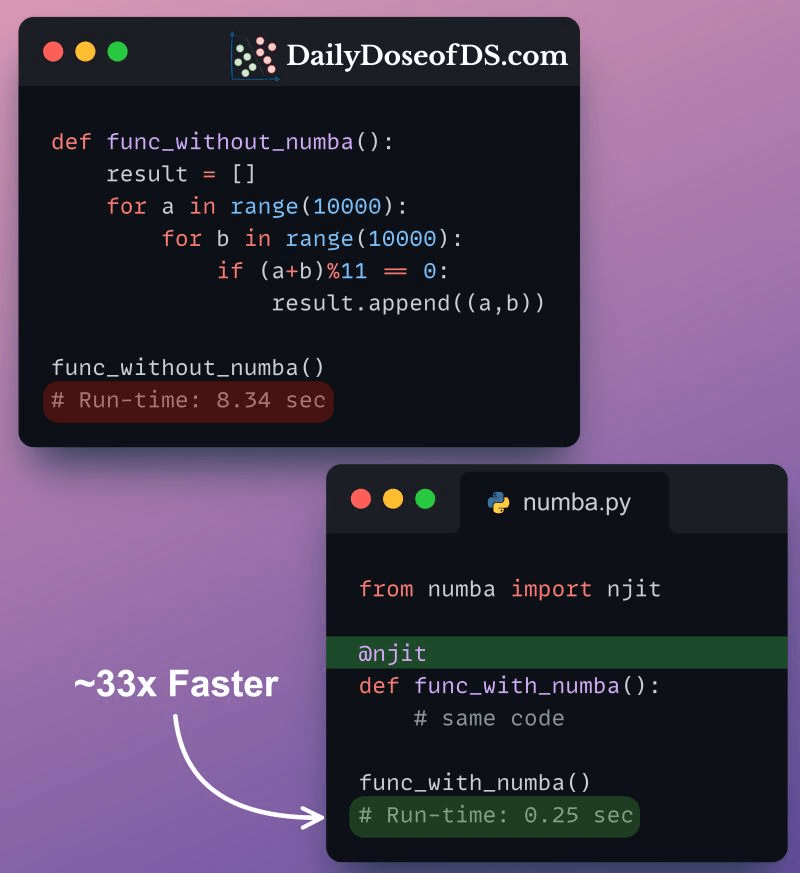

JIT

Python’s default interpreter, CPython, executes your code by interpreting bytecode and offers comparatively little built-in optimization.

This significantly affects run-time performance, especially when a program is written entirely in pure Python rather than calling into compiled extensions.

One solution is to use a Just-In-Time (JIT) compiler, which takes your existing Python code and generates fast machine code at run-time. Thus, after compilation, the compiled parts of your code run as native machine code instead of being interpreted.

JIT compilation isn’t anything new, of course. External tools like Numba already provide it:
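A minimal sketch of what that looks like with Numba’s njit decorator; sum_of_squares is a hypothetical example function. Numba is a third-party package, so the snippet falls back to a no-op decorator (plain, uncompiled Python) when it is not installed, just so it still runs.

```python
import time

try:
    from numba import njit  # third-party: pip install numba
except ImportError:
    # Fallback so the sketch still runs without Numba (no JIT, plain Python speed).
    def njit(func):
        return func


@njit
def sum_of_squares(n):
    """Pure-Python loop that Numba compiles to machine code on first call."""
    total = 0
    for i in range(n):
        total += i * i
    return total


sum_of_squares(10)  # first call triggers compilation when Numba is installed

start = time.perf_counter()
# n is kept small enough that the result fits in the 64-bit integers Numba uses.
result = sum_of_squares(1_000_000)
print(result, f"({time.perf_counter() - start:.4f}s)")
```

Note that the first call pays the compilation cost, which is why the timing is taken on a later call.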

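However, Python 3.13 now ships an experimental JIT compiler built into CPython itself.

That said, it is still in a preliminary, experimental phase: it is disabled by default (CPython has to be built with the --enable-experimental-jit configure option to use it), and it is expected to improve in upcoming versions.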

While there are many more upgrades, I think the ones covered above are some of the most relevant.

👉 Over to you: Did I miss something important?



Keep prying.