Today, we are releasing another MCP demo: an MCP-driven voice Agent that queries a database and falls back to web search when needed.

Here’s the tech stack:

AssemblyAI for Speech‐to‐Text.

Firecrawl for web search.

Supabase for a database.

LiveKit for orchestration.

Qwen3 as the LLM.

Let’s go!

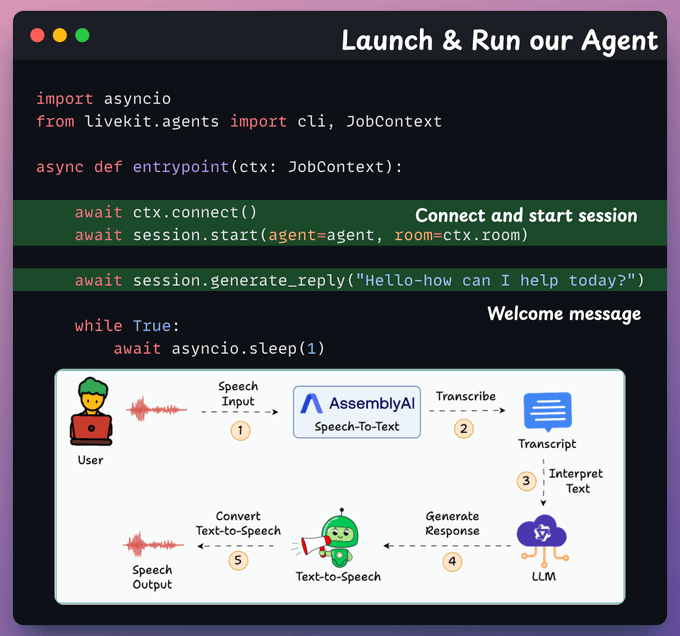

Here's our workflow:

The user's speech query is transcribed to text with AssemblyAI.

The Agent discovers the database and web tools.

The LLM invokes the right tool, fetches the data, and generates a response.

The app delivers the response via text-to-speech.

Let’s implement this!

Initialize Firecrawl & Supabase

We instantiate Firecrawl to enable web searches and start our MCP server to expose Supabase tools to our Agent.
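Here is a minimal sketch of the setup step, assuming the credentials come from environment variables. The variable names `FIRECRAWL_API_KEY` and `SUPABASE_ACCESS_TOKEN` and the `Settings` container are illustrative assumptions, not names confirmed by the demo's code. The idea is to fail early with a clear message before any client or MCP server is started.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the secrets both services need."""
    firecrawl_api_key: str
    supabase_access_token: str


def load_settings(env=None) -> Settings:
    """Read the required secrets and raise if any of them is missing."""
    env = os.environ if env is None else env
    # Assumed variable names; adjust them to match your own deployment.
    required = ("FIRECRAWL_API_KEY", "SUPABASE_ACCESS_TOKEN")
    missing = [key for key in required if not env.get(key)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return Settings(
        firecrawl_api_key=env["FIRECRAWL_API_KEY"],
        supabase_access_token=env["SUPABASE_ACCESS_TOKEN"],
    )
```

Passing a plain dict as `env` makes the function easy to exercise without touching the real environment.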

Define web search tool

We fetch live web search results using Firecrawl's search endpoint. This gives our Agent up-to-date online information.
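The shape of such a tool can be sketched as follows. The `SearchClient` interface, the result keys (`title`, `description`, `url`), and the `FakeSearchClient` stand-in are assumptions made so the example runs offline; the real Firecrawl SDK call and its response format should be taken from Firecrawl's documentation.

```python
from typing import Protocol


class SearchClient(Protocol):
    """Hypothetical interface; the real Firecrawl client may differ."""

    def search(self, query: str, limit: int) -> list[dict]: ...


def search_web(client: SearchClient, query: str, limit: int = 3) -> str:
    """Return search hits as plain text that the LLM can summarize aloud."""
    try:
        hits = client.search(query, limit)
    except Exception as exc:
        # Report the failure as text so the voice session keeps running.
        return f"Web search failed: {exc}"
    if not hits:
        return "No web results found."
    lines = [
        f"{i}. {hit.get('title', 'Untitled')}: {hit.get('description', '')} ({hit.get('url', '')})"
        for i, hit in enumerate(hits, start=1)
    ]
    return "\n".join(lines)


class FakeSearchClient:
    """Offline stand-in that returns one canned result."""

    def search(self, query: str, limit: int) -> list[dict]:
        canned = [
            {
                "title": "MCP docs",
                "description": "Protocol overview",
                "url": "https://example.com",
            }
        ]
        return canned[:limit]
```

Returning a string instead of raising keeps the tool friendly to an LLM, which can tell the user that the search failed rather than going silent.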

Get Supabase MCP Tools

We list our Supabase tools via the MCP server and wrap each of them as LiveKit tools for our Agent.
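The wrapping step can be sketched without any framework. An MCP server describes each tool with a name, a description, and a JSON input schema, so a wrapper only needs to forward arguments to the right tool. Everything named here (`ToolSpec`, `wrap_tool`, `fake_call_tool`) is a hypothetical illustration, and the LiveKit-specific registration is left out.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Awaitable, Callable


@dataclass
class ToolSpec:
    """Mirrors the fields an MCP server reports for each tool."""
    name: str
    description: str
    input_schema: dict


# Assumed call signature: (tool_name, arguments) -> awaitable result.
CallTool = Callable[[str, dict], Awaitable[Any]]


def wrap_tool(spec: ToolSpec, call_tool: CallTool) -> Callable[..., Awaitable[Any]]:
    """Build an async function that forwards keyword arguments to one MCP tool."""
    required = spec.input_schema.get("required", [])

    async def tool(**kwargs: Any) -> Any:
        missing = [name for name in required if name not in kwargs]
        if missing:
            raise ValueError(f"{spec.name}: missing arguments {missing}")
        return await call_tool(spec.name, kwargs)

    # Keep the metadata so the LLM sees a meaningful name and description.
    tool.__name__ = spec.name
    tool.__doc__ = spec.description
    return tool


async def fake_call_tool(name: str, arguments: dict) -> Any:
    """Offline stand-in for an MCP session; echoes the request it received."""
    return {"tool": name, "arguments": arguments}


if __name__ == "__main__":
    # "list_rows" is a made-up tool name used only for this demonstration.
    spec = ToolSpec("list_rows", "List rows in a table", {"required": ["table"]})
    list_rows = wrap_tool(spec, fake_call_tool)
    print(asyncio.run(list_rows(table="orders")))
```

Checking the schema's `required` list before the call gives the LLM a readable error to recover from, instead of an opaque server-side failure.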

Build the Agent

We set up our Agent with instructions on how to handle user queries.

We also give it access to the Firecrawl web search and Supabase tools defined earlier.

Configure Speech-to-Response flow

We transcribe user speech with AssemblyAI Speech-to-Text.

The Qwen3 LLM, served locally with Ollama, invokes the right tool.

A voice output is generated via TTS.
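The three stages above can be pictured as a small pipeline. This is a framework-free sketch, not the LiveKit session configuration: `VoicePipeline` and the `fake_*` functions are hypothetical stand-ins for AssemblyAI, Qwen3, and the TTS engine.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains speech-to-text, the LLM, and text-to-speech for one turn."""
    stt: Callable[[bytes], str]
    llm: Callable[[str], str]
    tts: Callable[[str], bytes]

    def respond(self, audio_in: bytes) -> bytes:
        text = self.stt(audio_in)
        if not text.strip():
            # Nothing was transcribed, so ask the user to repeat.
            return self.tts("Sorry, I didn't catch that.")
        return self.tts(self.llm(text))


# Stand-ins that treat audio as UTF-8 text so the sketch runs offline.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(stt=fake_stt, llm=fake_llm, tts=fake_tts)
```

Keeping each stage behind a plain callable means any one provider can be swapped without touching the other two.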

Launch the Agent

We connect to LiveKit, start our session with a greeting, then continuously listen and respond until the user stops.
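The greet-then-listen loop can be sketched with plain `asyncio`. The stop phrases, the greeting text, and the `scripted_mic` helper are assumptions for illustration; in the real app, LiveKit manages the connection and the audio stream.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable

STOP_PHRASES = {"stop", "goodbye"}  # assumed; the demo may end sessions differently


async def run_session(
    utterances: AsyncIterator[str],
    respond: Callable[[str], str],
    say: Callable[[str], Awaitable[None]],
    greeting: str = "Hi! How can I help?",
) -> None:
    """Greet the user, then answer each utterance until a stop phrase arrives."""
    await say(greeting)
    async for text in utterances:
        if text.strip().lower() in STOP_PHRASES:
            await say("Goodbye!")
            break
        await say(respond(text))


async def scripted_mic(lines: list[str]) -> AsyncIterator[str]:
    """Stand-in for live transcription: yields pre-written utterances."""
    for line in lines:
        yield line


if __name__ == "__main__":
    async def say(text: str) -> None:
        print(text)

    asyncio.run(run_session(scripted_mic(["hi", "stop"]), lambda t: f"Echo: {t}", say))
```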

Done! Our MCP-powered Voice Agent is ready.

If the query relates to data in the database, the Agent queries Supabase via MCP tools.

Otherwise, it performs a web search via Firecrawl.
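To make the routing concrete, here is a deterministic sketch of the database-first, web-fallback policy. In the actual Agent the LLM makes this choice from its instructions, so this is only an illustration of the intended behavior; `answer`, `fake_db`, and `fake_web` are hypothetical names.

```python
from typing import Callable, Optional


def answer(
    query: str,
    query_db: Callable[[str], Optional[str]],
    search_web: Callable[[str], str],
) -> tuple[str, str]:
    """Return (source, answer): database first, web search as the fallback."""
    try:
        result = query_db(query)
    except Exception:
        result = None  # treat a failed lookup like an empty one
    if result:
        return "database", result
    return "web", search_web(query)


# Stand-ins so the sketch runs without Supabase or Firecrawl.
def fake_db(query: str) -> Optional[str]:
    table = {"order 42 status": "shipped"}
    return table.get(query.lower())


def fake_web(query: str) -> str:
    return f"Top web result for: {query}"
```

Returning the source alongside the answer makes it easy to log which path handled each query.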

You can find the code in this GitHub repo →

Thanks for reading!