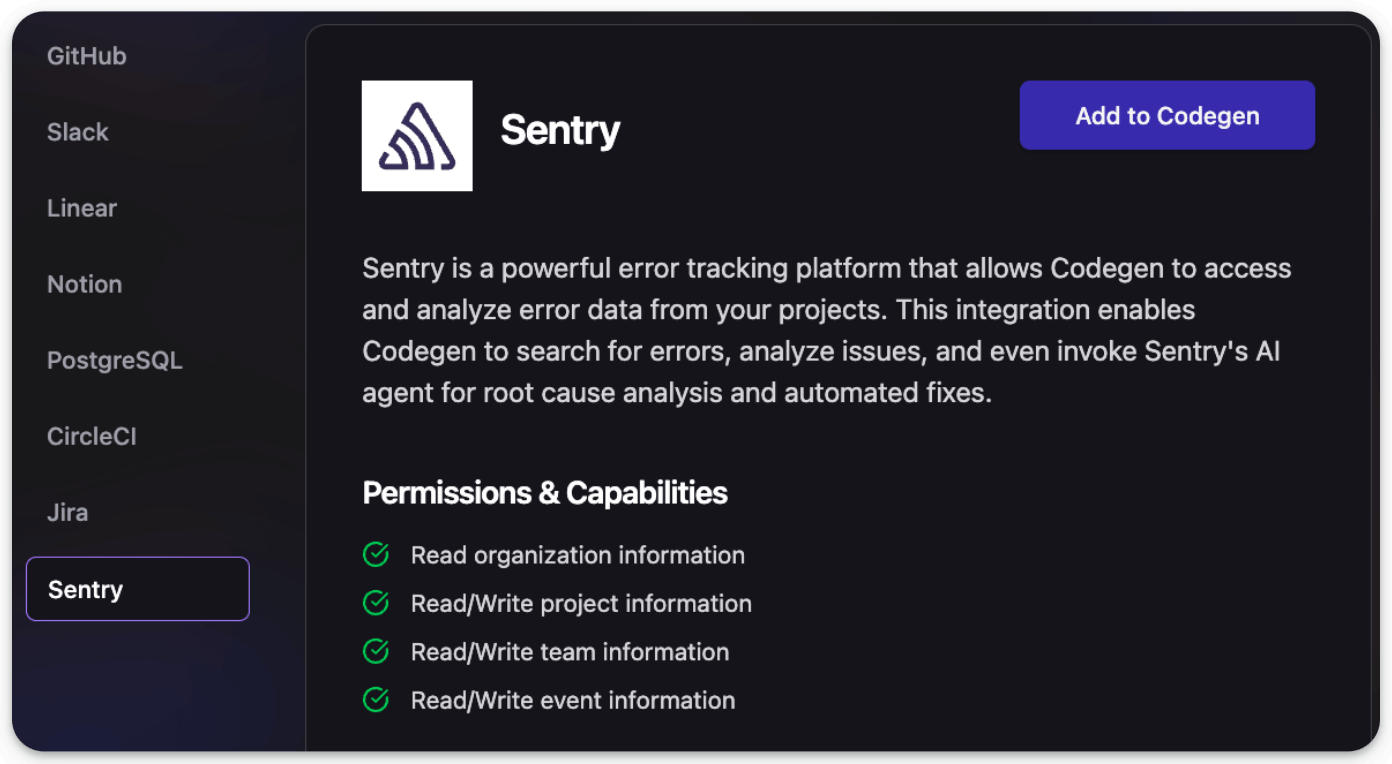

Solve Sentry errors with Codegen coding Agents

Sentry is a powerful application monitoring platform.

Codegen now connects to Sentry to access & analyze error data from projects.

This integration enables it to search for errors, analyze issues, and even invoke coding agents for root cause analysis and automated fixes.

Thanks to Codegen for partnering today!

Build an Ultimate AI Assistant using 6 MCP servers

We have tested 100+ MCP servers over the last 3-4 months!

Today, let’s use the best 6 to build an ultimate AI assistant.

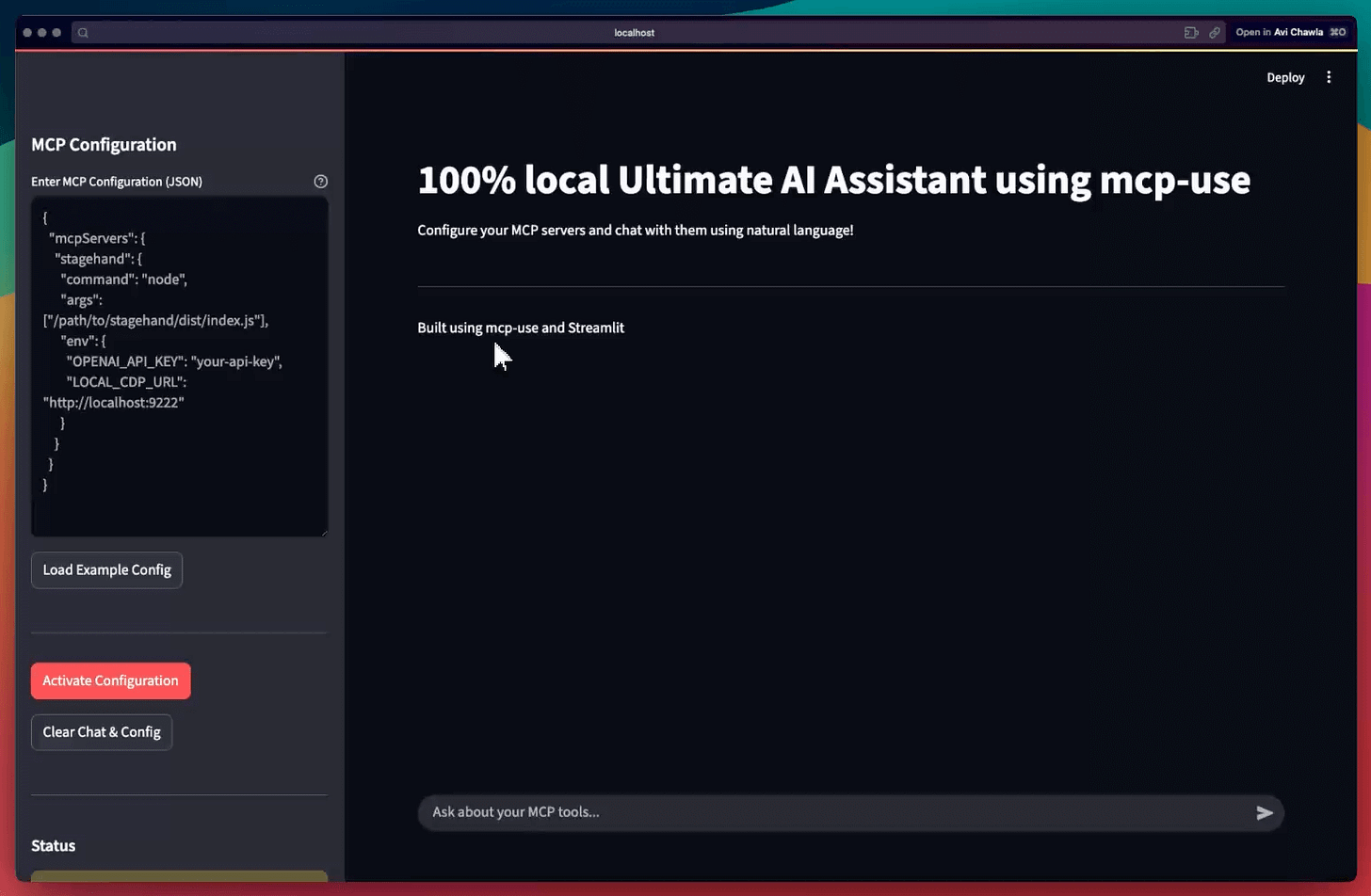

It will be powered by a fully local MCP client.

Tech stack:

mcp-use to connect the LLM to MCP servers

Stagehand MCP for browser access

Firecrawl MCP for scraping

Ragie MCP for multimodal RAG

Graphiti MCP as memory

Terminal & GitIngest MCP

Let's dive in!

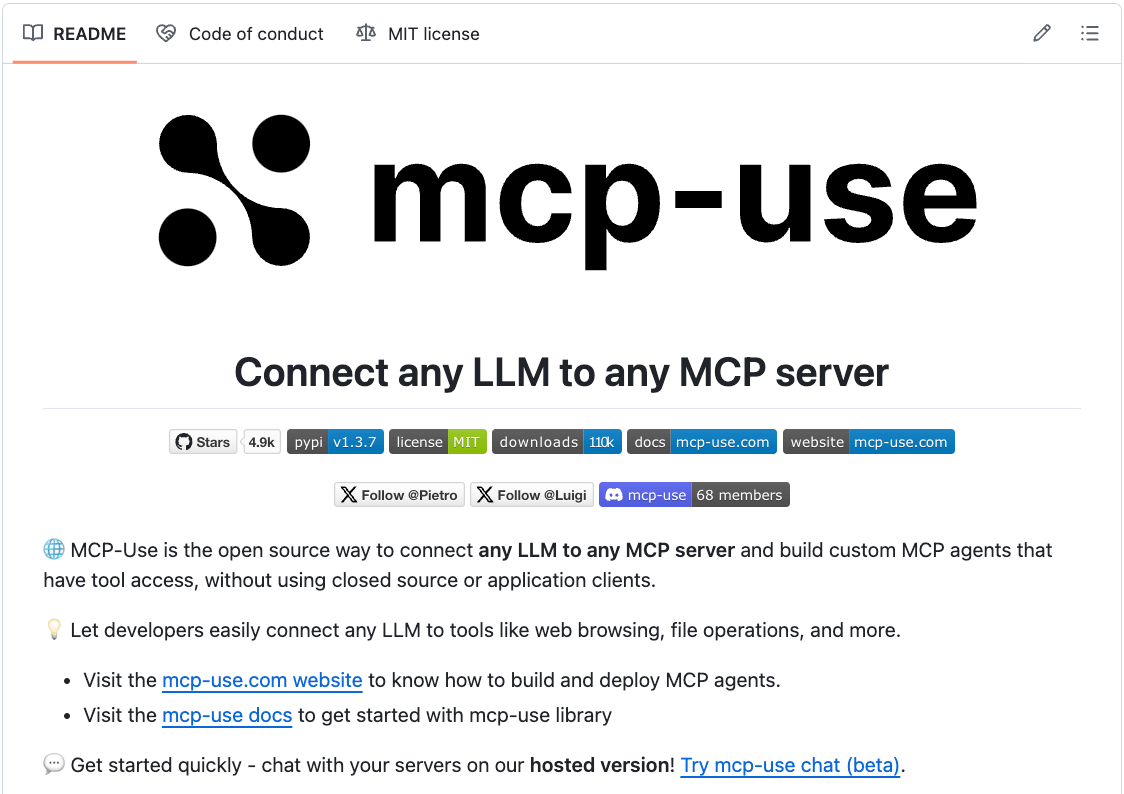

0) mcp-use

mcp-use is an open-source framework that lets you connect any LLM to any MCP server and build custom MCP Agents in 3 simple steps:

Define the MCP server config.

Build an Agent using the LLM & MCP client.

Invoke the Agent.
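Here is a minimal sketch of those three steps with mcp-use. It assumes a local Ollama model accessed through LangChain's `langchain_ollama` package. The server entry named `example` and its package placeholder are hypothetical, and the model name `qwen2.5` is an assumption. The mcp-use and LangChain imports sit inside `ask`, so the config part loads even when those packages are not installed.

```python
# Step 1: define the MCP server config in the usual "mcpServers" JSON shape.
# The "example" server and its package name are hypothetical placeholders.
def make_config() -> dict:
    return {
        "mcpServers": {
            "example": {
                "command": "npx",
                "args": ["-y", "<your-mcp-server-package>"],
            }
        }
    }


async def ask(query: str, config: dict) -> str:
    # Imported lazily so make_config() works without these packages installed.
    from langchain_ollama import ChatOllama
    from mcp_use import MCPAgent, MCPClient

    # Step 2: build the Agent from a local LLM and an MCP client.
    client = MCPClient.from_dict(config)
    llm = ChatOllama(model="qwen2.5")  # assumed model; it must support tool calling
    agent = MCPAgent(llm=llm, client=client, max_steps=30)

    # Step 3: invoke the Agent and return its final answer.
    return await agent.run(query)
```

After you replace the placeholder with a real server, run it with `asyncio.run(ask("What is the weather in London today?", make_config()))`.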

1) Stagehand MCP server

We begin by allowing our Agent to control a browser, navigate web pages, take screenshots, etc., using Stagehand MCP.

Below, we asked a weather-related question, and the Agent answered it autonomously by starting a browser session.
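The Stagehand server can be added as a single entry in that config. This sketch assumes a local build of Browserbase's Stagehand MCP server, launched with `node`. The build path is a placeholder. The two Browserbase credential variables are assumed to be the ones the server reads, so check its README, since it may also need an LLM API key.

```python
import os


def stagehand_entry(build_path: str) -> dict:
    """Config entry for a locally built Stagehand MCP server (assumed layout)."""
    return {
        "command": "node",
        "args": [build_path],
        "env": {
            # Browserbase credentials, taken from the environment when set.
            "BROWSERBASE_API_KEY": os.environ.get("BROWSERBASE_API_KEY", ""),
            "BROWSERBASE_PROJECT_ID": os.environ.get("BROWSERBASE_PROJECT_ID", ""),
        },
    }


# Placeholder path to the compiled server script.
config = {"mcpServers": {"stagehand": stagehand_entry("/path/to/stagehand/dist/index.js")}}
```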

2) Firecrawl MCP server

Next, we add scraping, crawling & deep research capabilities to the Agent.

mcp-use supports connecting to multiple MCP servers simultaneously, so we add the Firecrawl MCP config alongside the existing one and interact with it.
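Adding a server comes down to adding one more key under `mcpServers`. In the sketch below, `add_server` is a hypothetical helper that returns a merged copy and leaves the original config unchanged. The Stagehand entry is a placeholder. The Firecrawl entry follows the `npx -y firecrawl-mcp` setup with a `FIRECRAWL_API_KEY` variable, but treat it as an assumption and check it against the server's README.

```python
import copy


def add_server(config: dict, name: str, entry: dict) -> dict:
    """Return a copy of an mcpServers config with one more server added."""
    merged = copy.deepcopy(config)
    servers = merged.setdefault("mcpServers", {})
    if name in servers:
        raise ValueError(f"server {name!r} is already configured")
    servers[name] = entry
    return merged


# Existing config (placeholder Stagehand entry) plus an assumed Firecrawl entry.
base = {"mcpServers": {"stagehand": {"command": "node", "args": ["/path/to/stagehand/dist/index.js"]}}}
firecrawl = {
    "command": "npx",
    "args": ["-y", "firecrawl-mcp"],
    "env": {"FIRECRAWL_API_KEY": "YOUR-API-KEY"},
}
config = add_server(base, "firecrawl-mcp", firecrawl)
```

Passing the merged dict to `MCPClient.from_dict` exposes the tools from both servers to the same Agent.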

3) Graphiti MCP server

So far, our Agent is memoryless. It forgets everything after each task.

The Graphiti MCP server lets it build & query temporally-aware knowledge graphs that act as its memory.

Below, we gave it some dev info, which then shows up in the Neo4j DB that stores the graph.
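The Graphiti MCP server usually runs as its own long-lived process, backed by Neo4j. That means the client connects to it over HTTP/SSE with a `url` entry rather than launching it with a `command`. This sketch assumes the server listens at `http://localhost:8000/sse`, so adjust the address to match how you start it. The `sse_entry` helper is hypothetical.

```python
from urllib.parse import urlparse


def sse_entry(url: str) -> dict:
    """Config entry for an MCP server that is already running over HTTP/SSE."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an HTTP(S) URL: {url!r}")
    return {"url": url}


# Assumed local address of a Graphiti MCP server started with SSE transport.
config = {"mcpServers": {"graphiti": sse_entry("http://localhost:8000/sse")}}
```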

4) Ragie MCP server

Next, we give the Agent multimodal RAG capabilities over a complex knowledge base of text, images, videos, audio, docs, etc.

Below, we asked it to list the projects in our MCP PDF (a complex doc), and it responded perfectly.
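A sketch of the Ragie entry is shown below. It assumes the server is the `@ragieai/mcp-server` npm package, that it reads `RAGIE_API_KEY`, and that it accepts an optional `--partition` flag to scope retrieval. All three details are assumptions to verify against Ragie's docs. The partition name `docs` in the usage is hypothetical.

```python
import os
from typing import Optional


def ragie_entry(partition: Optional[str] = None) -> dict:
    """Config entry for Ragie's MCP server (package name and flag are assumptions)."""
    args = ["-y", "@ragieai/mcp-server"]
    if partition:
        # Optionally scope retrieval to a single Ragie partition.
        args += ["--partition", partition]
    return {
        "command": "npx",
        "args": args,
        "env": {"RAGIE_API_KEY": os.environ.get("RAGIE_API_KEY", "")},
    }


config = {"mcpServers": {"ragie": ragie_entry()}}
```

To restrict answers to one partition, use `ragie_entry("docs")`.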

5) GitIngest MCP server

Next, to address developer needs, we allow our Agent to chat with any GitHub repo.

Below, we shared the repo link of our book writer flow and asked about its tech stack. The Agent extracted the right info using the MCP server.
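The sketch below assumes a community GitIngest MCP server run with `uvx` straight from its Git repo. That launch command is an assumption, so check it before use. Because the Agent works from the repo link you give it, `repo_question` (a hypothetical helper) validates that the link is a GitHub repo URL before placing it in the prompt.

```python
import re

# Assumed launch command for a community GitIngest MCP server run via uvx.
GITINGEST_ENTRY = {
    "command": "uvx",
    "args": ["--from", "git+https://github.com/adhikasp/mcp-git-ingest", "mcp-git-ingest"],
}

# Accepts https://github.com/<owner>/<repo>, optionally ending in ".git" or "/".
GITHUB_REPO = re.compile(r"^https://github\.com/([\w.-]+)/([\w.-]+?)(?:\.git)?/?$")


def repo_question(repo_url: str, question: str) -> str:
    """Build a prompt that hands the Agent a validated GitHub repo link."""
    match = GITHUB_REPO.match(repo_url)
    if match is None:
        raise ValueError(f"not a GitHub repo URL: {repo_url!r}")
    owner, name = match.groups()
    return f"For the GitHub repo {owner}/{name} ({repo_url}): {question}"


config = {"mcpServers": {"gitingest": GITINGEST_ENTRY}}
```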

6) Terminal MCP server

Finally, we give our Agent terminal control to execute commands for the developer if needed.

It provides tools like:

read/write/search/move files

execute a command

create/list directory, etc.
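The post does not name the exact terminal server. This sketch assumes a terminal-control MCP server launched through `uvx`, and the package name `terminal_controller` is also an assumption. Because shell access is powerful, `with_terminal` (a hypothetical helper) adds the terminal server only when it is needed and removes it otherwise.

```python
import copy

# Assumed launch command for a terminal-control MCP server (package name is an assumption).
TERMINAL_ENTRY = {"command": "uvx", "args": ["terminal_controller"]}


def with_terminal(config: dict, enabled: bool) -> dict:
    """Return a copy of config that includes the terminal server only when enabled."""
    result = copy.deepcopy(config)
    servers = result.setdefault("mcpServers", {})
    if enabled:
        servers["terminal"] = copy.deepcopy(TERMINAL_ENTRY)
    else:
        servers.pop("terminal", None)
    return result
```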

Lastly, we wrap this in a Streamlit interface, where we can dynamically change the MCP config.
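One way to make the config editable at runtime is to keep it as JSON text in a sidebar text area and validate it before the client is rebuilt. Here is a sketch under that assumption. `parse_mcp_config` and `config_editor` are hypothetical names. The check requires each server to have either a launched `command` or a remote `url`, which are the two common entry styles.

```python
import json


def parse_mcp_config(text: str) -> dict:
    """Parse and sanity-check an MCP config edited as JSON text in the UI."""
    config = json.loads(text)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict) or not servers:
        raise ValueError('config needs a non-empty "mcpServers" object')
    for name, entry in servers.items():
        if not isinstance(entry, dict) or not ("command" in entry or "url" in entry):
            raise ValueError(f"server {name!r} needs a 'command' or a 'url'")
    return config


def config_editor(default: dict):
    # Lazy import: only needed when the app runs under `streamlit run`.
    import streamlit as st

    text = st.sidebar.text_area("MCP config (JSON)", json.dumps(default, indent=2), height=300)
    try:
        return parse_mcp_config(text)
    except ValueError as err:
        st.sidebar.error(f"Invalid config: {err}")
        return None
```

When `config_editor` returns a valid dict, the app can pass it to `MCPClient.from_dict` to rebuild the Agent with the new set of servers.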

This gives us an ultimate AI assistant, run through a 100% local MCP client, that can browse, scrape, remember, retrieve from a multimodal knowledge base, and much more.

We used mcp-use since it is the easiest way to connect LLMs to MCP servers & build local MCP clients.

Compatible with Ollama & LangChain

Stream the Agent's output asynchronously

Built-in debugging mode

Restrict MCP tools
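Two of these features correspond to `MCPAgent` keyword arguments in mcp-use: `disallowed_tools` restricts which MCP tools the Agent may call, and `verbose=True` enables detailed logging. The sketch below assumes a local Ollama model, with `qwen2.5` as an assumed name. It uses `execute_command` as a hypothetical tool name to block, so check the tool names your servers actually expose. Streaming is not covered here.

```python
from typing import Iterable, Optional


def agent_options(debug: bool = False, blocked_tools: Optional[Iterable[str]] = None) -> dict:
    """Keyword arguments for MCPAgent: step limit, logging, and tool restrictions."""
    return {
        "max_steps": 30,
        "verbose": debug,  # detailed agent logging when True
        "disallowed_tools": list(blocked_tools or []),  # tools the Agent may not call
    }


def build_agent(config: dict, model: str = "qwen2.5", debug: bool = False,
                blocked_tools: Optional[Iterable[str]] = None):
    # Lazy imports so agent_options() works without these packages installed.
    from langchain_ollama import ChatOllama
    from mcp_use import MCPAgent, MCPClient

    client = MCPClient.from_dict(config)
    llm = ChatOllama(model=model)  # local model served by Ollama
    return MCPAgent(llm=llm, client=client, **agent_options(debug, blocked_tools))
```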

GitHub Repo: https://github.com/mcp-use/mcp-use (don't forget to star)

Find the code for today’s build here →

Thanks for reading!