Vanilla RAG works as long as your external docs look like the image on the left, but real-world documents look more like the image on the right:

Today, let’s build an enterprise-grade agentic RAG over such complex real-world docs.

Here’s our tech stack:

- CrewAI for agent orchestration.
- EyeLevel’s GroundX for SOTA document parsing.

We’ll do RAG over the flight manual of the MiG-29 (a fighter aircraft), which includes complex figures, diagrams, and more.

This diagram illustrates the key components & how they interact with each other!
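Stripped of the agent machinery, the flow is two calls in sequence: a retriever turns the query into context, and a generator turns the query plus that context into an answer. The sketch below shows only this data flow in plain Python; `retrieve` and `generate` are hypothetical stubs standing in for the GroundX-backed retriever agent and the LLM-backed response agent, not code from the demo.

```python
from typing import Callable

# Hypothetical stand-ins for the two agents in the diagram.
Retriever = Callable[[str], str]       # query -> context
Generator = Callable[[str, str], str]  # (query, context) -> answer


def answer(query: str, retrieve: Retriever, generate: Generator) -> str:
    """Two-stage agentic RAG flow: retrieve context first, then generate."""
    context = retrieve(query)
    return generate(query, context)


def stub_retrieve(query: str) -> str:
    # A real retriever would search the parsed flight manual.
    return f"[context for: {query}]"


def stub_generate(query: str, context: str) -> str:
    # A real generator would prompt the LLM with the query and context.
    return f"Answer to '{query}' using {context}"


if __name__ == "__main__":
    print(answer("How is the canopy opened?", stub_retrieve, stub_generate))
```

Keeping the two stages behind plain function types makes it easy to swap either one (a different retriever, a different LLM) without touching the other.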

Let’s build it!

Set up the LLM

CrewAI integrates nicely with the most popular LLMs and providers!

Here's how we set up a local DeepSeek-R1:7B:
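As a hedged sketch, assume the model is served locally by Ollama under the tag `deepseek-r1:7b` on Ollama's default port 11434 (an assumption; the post doesn't say how the model is served). CrewAI's `LLM` class can then be pointed at it with something like `LLM(model="ollama/deepseek-r1:7b", base_url="http://localhost:11434")`, and the calls ultimately go to Ollama's HTTP API. The stdlib-only snippet below builds (but does not send) a request of that kind to Ollama's `/api/generate` endpoint; `build_generate_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port and the model
# was pulled as "deepseek-r1:7b".
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "deepseek-r1:7b"


def build_generate_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming Ollama /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the request and return the generated text (needs a running Ollama)."""
    with urllib.request.urlopen(build_generate_request(prompt)) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    req = build_generate_request("Say hello.")
    print(req.get_method(), req.full_url)
```

Setting `"stream": False` makes Ollama return a single JSON object instead of a stream of partial responses, which keeps the parsing in `generate` to one line.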

Set up tools

This is the core of our application: we wrap EyeLevel’s GroundX Python SDK in a custom CrewAI tool.
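In CrewAI, custom tools are typically written by subclassing `BaseTool` (from `crewai.tools`) and implementing `_run`. To keep this sketch runnable without CrewAI or a GroundX API key, it mirrors that shape with a plain class and injects the search backend as a function. `GroundXSearchTool`, `search_fn`, and `fake_search` are hypothetical names; in the real tool, `search_fn` would call GroundX’s search through its Python SDK.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class GroundXSearchTool:
    """Hypothetical stand-in mirroring a CrewAI custom tool's shape.

    name/description are what the agent's LLM reads when deciding whether
    to call the tool; search_fn is injected so the sketch runs offline.
    """

    search_fn: Callable[[str], List[str]]
    name: str = "Document search"
    description: str = "Searches the parsed flight manual and returns relevant text."
    max_chunks: int = 3

    def run(self, query: str) -> str:
        # In a CrewAI BaseTool subclass this logic would live in _run.
        # Keep only the top chunks so the context fits the LLM's window.
        chunks = self.search_fn(query)[: self.max_chunks]
        if not chunks:
            return "No relevant content found."
        return "\n\n".join(chunks)


def fake_search(query: str) -> List[str]:
    # Placeholder for a real GroundX search call; returns canned chunks.
    corpus = {"engine": ["Chunk about engine start.", "Chunk about engine limits."]}
    return [c for key, chunks in corpus.items() if key in query.lower() for c in chunks]


if __name__ == "__main__":
    tool = GroundXSearchTool(search_fn=fake_search)
    print(tool.run("Engine start procedure"))
```

Injecting the search function keeps the tool's contract (query in, text out) separate from the backend, which also makes the agent testable without network access.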

Define Retriever Agent

The retriever agent fetches the context relevant to the user query, and we assign it a task that describes this job. Here's how it's done:
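CrewAI agents are described by a `role`, `goal`, and `backstory` (plus tools and an LLM), and tasks by a `description` and an `expected_output`. The sketch below captures those fields in plain dataclasses so it runs without CrewAI; `AgentSpec`, `TaskSpec`, and the wording of every field are hypothetical, not the author’s definitions. The `{query}` placeholder mimics how CrewAI fills task descriptions from kickoff inputs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentSpec:
    # Field names mirror CrewAI's Agent parameters; values are illustrative.
    role: str
    goal: str
    backstory: str
    tools: List[str] = field(default_factory=list)


@dataclass
class TaskSpec:
    # Field names mirror CrewAI's Task parameters; {query} is filled at kickoff.
    description: str
    expected_output: str
    agent: AgentSpec

    def render(self, **inputs: str) -> str:
        return self.description.format(**inputs)


retriever_agent = AgentSpec(
    role="Retriever",
    goal="Fetch the passages of the flight manual that answer the user query.",
    backstory="You search technical manuals precisely.",
    tools=["Document search"],
)

retrieval_task = TaskSpec(
    description="Retrieve the most relevant context for the query: {query}",
    expected_output="The passages relevant to the query, quoted verbatim.",
    agent=retriever_agent,
)

if __name__ == "__main__":
    print(retrieval_task.render(query="How is the canopy opened?"))
```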

Define Response Gen Agent

The response gen agent takes the user query and the context returned by the retriever agent and generates the final answer.
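At its core, this agent prompts the LLM with the query and the retrieved context. A minimal, hypothetical prompt template is sketched below; `build_answer_prompt` and its wording are assumptions, since CrewAI assembles its own prompts internally from the agent and task definitions.

```python
def build_answer_prompt(query: str, context: str) -> str:
    """Combine the user query and retrieved context into one grounded prompt.

    Hypothetical template: it asks the model to answer only from the
    context and to say so when the context is insufficient.
    """
    context = context.strip() or "(no context retrieved)"
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    print(build_answer_prompt("What does the red warning light mean?", "Chunk A."))
```

Telling the model to admit when the context is insufficient is a common guard against answers that are not grounded in the manual.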

Set up the crew

Once we have the agents and their tasks defined, we put them in a crew that is orchestrated using CrewAI.
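Assuming the crew runs its tasks sequentially (CrewAI’s `Process.sequential`; the post doesn't show which process it uses), each task’s output becomes context for the next task. The plain-Python sketch below imitates that hand-off; `Step` and `run_sequential` are hypothetical names, and the lambdas stand in for the two agents.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    # Hypothetical stand-in for an (agent, task) pair in a crew.
    name: str
    fn: Callable[[Dict[str, str], str], str]  # (inputs, previous output) -> output


def run_sequential(steps: List[Step], inputs: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, feeding each one the previous step's output."""
    outputs: Dict[str, str] = {}
    previous = ""
    for step in steps:
        previous = step.fn(inputs, previous)
        outputs[step.name] = previous
    return outputs


retrieve = Step("retrieve", lambda inp, _: f"context for {inp['query']}")
respond = Step("respond", lambda inp, ctx: f"answer to {inp['query']} from {ctx}")

if __name__ == "__main__":
    result = run_sequential([retrieve, respond], {"query": "stall warning"})
    print(result["respond"])
```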

Kickoff and results

Finally, we provide the user query and kick off the crew!
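In CrewAI, the query is typically passed as `crew.kickoff(inputs={"query": ...})`, and the values are interpolated into `{query}` placeholders in the agent and task definitions. The snippet below reproduces only that interpolation step with `str.format_map`; `interpolate` and the templates are hypothetical.

```python
from typing import Dict, List


def interpolate(templates: List[str], inputs: Dict[str, str]) -> List[str]:
    """Fill {placeholders} in each template; raises KeyError if an input is missing."""
    return [t.format_map(inputs) for t in templates]


task_templates = [
    "Retrieve the most relevant context for the query: {query}",
    "Answer the query '{query}' using the retrieved context.",
]

if __name__ == "__main__":
    for line in interpolate(task_templates, {"query": "How is the canopy opened?"}):
        print(line)
```

Failing loudly on a missing key is preferable to silently sending an unfilled `{query}` to the agents.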

And it works as it should, even on a complex document, which is impressive, isn’t it?

You can test EyeLevel’s SOTA document parsing on your own complex document here →

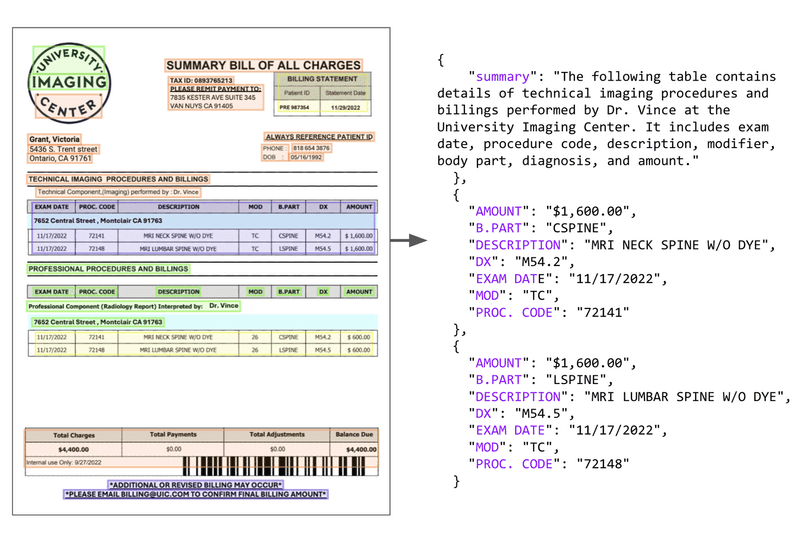

EyeLevel is developing parsing systems that group related content into chunks and understand what each chunk contains, whether it's text, images, or diagrams, as shown below:

As depicted above, the system takes unstructured input (text, tables, images, flow charts) and parses it into JSON that LLMs can easily process, which gives a RAG system a clean base to build on.
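To make the idea concrete, here is a hypothetical example of parsed output and how it might be flattened into labelled text context for an LLM. The JSON schema (`chunks`, `type`, `page`, `text`, `summary`) and its placeholder values are invented for illustration and are not GroundX’s actual output format.

```python
import json
from typing import List

# Hypothetical parser output; field names and values are placeholders.
PARSED = """
{
  "chunks": [
    {"type": "text", "page": 12, "text": "Example procedure text."},
    {"type": "table", "page": 13, "text": "Parameter | Value\\nExample | 1"},
    {"type": "figure", "page": 14, "summary": "Example figure description."}
  ]
}
"""


def chunks_to_context(raw: str) -> List[str]:
    """Turn each parsed chunk into a labelled text snippet an LLM can read."""
    snippets = []
    for chunk in json.loads(raw)["chunks"]:
        # Figures carry a textual summary instead of raw text.
        body = chunk.get("text") or chunk.get("summary", "")
        snippets.append(f"[{chunk['type']}, p.{chunk['page']}] {body}")
    return snippets


if __name__ == "__main__":
    print("\n".join(chunks_to_context(PARSED)))
```

Labelling each snippet with its type and page lets the response agent cite where an answer came from, even when the source was a table or a figure.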

Try EyeLevel to build robust RAG systems over real-world documents:

Find the code for this demo here: Agentic RAG with EyeLevel and CrewAI.

Thanks to EyeLevel for showing us their powerful RAG solution and partnering on today’s issue.

Thanks for reading!