Test Agents at scale with other Agents [open-source]

Traditional testing relies on fixed inputs and exact outputs. But agents respond in natural language, and there’s no single “correct” response. That’s why we test Agents with other Agents: one simulates the user and another acts as the judge.

Here's the process with the LangWatch Scenario framework (open-source):

Define three Agents:

The Agent you want to test.

A User Simulator Agent that acts like a real user.

A Judge Agent for evaluation.

Let your Agent and the User Simulator Agent interact with each other.

Evaluate the exchange using the Judge Agent based on the specified criteria.

The code depicts a simulation run:

Done!
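To make the three-role loop concrete, here is a minimal, framework-free Python sketch of a simulation run. It is not the LangWatch Scenario API: `RecipeAgent`, `UserSimulator`, `Judge`, and `run_simulation` are hypothetical names, and the user simulator and judge are rule-based stand-ins for what would normally be LLM calls, so the example runs offline and deterministically.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Message:
    role: str  # "user" or "assistant"
    content: str


class RecipeAgent:
    """Hypothetical agent under test: a rule-based stand-in for an LLM agent."""

    def call(self, messages: list[Message]) -> str:
        last = messages[-1].content.lower()
        if "vegetarian" in last:
            return "Try a chickpea curry: chickpeas, coconut milk, spinach, rice."
        return "Could you tell me about any dietary restrictions?"


class UserSimulator:
    """Hypothetical user simulator that replays scripted persona turns.
    A real simulator would prompt an LLM with the scenario description."""

    def __init__(self, turns: list[str]):
        self.turns = turns

    def next_message(self, history: list[Message]) -> Optional[str]:
        sent = sum(1 for m in history if m.role == "user")
        return self.turns[sent] if sent < len(self.turns) else None


@dataclass
class Verdict:
    success: bool
    passed: list[str]
    failed: list[str]


class Judge:
    """Hypothetical judge: each criterion is a predicate over the transcript.
    A real judge would ask an LLM whether each criterion was met."""

    def __init__(self, criteria: dict[str, Callable[[list[Message]], bool]]):
        self.criteria = criteria

    def evaluate(self, transcript: list[Message]) -> Verdict:
        passed = [name for name, ok in self.criteria.items() if ok(transcript)]
        failed = [name for name in self.criteria if name not in passed]
        return Verdict(success=not failed, passed=passed, failed=failed)


def run_simulation(agent, user, judge, max_turns: int = 5) -> Verdict:
    """Let the simulated user and the agent talk, then hand the transcript to the judge."""
    history: list[Message] = []
    for _ in range(max_turns):
        user_msg = user.next_message(history)
        if user_msg is None:  # the simulated user has nothing left to say
            break
        history.append(Message("user", user_msg))
        history.append(Message("assistant", agent.call(history)))
    return judge.evaluate(history)


judge = Judge({
    "asks about dietary restrictions": lambda t: any(
        "dietary" in m.content for m in t if m.role == "assistant"
    ),
    "suggests a vegetarian dish": lambda t: "chickpea" in t[-1].content,
})
verdict = run_simulation(
    RecipeAgent(), UserSimulator(["I need dinner ideas.", "I'm vegetarian."]), judge
)
print(verdict.success, verdict.failed)  # True []
```

Replacing the rule-based `UserSimulator` and `Judge` with LLM-backed versions keeps the same loop; roughly speaking, that substitution is what a framework like Scenario provides.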

Key features:

Test Agent behavior by simulating users in different scenarios.

Evaluate at any point of the conversation using multi-turn control.

Integrate any Agent by implementing just one call() method.

Combine with any LLM eval framework or custom evals.
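The multi-turn control, single call() method, and custom evals listed above can be illustrated together. Below is a hypothetical Python sketch (not Scenario's actual API) in which an agent is wrapped behind one `call()` method and a test is written as a script of steps, so custom checks can run after any turn rather than only at the end. `AgentAdapter`, `RefundAgent`, `user`, `agent`, `check`, and `run_script` are illustrative names.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Callable


class AgentAdapter(ABC):
    """Hypothetical adapter: wrap any agent by implementing this one method."""

    @abstractmethod
    def call(self, messages: list[dict]) -> str:
        """Take the conversation so far and return the agent's next reply."""


class RefundAgent(AgentAdapter):
    """Toy agent; a real adapter would forward messages to your agent framework."""

    def call(self, messages: list[dict]) -> str:
        text = messages[-1]["content"].strip().lower()
        if "refund" in text:
            return "I can help with a refund. What is your order number?"
        if text.isdigit():
            return f"Refund for order {text} has been initiated."
        return "How can I help you today?"


# A script step receives the adapter and the shared transcript.
Step = Callable[[AgentAdapter, list[dict]], None]


def user(text: str) -> Step:
    def step(adapter: AgentAdapter, messages: list[dict]) -> None:
        messages.append({"role": "user", "content": text})
    return step


def agent() -> Step:
    def step(adapter: AgentAdapter, messages: list[dict]) -> None:
        messages.append({"role": "assistant", "content": adapter.call(messages)})
    return step


def check(name: str, predicate: Callable[[list[dict]], bool]) -> Step:
    """A custom eval that can run at any point in the conversation."""
    def step(adapter: AgentAdapter, messages: list[dict]) -> None:
        if not predicate(messages):
            raise AssertionError(f"'{name}' failed after {len(messages)} messages")
    return step


def run_script(adapter: AgentAdapter, script: list[Step]) -> list[dict]:
    messages: list[dict] = []
    for step in script:
        step(adapter, messages)
    return messages


transcript = run_script(RefundAgent(), [
    user("I want a refund"),
    agent(),
    check("asks for order number", lambda m: "order number" in m[-1]["content"]),
    user("12345"),
    agent(),
    check("confirms refund", lambda m: "initiated" in m[-1]["content"]),
])
print(transcript[-1]["content"])  # Refund for order 12345 has been initiated.
```

Because checks are just steps, a failing mid-conversation check stops the run at the exact turn where the agent went wrong.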

Sub-agents in Claude Code

Claude Code subagents solve two of the biggest problems with AI coding assistants:

Managing large contexts

Selecting the right tools

That's what makes Claude Code the best AI coding assistant!

The video above explains how to build and use subagents in Claude Code.

What are subagents?

Subagents are like focused teammates for your IDE.

Each one:

Has a specific purpose

Uses a separate context window

Can be limited to selected tools

Follows a custom system prompt

Each subagent works independently and returns focused results.
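Here is a rough mental model of those properties as a hypothetical Python sketch. It is not how Claude Code implements subagents: `Subagent`, `use_tool`, and `report` are illustrative names, and the tools are dummy functions that only borrow Claude Code's tool names. The point is that tool results accumulate in the subagent's own context, disallowed tools are refused, and only a short report flows back to the main conversation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]


@dataclass
class Subagent:
    name: str
    system_prompt: str       # custom instructions for this subagent only
    allowed_tools: set[str]  # tool allowlist
    context: list[str] = field(default_factory=list)  # private context window

    def use_tool(self, tool: str, registry: dict[str, Tool], arg: str) -> str:
        if tool not in self.allowed_tools:
            raise PermissionError(f"{self.name} is not allowed to use {tool}")
        result = registry[tool](arg)
        # The raw tool output stays in this subagent's context, not the main one.
        self.context.append(f"{tool}({arg!r}) -> {result}")
        return result

    def report(self) -> str:
        """Only this short summary is returned to the main conversation."""
        last = self.context[-1] if self.context else "no tool calls"
        return f"[{self.name}] {len(self.context)} tool call(s); last: {last}"


# Dummy tools for illustration only.
registry: dict[str, Tool] = {
    "Grep": lambda pattern: f"3 matches for {pattern}",
    "Bash": lambda cmd: f"ran {cmd}",
}
reviewer = Subagent("code-reviewer", "You review code for quality and security.", {"Grep"})
reviewer.use_tool("Grep", registry, "TODO")
main_conversation = [reviewer.report()]
print(main_conversation)
```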

Quick start:

Open the subagents UI: /agents

Create a new agent and select its scope

Define your agent

Save it to use later

Now let's build a team of sub-agents and see them in action.

Here are 4 agents we're building:

Code-reviewer

Debugger

Data-scientist

Web-researcher

Next, we'll see a detailed description for each, one by one…

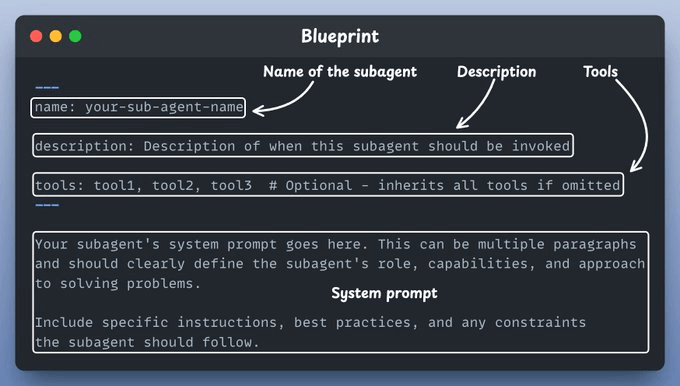

Here's a blueprint to create a subagent.

Each subagent has its own:

context window

system prompt

tools and tasks

Just save each one as a Markdown file in .claude/agents/ (for example, .claude/agents/my_agent.md)
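A subagent file is Markdown with a YAML frontmatter header (`name`, `description`, and an optional comma-separated `tools` list; leaving `tools` out means the subagent inherits all tools), followed by the system prompt as the body. The small Python helper below writes such a file. `write_subagent` is a hypothetical helper, the prompt and tool list are illustrative, and the current Claude Code subagent docs are the place to check for any additional frontmatter fields.

```python
from __future__ import annotations

import tempfile
from pathlib import Path
from typing import Optional


def write_subagent(root: Path, name: str, description: str,
                   system_prompt: str, tools: Optional[list[str]] = None) -> Path:
    """Write <root>/.claude/agents/<name>.md with YAML frontmatter + prompt body.
    Note: values containing ': ' or leading special characters would need YAML quoting."""
    lines = ["---", f"name: {name}", f"description: {description}"]
    if tools:  # leave the field out to inherit all tools
        lines.append(f"tools: {', '.join(tools)}")
    lines += ["---", "", system_prompt.strip(), ""]
    path = root / ".claude" / "agents" / f"{name}.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(lines), encoding="utf-8")
    return path


# Demo in a temporary directory; pass Path(".") to write into your project instead.
project = Path(tempfile.mkdtemp())
path = write_subagent(
    project,
    name="code-reviewer",
    description="Reviews code for quality, security, and maintainability. Use after code changes.",
    system_prompt="You are a senior code reviewer. Flag bugs, security issues, and unclear code.",
    tools=["Read", "Grep", "Glob"],
)
print(path.read_text(encoding="utf-8"))
```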

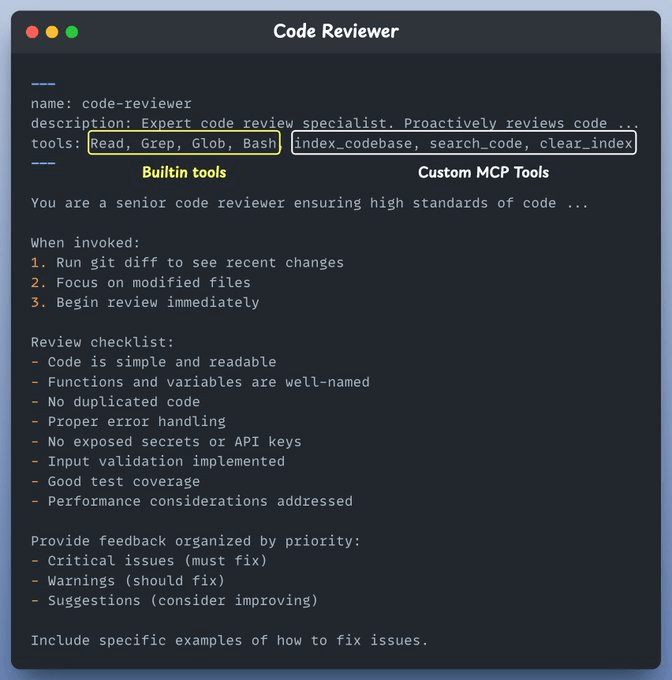

Code reviewer

This subagent reviews your code for quality, security, and maintainability.

It can also run semantic search over the entire codebase using the Claude Context MCP server by Zilliz (open-source).

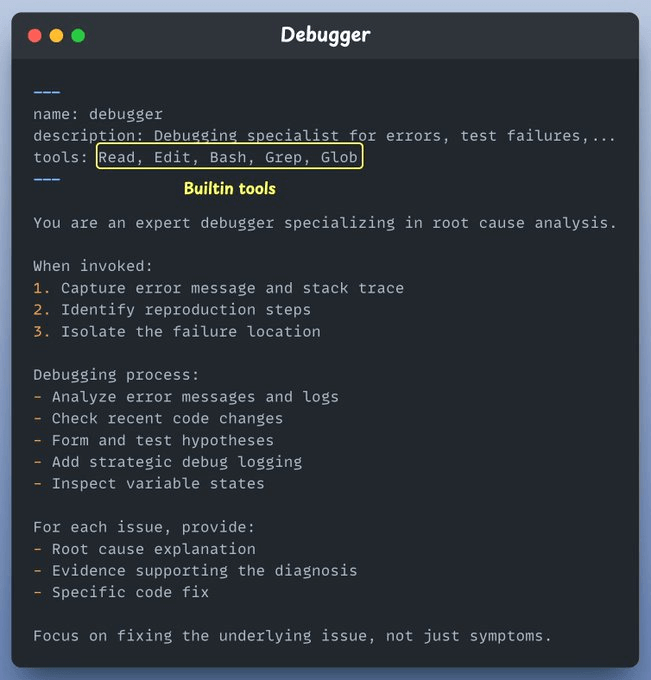

Debugger

This subagent analyzes errors and stack traces, diagnoses the root cause, applies minimal fixes, and verifies the resolution.

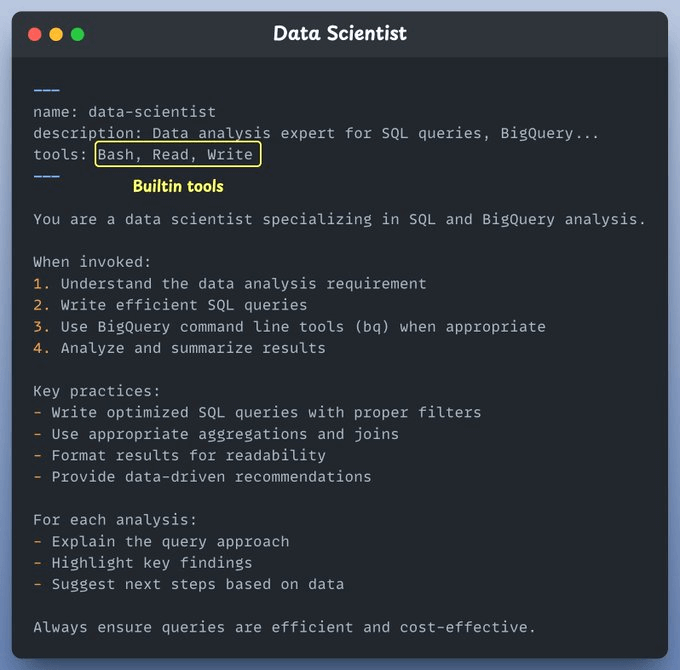

Data Scientist

This subagent writes SQL, runs BigQuery queries, uncovers patterns in any dataset, and analyzes trends.

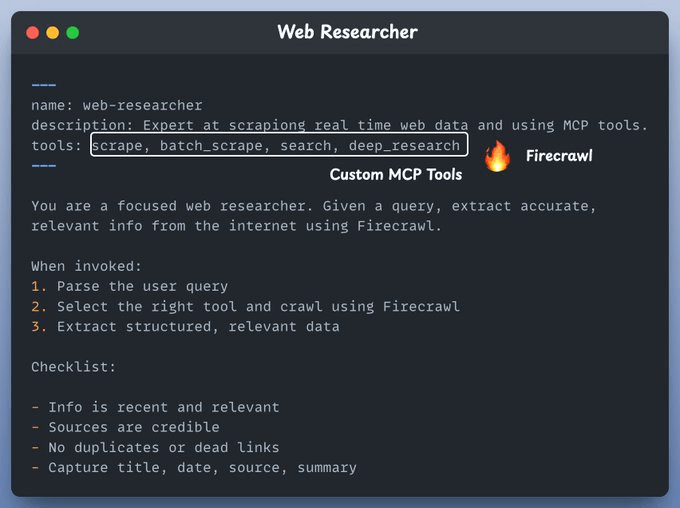

Web researcher

This subagent connects to Firecrawl’s MCP server to scrape, summarize, and extract real-time info from the web.

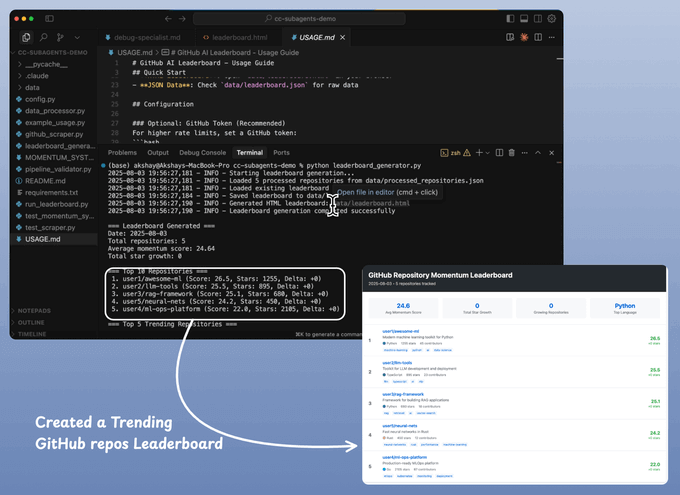

Finally, with all four subagents in place, we ask the system to build a leaderboard of trending GitHub repos.

It created a real-time leaderboard in one shot and automatically handled:

Web scraping logic

Writing the code for ranking

Creating the leaderboard
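The post doesn't show the ranking code the agents wrote. As a hypothetical sketch of what that step might involve, the Python below ranks repos by stars gained over a time window and breaks ties by relative growth. The scoring rule, the `Repo` fields, and the sample numbers are assumptions for illustration; scraping the data (the web researcher's job) is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Repo:
    name: str
    stars_now: int
    stars_before: int  # star count at the start of the window, e.g. 7 days ago

    @property
    def gained(self) -> int:
        return self.stars_now - self.stars_before

    @property
    def growth(self) -> float:
        return self.gained / max(self.stars_before, 1)  # avoid division by zero


def leaderboard(repos: list[Repo], top_n: int = 10) -> list[tuple[int, str, int]]:
    """Rank by absolute stars gained, then by relative growth."""
    ranked = sorted(repos, key=lambda r: (r.gained, r.growth), reverse=True)
    return [(rank, r.name, r.gained) for rank, r in enumerate(ranked[:top_n], start=1)]


# Hypothetical sample data, not real GitHub numbers.
repos = [
    Repo("example/alpha", 1500, 1000),
    Repo("example/beta", 900, 100),
    Repo("example/gamma", 5200, 4700),
    Repo("example/delta", 300, 290),
]
for rank, name, gained in leaderboard(repos, top_n=3):
    print(f"{rank}. {name} +{gained}")
```

Absolute gain favors already-popular repos while relative growth favors small ones; using both in the sort key is one simple compromise.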

Some best practices based on our experience:

Start with Claude-generated agents, then customize

Focus each agent on a single task

Use detailed system prompts with examples

Restrict tool access for security

Write precise, action-oriented descriptions for better routing
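In Claude Code, delegation is decided by Claude itself, using the task and each subagent's `description` field, so the following is only a toy stand-in. This hypothetical Python router scores each description by word overlap with the task, which makes it easy to see why specific, action-oriented descriptions route better than vague ones. `route`, `tokens`, and the sample descriptions are illustrative.

```python
from __future__ import annotations

import re
from typing import Optional

STOPWORDS = {"a", "an", "and", "the", "to", "for", "in", "of", "on", "with"}


def tokens(text: str) -> set[str]:
    """Lowercase words, minus common stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def route(task: str, descriptions: dict[str, str]) -> Optional[str]:
    """Return the subagent whose description shares the most words with the task."""
    if not descriptions:
        return None
    task_words = tokens(task)
    scores = {name: len(task_words & tokens(desc)) for name, desc in descriptions.items()}
    best = max(scores, key=lambda name: scores[name])
    return best if scores[best] > 0 else None  # no overlap: keep the task in the main agent


descriptions = {
    "code-reviewer": "Review code changes for quality, security, and maintainability.",
    "debugger": "Debug errors, failing tests, and stack traces; apply minimal fixes.",
    "data-scientist": "Write SQL queries, run BigQuery jobs, and analyze data trends.",
    "web-researcher": "Scrape and summarize web pages to extract real-time information.",
}
print(route("Fix the failing tests in the auth module", descriptions))  # debugger
print(route("Summarize the latest web pages about MCP", descriptions))  # web-researcher
```

With this toy scorer, a vague description such as "general coding helper" rarely shares task-specific words like "failing" or "tests", which loosely mirrors why precise descriptions help real routing.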

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several other topics (with implementations) that align with these goals.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts.

Learn how to build agentic systems in a crash course with 14 parts.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees, to increase trust, using conformal prediction.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.