Meta released Llama 3.3 yesterday.

So here is a practical, hands-on demo of building a RAG app with Llama 3.3.

The final outcome is shown in the video above.

The app accepts a document and lets the user interact with it via chat.

We’ll use:

LlamaIndex for orchestration.

Qdrant to self-host a vector database.

Ollama for locally serving Llama 3.3.

The code is available in this Studio: Llama 3.3 RAG app code. You can run it without any installations by reproducing our environment below:

Let’s build it!

Workflow

The workflow is shown in the animation below:

Implementation

Next, let’s start implementing it.

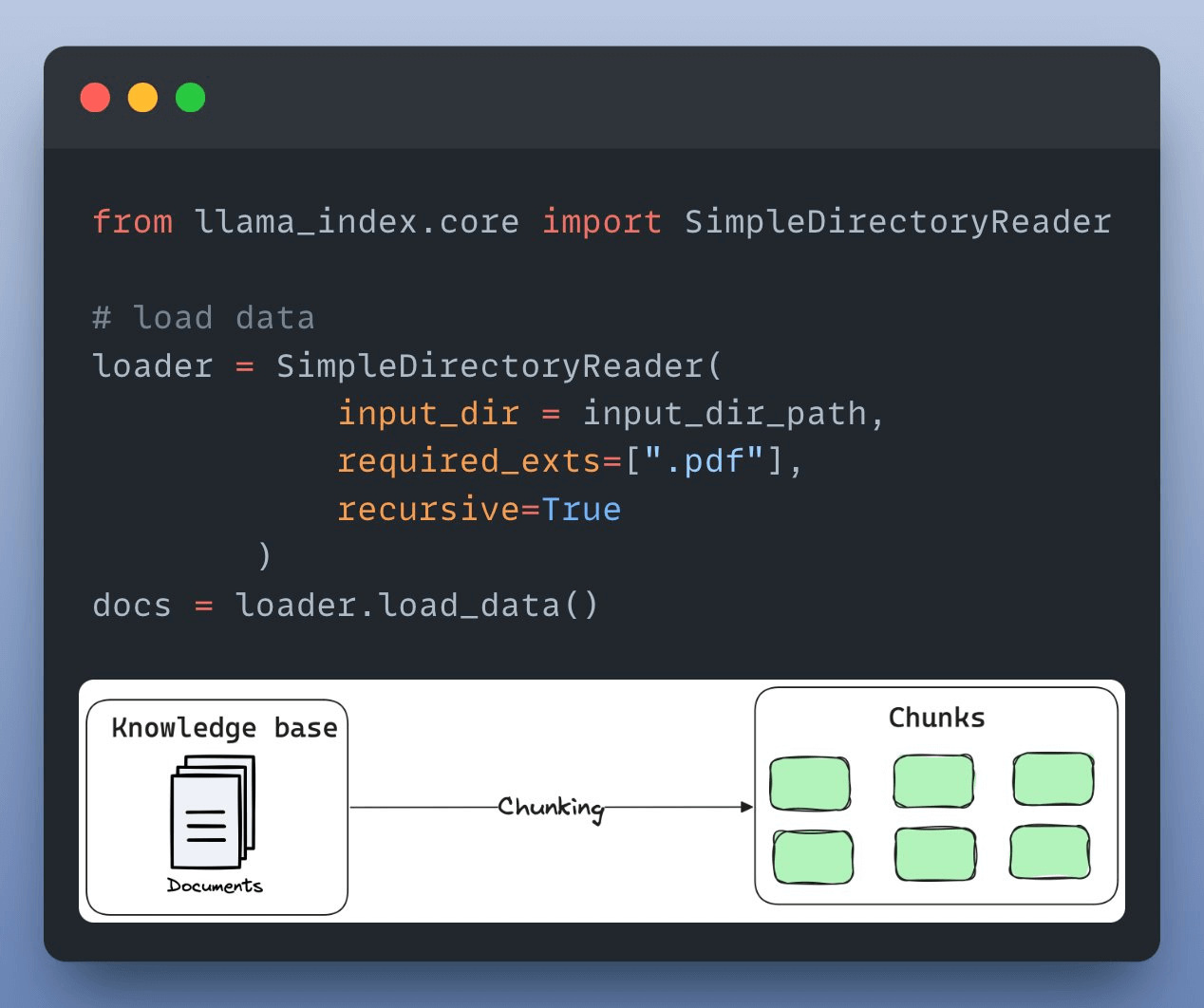

First, we load and parse the external knowledge base, which is a document stored in a directory, using LlamaIndex:
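A minimal sketch of this step, assuming the knowledge base is a folder of PDFs and that `llama-index` is installed. The helper name `load_documents` and the `.pdf` filter are illustrative choices, not something the demo prescribes:

```python
from pathlib import Path


def load_documents(input_dir: str, exts=(".pdf",)):
    """Load every matching file under input_dir as LlamaIndex Document objects."""
    # Fail early with a clear message if the directory is missing.
    if not Path(input_dir).is_dir():
        raise FileNotFoundError(f"No such directory: {input_dir}")

    # Deferred import: requires the `llama-index` package.
    from llama_index.core import SimpleDirectoryReader

    reader = SimpleDirectoryReader(
        input_dir=input_dir,
        required_exts=list(exts),  # assumption: only PDFs are of interest
        recursive=True,            # also pick up files in sub-folders
    )
    return reader.load_data()
```

Calling `docs = load_documents("./docs")` would then return a list of parsed documents ready for embedding; the `./docs` path is only an example.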

Next, we define an embedding model, which will create embeddings for the document chunks and user queries:
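One way this could look, assuming a Hugging Face model served through the `llama-index-embeddings-huggingface` integration. The model name `BAAI/bge-large-en-v1.5` is an assumption chosen for illustration. The small `cosine_similarity` helper is not part of LlamaIndex; it only shows why chunks and queries must share one model, since their vectors are compared directly (cosine similarity is a common choice for that comparison):

```python
import math


def build_embed_model(model_name: str = "BAAI/bge-large-en-v1.5"):
    """Create an embedding model and register it as LlamaIndex's default."""
    # Deferred imports: require `llama-index-embeddings-huggingface`.
    from llama_index.core import Settings
    from llama_index.embeddings.huggingface import HuggingFaceEmbedding

    embed_model = HuggingFaceEmbedding(model_name=model_name)
    Settings.embed_model = embed_model  # used for document chunks and queries alike
    return embed_model


def cosine_similarity(a, b):
    """Illustrative only: how close two embedding vectors point in direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)
```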

After creating the embeddings, the next task is to index and store them in a vector database. We’ll use a self-hosted Qdrant vector database for this as follows:
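A hedged sketch of the indexing step, assuming Qdrant is already running locally (for example in Docker) on its default port 6333, and that `qdrant-client` and `llama-index-vector-stores-qdrant` are installed. The collection name `document_chat` and the helper name `build_index` are made up for this example:

```python
def build_index(docs, collection_name="document_chat", host="localhost", port=6333):
    """Embed the documents and store the vectors in a Qdrant collection."""
    if not docs:
        raise ValueError("No documents to index")

    # Deferred imports: require `qdrant-client` and the LlamaIndex Qdrant integration.
    import qdrant_client
    from llama_index.core import StorageContext, VectorStoreIndex
    from llama_index.vector_stores.qdrant import QdrantVectorStore

    # Connect to the self-hosted Qdrant instance (assumed to be reachable).
    client = qdrant_client.QdrantClient(host=host, port=port)
    vector_store = QdrantVectorStore(client=client, collection_name=collection_name)

    # Tell LlamaIndex to persist vectors in Qdrant instead of in memory.
    storage_context = StorageContext.from_defaults(vector_store=vector_store)
    return VectorStoreIndex.from_documents(docs, storage_context=storage_context)
```

`VectorStoreIndex.from_documents` chunks the documents, embeds each chunk with the configured embedding model, and writes the vectors to the collection.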

Next, we define a custom prompt template that injects the retrieved context and steers how the LLM phrases its response:
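As an illustration, a template along these lines would work. The wording of the instructions is an assumption; the `{context_str}` and `{query_str}` placeholders are the variable names LlamaIndex fills in for its question-answering prompt:

```python
# Illustrative wording; adapt the instructions to your use case.
QA_PROMPT_TMPL_STR = (
    "Context information is below.\n"
    "---------------------\n"
    "{context_str}\n"
    "---------------------\n"
    "Given the context information above, think step by step and answer "
    "the query concisely. If you don't know the answer, say \"I don't know!\".\n"
    "Query: {query_str}\n"
    "Answer: "
)


def build_prompt_template(template_str: str = QA_PROMPT_TMPL_STR):
    """Wrap the raw string in a LlamaIndex PromptTemplate."""
    # Deferred import: requires the `llama-index` package.
    from llama_index.core import PromptTemplate

    return PromptTemplate(template_str)


# Plain-Python preview of what the LLM would receive for one query.
preview = QA_PROMPT_TMPL_STR.format(
    context_str="Paris is the capital of France.",
    query_str="What is the capital of France?",
)
```

Printing `preview` is a quick way to sanity-check the template before wiring it into the query engine.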

Almost done!

Finally, we set up a query engine that accepts a query string and uses it to fetch relevant context.

It then sends the context and the query as a prompt to the LLM to generate a final response.

This is implemented below:
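A minimal sketch, assuming an Ollama server is running locally with the model already pulled, and that `llama-index-llms-ollama` is installed. The model tag `llama3.3`, the timeout, and `top_k=4` are illustrative assumptions; `index` and `qa_prompt_tmpl` stand for the vector index and prompt template built in the earlier steps:

```python
def build_query_engine(index, qa_prompt_tmpl, model="llama3.3", top_k=4):
    """Create a query engine that retrieves top_k chunks and asks the LLM."""
    if top_k < 1:
        raise ValueError("top_k must be at least 1")

    # Deferred import: requires `llama-index-llms-ollama`.
    from llama_index.llms.ollama import Ollama

    # Large local models can be slow to respond, hence the generous timeout.
    llm = Ollama(model=model, request_timeout=120.0)

    # Retrieve the top_k most similar chunks for each query.
    query_engine = index.as_query_engine(llm=llm, similarity_top_k=top_k)

    # Swap the default question-answering prompt for the custom template.
    query_engine.update_prompts(
        {"response_synthesizer:text_qa_template": qa_prompt_tmpl}
    )
    return query_engine
```

With the engine in hand, `response = query_engine.query("What is this document about?")` runs retrieval and generation in one call, and `str(response)` gives the answer text.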

Done!

We have not shown the Streamlit part here, but after building it, we get this clean interface:

Wasn’t that easy and straightforward?

👉 Over to you: What other demos would you like to see with Llama 3.3?

Thanks for reading, and we'll see you next week!