Web scraping in pure English with Firecrawl Extract

Using the Firecrawl Extract endpoint, just describe what you want to extract in a prompt. This produces LLM-ready structured output. No more hard coding!

FireCrawl also generates code snippets to run the same job programmatically.

Thanks to FireCrawl for showing us their powerful scraping capabilities and partnering today!

MultiModal RAG with Qwen2.5-VL

Recently, the Qwen team at Alibaba Cloud released the Qwen2.5-VL series, a multimodal LLM, outperforming most powerful LLMs:

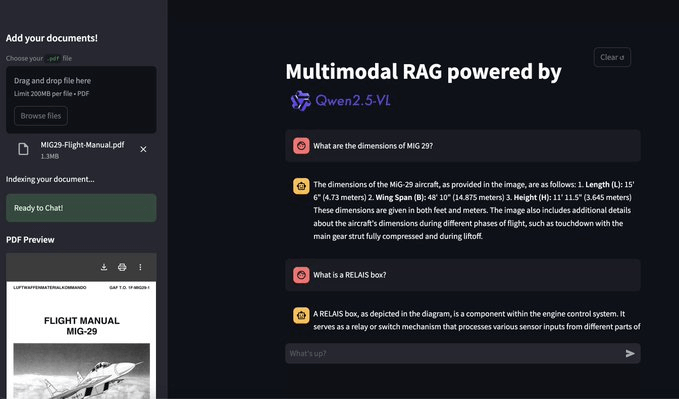

Today, using this model, we’ll do multimodal RAG over the MIG 29 (a fighter aircraft) flight manual, which includes complex figures, diagrams, and more.

It has several complex diagrams, text within visualizations, and tables—perfect for multimodal RAG.

We’ll use:

Colpali to understand and embed docs using vision capabilities.

Qdrant as the vector database.

Qwen2.5-VL-3B multimodal LLM to generate a response.

The video at the top shows the final outcome.

The code is available in this Studio: Multimodal RAG with Qwen 2.5-VL. You can run it without any installations by reproducing our environment below:

Let's build it!

1) Embed data

We extract each document page as an image and embed it using ColPali.

We did a full architectural breakdown of ColPali in Part 9 of the RAG crash course and also optimized it with binary quantization.

ColPali uses vision capabilities to understand the context. It produces patches for every page, and each patch gets an embedding vector.

This is implemented below:

2) Vector database

Embeddings are ready. Next, we create a Qdrant vector database and store these embeddings in it, as demonstrated below:

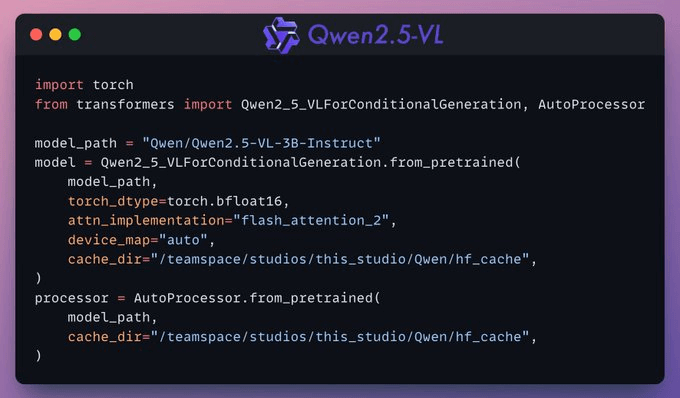

3) Set up Qwen2.5-VL locally

Next, we set up Qwen2.5-VL by downloading it from HuggingFace.

4) Query vector database and generate a response

Next, we:

Query the vector database to get the most relevant pages.

Pass the pages (as images) along with the query to Qwen2.5-VL to generate the response.

Done!

We have implemented a 100% local Multimodal RAG powered by Qwen2.5-VL.

There’s some streamlit part we have shown here, but after building it, we get this clear and neat interface.

In this example, it produces the right response by retrieving the correct page and understanding a complex visualization👇

Wasn’t that easy and straightforward?

The code is available in this Studio: Multimodal RAG with Qwen 2.5-VL. You can run it without any installations by reproducing our environment below:

👉 Over to you: What other demos would you like to see with Qwen 2.5-VL?

Thanks for reading!