ChatGPT has a deep research feature. It helps you get detailed insights on any topic.

Today, let us show you how you can build a 100% local alternative to it.

The video above gives you a quick demo of what we are building today and a complete walkthrough!

Tech stack:

Linkup for deep web research.

CrewAI for multi-agent orchestration.

Ollama to locally serve DeepSeek-R1.

Cursor as the MCP host.

Here’s the system overview:

User submits a query.

Web search agent runs deep web search via Linkup.

Research analyst verifies and deduplicates results.

Technical writer crafts a coherent response with citations.

The code repository is linked later in the issue.

Implementation

Now let’s implement it!

Set up LLM

We'll use DeepSeek-R1, served locally with Ollama.
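As a minimal sketch, here is one way to point CrewAI at the local model. The model tag `deepseek-r1:7b` and the port are assumptions (11434 is Ollama's default), so swap in whichever DeepSeek-R1 variant you pulled. `get_llm_client` is a helper name we made up for illustration.

```python
# Assumed values: adjust the model tag and port to match your Ollama install.
OLLAMA_MODEL = "ollama/deepseek-r1:7b"      # "ollama/" prefix routes the call to Ollama
OLLAMA_BASE_URL = "http://localhost:11434"  # Ollama's default local endpoint


def get_llm_client():
    """Return a CrewAI LLM handle that talks to the local Ollama server."""
    # Imported lazily so this file loads even before crewai is installed.
    from crewai import LLM

    return LLM(model=OLLAMA_MODEL, base_url=OLLAMA_BASE_URL)
```

Before calling `get_llm_client()`, make sure the model is available locally, for example with `ollama pull deepseek-r1:7b`.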

Define Web Search Tool

We'll use Linkup’s powerful search capabilities, which rival Perplexity and OpenAI, to power our web search agent.

This is done by defining a custom tool that our agent can use.
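A custom tool along these lines could look like the sketch below. It assumes the `linkup-sdk` package, a `LINKUP_API_KEY` environment variable, and a CrewAI version that exposes `BaseTool` under `crewai.tools`. The names `search_kwargs` and `make_linkup_tool` are hypothetical helpers, not part of either library.

```python
import os

VALID_DEPTHS = ("standard", "deep")


def search_kwargs(query: str, depth: str = "deep") -> dict:
    """Validate inputs and build the keyword arguments for LinkupClient.search."""
    if not query.strip():
        raise ValueError("query must not be empty")
    if depth not in VALID_DEPTHS:
        raise ValueError(f"depth must be one of {VALID_DEPTHS}")
    return {"query": query, "depth": depth, "output_type": "searchResults"}


def make_linkup_tool():
    """Build a CrewAI tool that runs a Linkup search and returns the results as text."""
    # Lazy imports: crewai and linkup-sdk are only needed once the tool is built.
    from crewai.tools import BaseTool
    from linkup import LinkupClient

    class LinkupSearchTool(BaseTool):
        name: str = "Linkup Search"
        description: str = "Search the web with Linkup and return the results."

        def _run(self, query: str) -> str:
            try:
                client = LinkupClient(api_key=os.getenv("LINKUP_API_KEY"))
                return str(client.search(**search_kwargs(query)))
            except Exception as exc:
                # Return the failure as text so the agent can react to it.
                return f"Error occurred while searching: {exc}"

    return LinkupSearchTool()
```

Returning errors as strings rather than raising keeps the agent loop alive when a single search fails.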

Define Web Search Agent

The web search agent gathers up-to-date information from the internet based on the user's query.

This agent uses the Linkup tool we defined earlier.

Define Research Analyst Agent

This agent transforms raw web search results into structured insights, with source URLs.

It can also delegate tasks back to the web search agent for verification and fact-checking.

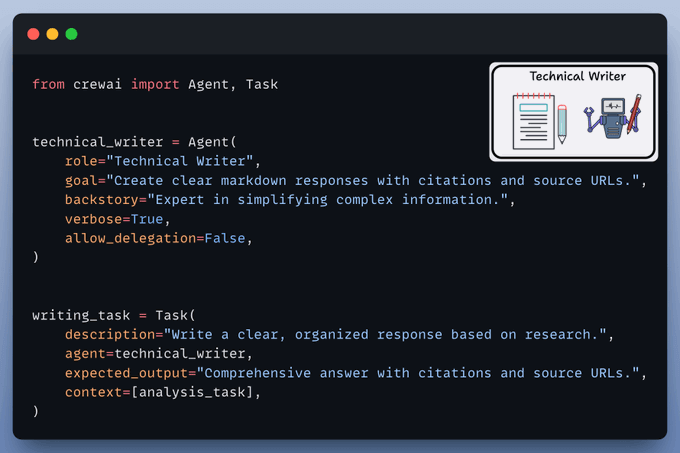

Define Technical Writer Agent

It takes the analyzed and verified results from the analyst agent and drafts a coherent response with citations for the end user.
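To make the three roles concrete, here is a rough sketch that declares them in one table and builds CrewAI agents from it. The role, goal, and backstory strings are illustrative assumptions, and `build_agents` is a hypothetical helper. Only the web searcher gets the search tool, and only the analyst is allowed to delegate, which matches the descriptions above.

```python
AGENT_SPECS = {
    "web_searcher": {
        "role": "Web Searcher",
        "goal": "Find up-to-date information on the web, with source links.",
        "backstory": "An expert at crafting search queries and gathering sources.",
        "uses_search_tool": True,
        "allow_delegation": False,
    },
    "research_analyst": {
        "role": "Research Analyst",
        "goal": "Turn raw search results into structured insights with source URLs.",
        "backstory": "A careful analyst who verifies and deduplicates findings.",
        "uses_search_tool": False,
        "allow_delegation": True,  # may hand fact-checks back to the web searcher
    },
    "technical_writer": {
        "role": "Technical Writer",
        "goal": "Draft a coherent response with citations for the end user.",
        "backstory": "A writer who explains complex topics clearly.",
        "uses_search_tool": False,
        "allow_delegation": False,
    },
}


def build_agents(llm, search_tool) -> dict:
    """Create one CrewAI Agent per entry in AGENT_SPECS, keyed by the same names."""
    from crewai import Agent  # lazy import: needed only when agents are built

    return {
        key: Agent(
            role=spec["role"],
            goal=spec["goal"],
            backstory=spec["backstory"],
            tools=[search_tool] if spec["uses_search_tool"] else [],
            allow_delegation=spec["allow_delegation"],
            llm=llm,
            verbose=True,
        )
        for key, spec in AGENT_SPECS.items()
    }
```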

Set up Crew

Finally, once we have all the agents and tools defined, we set up and kick off our deep researcher crew.
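One possible way to wire this up is sketched below: one task per agent, run sequentially, with each task receiving the previous one as context. The task wording and the `build_crew` and `run_research` helper names are assumptions for illustration. The `{query}` placeholder is filled in from the `inputs` passed to `kickoff`.

```python
def build_crew(web_searcher, research_analyst, technical_writer):
    """Chain search -> analysis -> writing into a sequential CrewAI crew."""
    from crewai import Crew, Process, Task  # lazy import

    search_task = Task(
        description="Search the web for comprehensive information about: {query}",
        expected_output="Raw search results with source URLs.",
        agent=web_searcher,
    )
    analysis_task = Task(
        description="Verify, deduplicate, and structure the search results.",
        expected_output="Structured, verified insights with source URLs.",
        agent=research_analyst,
        context=[search_task],
    )
    writing_task = Task(
        description="Write a clear, well-organized answer to: {query}",
        expected_output="A coherent response with citations.",
        agent=technical_writer,
        context=[analysis_task],
    )
    return Crew(
        agents=[web_searcher, research_analyst, technical_writer],
        tasks=[search_task, analysis_task, writing_task],
        process=Process.sequential,
        verbose=True,
    )


def run_research(crew, query: str) -> str:
    """Kick off the crew for one query and return the final text output."""
    result = crew.kickoff(inputs={"query": query})
    return result.raw
```

A call would then look like `run_research(build_crew(searcher, analyst, writer), "small language models")`.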

Create MCP Server

Now, we'll encapsulate our deep research team within an MCP tool. With just a few lines of code, our MCP server will be ready.
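A sketch of such a server, assuming the official MCP Python SDK (`mcp` package) and its `FastMCP` helper, might look like this. The server name `deep_researcher`, the tool name `deep_research`, and the `research_fn` callback (any function that maps a query string to an answer) are our own placeholders.

```python
def safe_research(research_fn, query: str) -> str:
    """Run the research function and convert any failure into an error string."""
    try:
        return str(research_fn(query))
    except Exception as exc:
        return f"Error: {exc}"


def create_server(research_fn):
    """Expose research_fn as a single MCP tool and return the server object."""
    from mcp.server.fastmcp import FastMCP  # lazy import

    server = FastMCP("deep_researcher")

    @server.tool()
    def deep_research(query: str) -> str:
        """Run the deep research crew on a query and return the written answer."""
        return safe_research(research_fn, query)

    return server
```

To serve it over stdio, which is how a host like Cursor usually launches local servers, call `create_server(my_research_fn).run(transport="stdio")` from your entry script.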

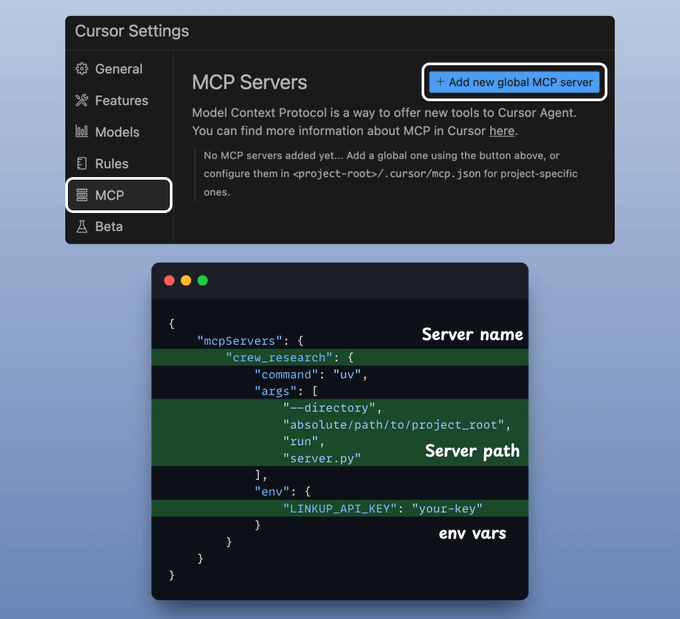

Here’s how to connect it with Cursor:

Integrate MCP server with Cursor

Go to: File → Preferences → Cursor Settings → MCP → Add new global MCP server

In the JSON file, add what's shown below:
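As a rough guide, the entry follows the `mcpServers` layout that Cursor reads from its MCP config. The small script below prints one you can paste in. The server name, the `python` launch command, and the script path are assumptions, so adapt them to however you start your server.

```python
import json


def cursor_mcp_entry(server_script: str, linkup_api_key: str) -> dict:
    """Build the `mcpServers` entry that tells Cursor how to launch the server."""
    return {
        "mcpServers": {
            "deep_researcher": {           # hypothetical server name
                "command": "python",        # assumes `python` is on PATH
                "args": [server_script],    # absolute path to the server script
                "env": {"LINKUP_API_KEY": linkup_api_key},
            }
        }
    }


# Placeholder values: replace both with your own before pasting the output.
print(json.dumps(
    cursor_mcp_entry("/absolute/path/to/server.py", "your-linkup-api-key"),
    indent=2,
))
```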

Done! Your deep research MCP server is live and connected to Cursor (you can also connect it to Claude Desktop if you’d like).

To use the Deep Researcher on its own, we have also built a Streamlit UI. Here it is running deep research on “small language models”:

And that’s your MCP-powered 100% local deep researcher!

Find the code in this GitHub repo → (don’t forget to star the repo)

👉 Over to you: What other demos would you like to see?

Thanks for reading!