Last week, we talked about ModernBERT, which is an upgraded version of BERT, with:

16x larger sequence length.

Much better downstream performance, both for classification tasks and retrieval (like used in RAG systems)

The most memory-efficient encoder.

Today, we used it to build a RAG app to chat with documents, and the demo is shown above.

The app accepts a document and lets the user chat with it.

Here’s our tool stack:

Embeddings → ModernBERT from Nomic-ai, which builds lightweight tools that enable everyone to interact with AI scale datasets and run AI models on consumer computers.

LLM → Ollama to serve the LLM locally.

Orchestration → LlamaIndex for building the RAG app.

UI → Streamlit.

On a side note, we started a beginner-friendly crash course on RAGs recently with implementations, which covers:

Foundations of RAG

RAG evaluation

RAG optimization

Building blocks of Multimodal RAG (CLIP, multimodal prompting, etc.)

Building multimodal RAG systems.

Graph RAG

Improving rerankers in RAG.

Let’s build the RAG app now!

Implementation

Next, let’s start implementing it.

Note: Since the ModernBERT is new, it requires transformers to be installed from the (stable) main branch of the transformers repository.

We have detailed the steps to setup the project in our GitHub repository here: Llama 3.2 RAG using ModernBERT.

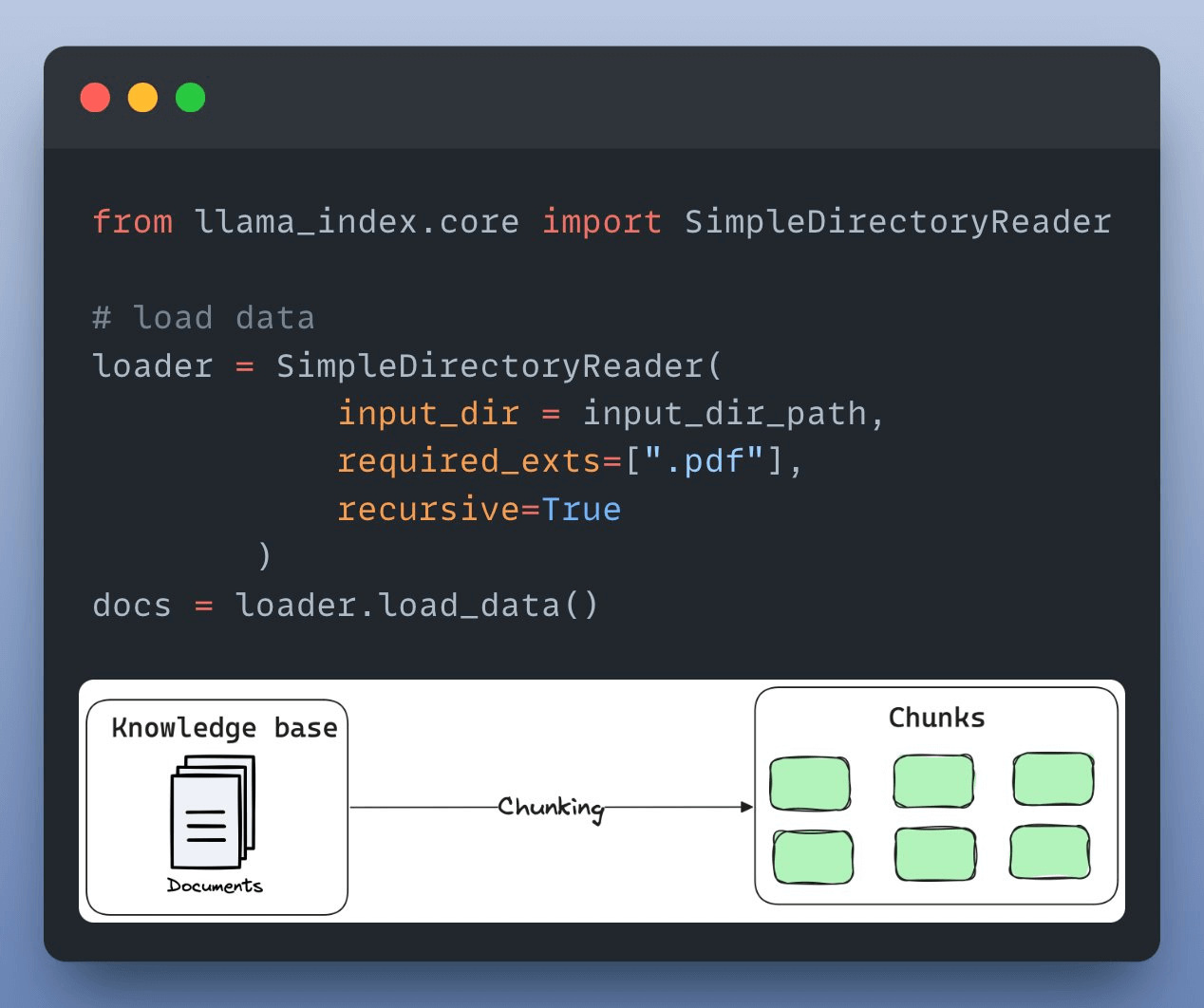

First, we load and parse the external knowledge base, which is a document stored in a directory, using LlamaIndex:

Next, we define an embedding model, which will create embeddings for the document chunks and user queries:

After creating the embeddings, the next task is to index and store them in a vector database. We’ll use a self-hosted Qdrant vector database for this as follows:

Next up, we define a custom prompt template to refine the response from LLM & include the context as well:

Almost done!

Finally, we set up a query engine that accepts a query string and uses it to fetch relevant context.

It then sends the context and the query as a prompt to the LLM to generate a final response.

This is implemented below:

Done!

There’s some streamlit part we have shown here, but after building it, we get this clear and neat interface:

Wasn’t that easy and straightforward?

The code to run this app is available in our GitHub repository here: Llama 3.2 RAG using ModernBERT.

👉 Over to you: What other demos would you like to see with ModernBERT?

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with such topics.

Here are some of them:

Learn sophisticated graph architectures and how to train them on graph data: A Crash Course on Graph Neural Networks – Part 1.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here: Bi-encoders and Cross-encoders for Sentence Pair Similarity Scoring – Part 1.

Learn techniques to run large models on small devices: Quantization: Optimize ML Models to Run Them on Tiny Hardware.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Learn how to identify causal relationships and answer business questions: A Crash Course on Causality – Part 1

Learn how to scale ML model training: A Practical Guide to Scaling ML Model Training.

Learn techniques to reliably roll out new models in production: 5 Must-Know Ways to Test ML Models in Production (Implementation Included)

Learn how to build privacy-first ML systems: Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

Learn how to compress ML models and reduce costs: Model Compression: A Critical Step Towards Efficient Machine Learning.

All these resources will help you cultivate key skills that businesses and companies care about the most.