We built a mini-ChatGPT app that runs locally on your computer.

It is powered by the open-source Llama3.2-vision model.

Before we walk through how we built it, the video above shows a demo.

You can chat with it just as you would with ChatGPT, including multimodal prompts (text plus images).

Here’s what we used:

Ollama for serving the open-source Llama3.2-vision model locally.

Chainlit, which is an open-source tool that lets you build production-ready conversational AI apps in minutes.

The code is available on GitHub here: Local ChatGPT.

Let's build it.

We'll assume you are familiar with multimodal prompting. If you are not, we covered it in Part 5 of our RAG crash course (open-access).

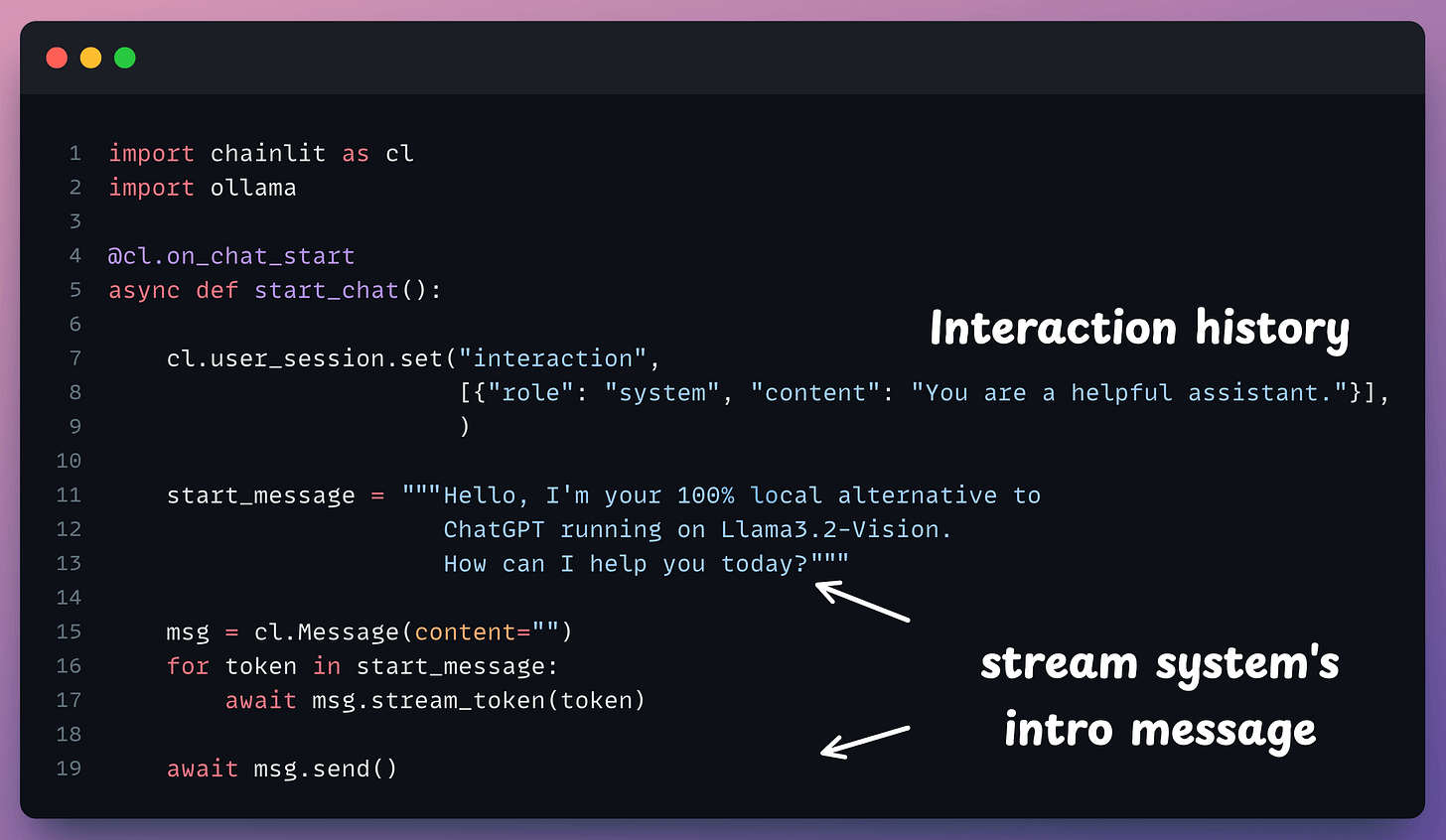

We begin with the import statements and define the start_chat method, which is invoked as soon as a new chat session starts:

We use the @cl.on_chat_start decorator in the above method.
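As a rough sketch of what such a session-start handler can look like (not necessarily the exact code in the repo): the session key "interaction", the greeting text, and the new_interaction helper are our assumptions. The Chainlit import is guarded only so the helper can also be run on its own.

```python
# Sketch only: prompt text, greeting, and helper name are assumptions.
SYSTEM_PROMPT = "You are a helpful assistant."
GREETING = "Hi! I'm a local assistant running on Llama3.2-vision. How can I help?"


def new_interaction() -> list:
    """Return a fresh interaction history seeded with the system prompt."""
    return [{"role": "system", "content": SYSTEM_PROMPT}]


try:
    import chainlit as cl
except ImportError:  # keeps the helper usable where Chainlit is not installed
    cl = None

if cl is not None:

    @cl.on_chat_start
    async def start_chat():
        # Each chat session gets its own history, kept in the user session.
        cl.user_session.set("interaction", new_interaction())
        # Greet the user as soon as the session opens.
        await cl.Message(content=GREETING).send()
```

Seeding the history with a system message here means every later model call in the session can simply pass the stored list along.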

Next, we define another method which will be invoked to generate a response from the LLM:

The user inputs a prompt.

We add it to the interaction history.

We generate a response from the LLM.

We store the LLM response in the interaction history.
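The four steps above can be sketched in plain Python. Here chat_turn and chat_fn are hypothetical names, and the model call is passed in as a function so the logic can be tried without a running model server.

```python
from typing import Callable, Optional

# chat_fn takes the full message history and returns the reply text.
ChatFn = Callable[[list], str]


def chat_turn(history: list, prompt: str, chat_fn: ChatFn,
              images: Optional[list] = None) -> str:
    """Run one user/assistant exchange and record both sides in history."""
    user_msg = {"role": "user", "content": prompt}
    if images:
        # Image inputs (e.g. file paths) travel with the user message.
        user_msg["images"] = list(images)
    history.append(user_msg)  # add the prompt to the interaction history

    reply = chat_fn(history)  # generate a response from the LLM

    # Store the LLM response so the next turn sees the whole conversation.
    history.append({"role": "assistant", "content": reply})
    return reply


if __name__ == "__main__":
    # Stand-in model so the sketch runs without a model server.
    def fake_llm(messages: list) -> str:
        return f"echo: {messages[-1]['content']}"

    history = [{"role": "system", "content": "You are a helpful assistant."}]
    print(chat_turn(history, "Hello", fake_llm))
    print(len(history))
```

With the ollama Python package, chat_fn could be a thin wrapper along the lines of lambda msgs: ollama.chat(model="llama3.2-vision", messages=msgs)["message"]["content"], assuming the model has already been pulled and the Ollama server is running.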

Finally, we define the main method:
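A minimal sketch of such an entry point, assuming uploaded files arrive as message elements with mime and path attributes. image_paths and generate_reply are hypothetical names, and generate_reply is only a placeholder for the response-generation method described earlier.

```python
def image_paths(elements) -> list:
    """Return file paths of attached elements whose MIME type is an image."""
    return [
        el.path
        for el in (elements or [])
        if (getattr(el, "mime", None) or "").startswith("image/")
    ]


async def generate_reply(prompt: str, images: list) -> str:
    # Hypothetical placeholder: a real app would call the model here.
    return f"Received {prompt!r} with {len(images)} image(s)."


try:
    import chainlit as cl
except ImportError:  # keeps the helpers usable where Chainlit is not installed
    cl = None

if cl is not None:

    @cl.on_message
    async def main(message: cl.Message):
        # Separate image attachments from the text of the prompt.
        images = image_paths(message.elements)
        reply = await generate_reply(message.content, images)
        # Send the reply back to the chat UI.
        await cl.Message(content=reply).send()
```

Filtering on the MIME type keeps non-image attachments from being passed to the vision model as images.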

Done!

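Run the app with the Chainlit CLI, for example chainlit run app.py -w, where app.py is an assumed file name for the script and -w reloads the app when the file changes.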

This launches the app shown at the top.

The code is available on GitHub here: Local ChatGPT.

We recently launched this repo, where we’ll publish the code for hands-on AI engineering newsletter issues like this one.

This repository will be dedicated to:

In-depth tutorials on LLMs and RAG.

Real-world AI agent applications.

Examples to implement, adapt, and scale in your projects.

Find it here: AI Engineering Hub (and do star it).

👉 Over to you: What other functionality would you like to see in the above app?

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several topics (with implementations) in the past that align with these goals.

Here are some of them:

Learn sophisticated graph architectures and how to train them on graph data: A Crash Course on Graph Neural Networks – Part 1.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here: Bi-encoders and Cross-encoders for Sentence Pair Similarity Scoring – Part 1.

Learn techniques to run large models on small devices: Quantization: Optimize ML Models to Run Them on Tiny Hardware.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Learn how to identify causal relationships and answer business questions: A Crash Course on Causality – Part 1.

Learn how to scale ML model training: A Practical Guide to Scaling ML Model Training.

Learn techniques to reliably roll out new models in production: 5 Must-Know Ways to Test ML Models in Production (Implementation Included).

Learn how to build privacy-first ML systems: Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

Learn how to compress ML models and reduce costs: Model Compression: A Critical Step Towards Efficient Machine Learning.

All these resources will help you cultivate the key skills that businesses care about the most.