Pytest for LLM Apps [100% open-source]!

DeepEval turns LLM evaluation into a two-line test suite, helping you identify the best models, prompts, and architecture for your AI workflow.

Works with any framework, including LlamaIndex, LangChain, CrewAI, and more.

100% open-source, runs locally.

GitHub repo → (don’t forget to star it)

Thanks to DeepEval for partnering today!

Building an MCP-powered Financial Analyst

We just put together another MCP demo: a financial analyst that connects to an MCP host such as Cursor or Claude and answers finance-related queries.

The video above depicts a quick demo of what we're building!

Tech stack:

CrewAI for multi-agent orchestration.

Ollama to serve the DeepSeek-R1 LLM locally.

Cursor as the MCP host.

System overview:

The user submits a query.

The MCP agent kicks off the financial analyst crew.

The Crew conducts research and creates an executable script.

The agent runs the script to generate an analysis plot.

You can find the code in this GitHub repo →

Let's build it!

Set Up the LLM

We will use DeepSeek-R1 as the LLM, served locally using Ollama.
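Before wiring the model into the crew, it can help to confirm that Ollama is actually serving it. Below is a minimal, standard-library sketch that builds and sends a request to Ollama's `/api/chat` endpoint. It assumes Ollama is running on its default port (11434) and that the model was pulled as `deepseek-r1:7b`; that tag is our assumption, and any DeepSeek-R1 size you have pulled works. Inside CrewAI, you would typically point its `LLM` class at the same server instead, e.g. `LLM(model="ollama/deepseek-r1:7b", base_url="http://localhost:11434")`.

```python
import json
import urllib.request

# Ollama's default local endpoint; adjust if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat request for a local Ollama server."""
    payload = {
        "model": model,  # assumed tag; use whatever `ollama list` shows on your machine
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON response instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the request and return the assistant's reply text (needs `ollama serve`)."""
    with urllib.request.urlopen(build_chat_request(prompt, model)) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

Calling `ask("What is a P/E ratio?")` requires a running Ollama server; `build_chat_request` on its own never touches the network.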

Let’s set up the Crew now

Query Parser Agent

This agent accepts a natural-language query and extracts structured output from it using Pydantic.

This guarantees clean and structured inputs for further processing!
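The idea is that the LLM's free-form answer is parsed into a fixed schema, and anything that doesn't fit is rejected before it reaches the other agents. In CrewAI, a task can be handed a Pydantic model through its `output_pydantic` parameter. The sketch below mimics that validation step with a standard-library dataclass so it runs without dependencies; the `StockQuery` fields (`symbol`, `timeframe`, `action`), the ticker pattern, and the allowed timeframes are hypothetical, not the project's actual schema.

```python
import json
import re
from dataclasses import dataclass

# Hypothetical allowed values, written in yfinance's period-string style.
ALLOWED_TIMEFRAMES = {"1mo", "3mo", "6mo", "1y", "5y"}
TICKER_PATTERN = re.compile(r"[A-Za-z][A-Za-z.\-]{0,9}")


@dataclass(frozen=True)
class StockQuery:
    symbol: str     # ticker, e.g. "AAPL"
    timeframe: str  # period string, e.g. "1y"
    action: str     # what the user wants, e.g. "plot"

    def __post_init__(self) -> None:
        # Roughly the checks a Pydantic model with validators would enforce.
        if not TICKER_PATTERN.fullmatch(self.symbol):
            raise ValueError(f"invalid ticker: {self.symbol!r}")
        if self.timeframe not in ALLOWED_TIMEFRAMES:
            raise ValueError(f"unsupported timeframe: {self.timeframe!r}")
        # Normalise the ticker; frozen dataclasses require object.__setattr__.
        object.__setattr__(self, "symbol", self.symbol.upper())


def parse_llm_output(raw: str) -> StockQuery:
    """Turn the LLM's JSON answer into a validated StockQuery (raises on bad input)."""
    data = json.loads(raw)
    return StockQuery(symbol=data["symbol"], timeframe=data["timeframe"], action=data["action"])
```

Because `json.JSONDecodeError` subclasses `ValueError`, malformed JSON and schema violations can both be handled by a single `except ValueError` branch.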

Code Writer Agent

This agent writes Python code to visualize stock data using the Pandas, Matplotlib, and yfinance (Yahoo Finance) libraries.
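To show the kind of script this agent is expected to produce, here is a sketch that fills a fixed template instead of asking an LLM. The template, the `render_script` helper, and the output filename are hypothetical; the generated script uses yfinance's `Ticker(...).history(period=...)` and standard Matplotlib calls, and `compile()` serves as a cheap check that the source at least parses.

```python
import textwrap

# Hypothetical template: the real Code Writer agent asks the LLM to write this code.
SCRIPT_TEMPLATE = textwrap.dedent(
    """\
    import matplotlib.pyplot as plt
    import yfinance as yf

    # Download price history for {symbol} over the requested period.
    history = yf.Ticker({symbol!r}).history(period={period!r})

    # Plot the closing price and save the figure to disk.
    ax = history["Close"].plot(title={title!r}, figsize=(10, 5))
    ax.set_xlabel("Date")
    ax.set_ylabel("Close price")
    plt.tight_layout()
    plt.savefig({outfile!r})
    """
)


def render_script(symbol: str, period: str = "1y", outfile: str = "plot.png") -> str:
    """Fill the template and return Python source for a stock-plot script."""
    source = SCRIPT_TEMPLATE.format(
        symbol=symbol,
        period=period,
        title=f"{symbol} closing price ({period})",
        outfile=outfile,
    )
    # Compiling (not running) catches syntax errors without importing yfinance.
    compile(source, "<generated>", "exec")
    return source
```

Only parsing happens here; actually running the generated script requires `yfinance`, `pandas`, and `matplotlib` to be installed.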

Code Executor Agent

This agent reviews and executes the generated Python code for stock data visualization.

It uses CrewAI's Code Interpreter tool to execute the code in a secure sandbox environment.
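CrewAI's tool handles isolation for you (it is designed to run code inside a Docker container). To make the "review, then execute" step concrete, here is a standard-library sketch, not CrewAI's implementation: it inspects the code with `ast` (syntax plus a hypothetical import allow-list) and then runs it in a separate Python process with a timeout. A subprocess is not a security sandbox; for untrusted LLM output, a container is the safer choice.

```python
import ast
import subprocess
import sys

# Hypothetical allow-list; tune it to what the generated scripts legitimately need.
ALLOWED_MODULES = {"pandas", "matplotlib", "yfinance", "datetime", "math"}


def review(code: str) -> list[str]:
    """Return problems found by static inspection (an empty list means OK)."""
    try:
        tree = ast.parse(code)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg} (line {exc.lineno})"]
    problems = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]  # relative imports have module=None
        else:
            continue
        for name in names:
            if name.split(".")[0] not in ALLOWED_MODULES:
                problems.append(f"disallowed import: {name}")
    return problems


def execute(code: str, timeout: float = 60.0) -> subprocess.CompletedProcess:
    """Run reviewed code in a separate Python process with a timeout.

    Process isolation only: this is not a security sandbox.
    """
    issues = review(code)
    if issues:
        raise ValueError("; ".join(issues))
    return subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=timeout
    )
```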

Set Up Crew and Kickoff

Once we have our agents and their tasks defined, we set up and kick off our financial analysis crew to get the result shown below!

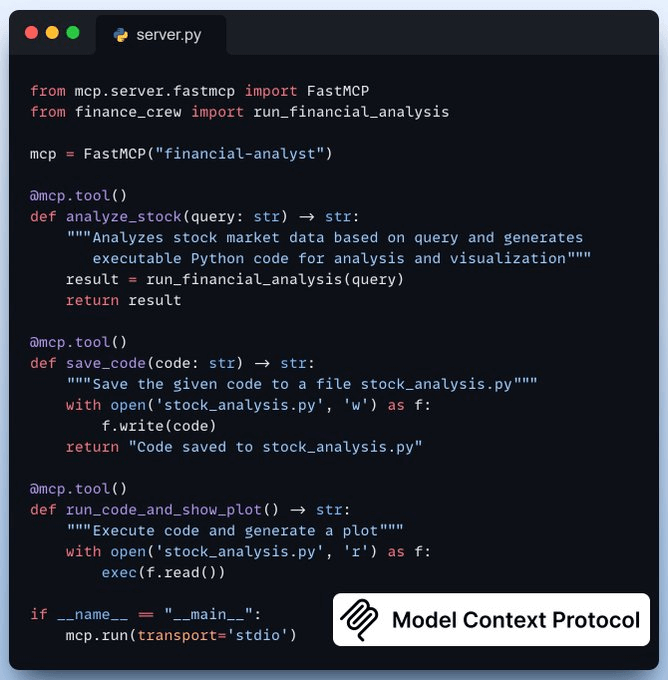
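With CrewAI, this step typically amounts to building `Crew(agents=[...], tasks=[...], process=Process.sequential)` and calling `crew.kickoff(inputs={...})`. The dependency-free sketch below mirrors what a sequential process does conceptually: each step receives the previous step's output as its context. The `Step` class, the `kickoff` function, and the lambda "agents" are hypothetical stand-ins for real LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[str], str]  # receives the previous step's output, returns its own


def kickoff(steps: list[Step], query: str) -> dict[str, str]:
    """Run steps in order, feeding each output forward as context (sequential process)."""
    outputs: dict[str, str] = {}
    context = query
    for step in steps:
        context = step.run(context)
        outputs[step.name] = context
    return outputs


# Stub "agents" standing in for LLM calls, purely for illustration.
steps = [
    Step("parse_query", lambda q: "TSLA|1y" if "tesla" in q.lower() else "UNKNOWN|1y"),
    Step("write_code", lambda spec: f"plot({spec.split('|')[0]!r}, period={spec.split('|')[1]!r})"),
    Step("execute_code", lambda code: f"executed: {code}"),
]

result = kickoff(steps, "Plot Tesla's stock price over the last year")
print(result["execute_code"])  # prints: executed: plot('TSLA', period='1y')
```

Keeping every intermediate output (not just the last one) makes it easy to inspect where a run went wrong, which is also what CrewAI's per-task outputs are useful for.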

Create MCP Server

Now, we encapsulate our financial analyst within an MCP tool and add two more tools to enhance the user experience.

save_code -> Saves the generated code to a local directory.

run_code_and_show_plot -> Executes the code and generates a plot.
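With the official MCP Python SDK, tools are usually registered on a `FastMCP` server using the `@mcp.tool()` decorator, and the server is started with `mcp.run(transport="stdio")`. The sketch below keeps only the tool logic so it runs with the standard library alone: `TOOLS` and the `tool` decorator are a hypothetical stand-in for the SDK's registry, and the default directory, filename, and timeout are assumptions. The script being run is responsible for saving or showing its own figure.

```python
import subprocess
import sys
from pathlib import Path
from typing import Callable

# Hypothetical registry standing in for an MCP server's tool list.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function under its own name, similar in spirit to @mcp.tool()."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def save_code(code: str, directory: str = ".", filename: str = "stock_analysis.py") -> str:
    """Write the generated code to disk and return the file path."""
    path = Path(directory) / filename
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(code, encoding="utf-8")
    return str(path)


@tool
def run_code_and_show_plot(path: str, timeout: float = 120.0) -> str:
    """Execute a saved script in a separate process and report the outcome."""
    result = subprocess.run(
        [sys.executable, path], capture_output=True, text=True, timeout=timeout
    )
    if result.returncode != 0:
        return f"error:\n{result.stderr}"
    return f"ok:\n{result.stdout}"
```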

Integrate MCP server with Cursor

Go to: File → Preferences → Cursor Settings → MCP → Add new global MCP server.

In the JSON file, add what’s shown below 👇
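Cursor expects MCP servers under a top-level `mcpServers` object, where each entry gives the `command` to launch and its `args`. As a hypothetical example (the server name, interpreter, and path are placeholders, not this project's actual values), the snippet below prints an entry of that shape that you could adapt.

```python
import json


def cursor_mcp_config(name: str, command: str, args: list[str]) -> str:
    """Return a Cursor-style MCP config with a single server entry, as JSON text."""
    config = {"mcpServers": {name: {"command": command, "args": args}}}
    return json.dumps(config, indent=2)


# Placeholder values: swap in your own server name, interpreter, and absolute path.
print(cursor_mcp_config("financial-analyst", "python", ["/absolute/path/to/server.py"]))
```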

Done! Our financial analyst MCP server is live and connected to Cursor!

You can chat with it about stock data, ask it to create plots, etc. The video at the top gives you a walk-through.

You can find the code in this GitHub repo →

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several other topics (with implementations) that align with these goals.

Here are some of them:

Learn how to build agentic systems in an ongoing 13-part crash course.

Learn how to build, evaluate, and scale real-world RAG apps in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to use conformal prediction to generate prediction intervals or sets with strong statistical guarantees, increasing trust in your models.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to implement and scale ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you build the skills that businesses care about most.