Recently, Alibaba released Qwen 3, the latest generation of LLMs in the Qwen series with dense and mixture-of-experts (MoE) models.

Here’s a tutorial on how you can fine-tune it using Unsloth.

The video above depicts inference using the HuggingFace transformers library on our fine-tuned model with and without thinking mode.

The code is available in this Studio: Fine-tuning Qwen 3 locally. You can run it without any installations by reproducing our environment below:

Let’s begin!

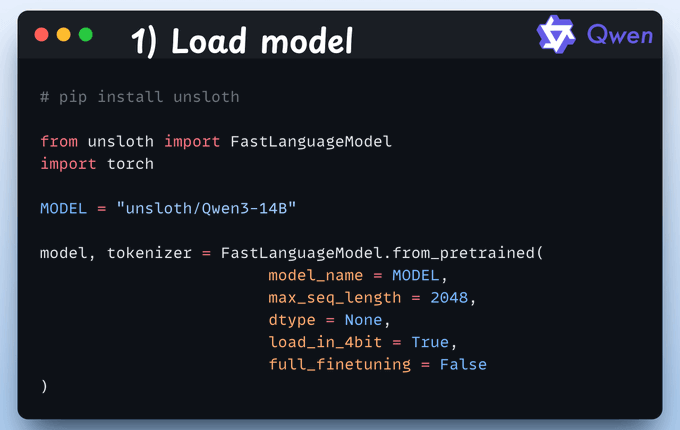

1) Load the model

We start by loading the Qwen 3 (14B variant) model and its tokenizer using Unsloth.

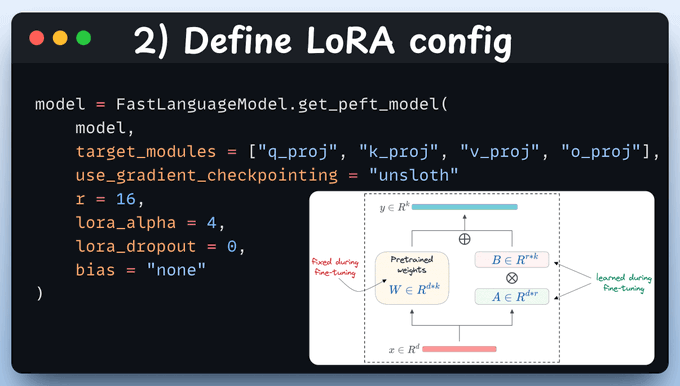

2) Define LoRA config

We'll use LoRA to avoid fine-tuning the entire model.

To do this, we use Unsloth's PEFT by specifying:

The model

LoRA low-rank (r)

Layers for fine-tuning, etc.

3-4) Load datasets

Next, we load a reasoning and non-reasoning dataset, over which we'll fine-tune our Qwen 3 model.

Check a sample from each of these dataset:

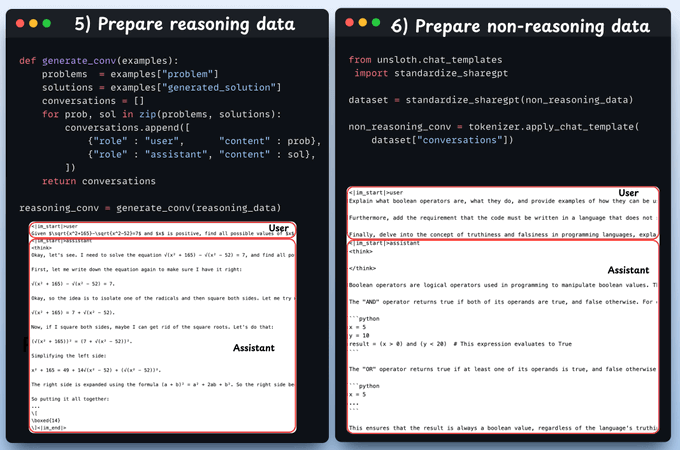

5-6) Prepare dataset

Before fine-tuning, we must prepare the dataset in a conversational format:

From the reasoning data, we select the solution and solution keys.

For non-reasoning data, we use a standardization method, which converts the data to the desired format.

Check the code below and a sample from each of these datasets:

Note: Use clear prompt-response pairs. Format the fine-tuning data so that each problem is presented in a consistent, model-friendly way. Typically, this means turning each math problem into an instruction or question prompt and providing a well-structured solution or answer as the completion. Consistency in formatting helps the model learn how to transition from question to answer.

Incorporate step-by-step solutions (Chain-of-Thought).

Mark the final answer clearly.

Ensure compatibility with evaluation benchmarks.

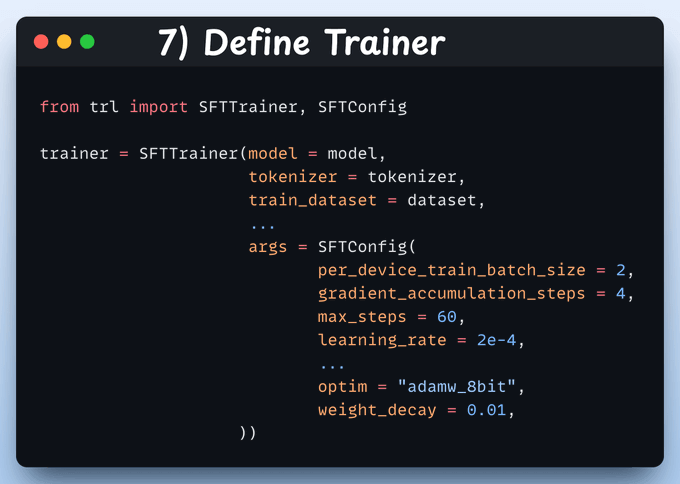

7) Define Trainer

Here, we create a Trainer object by specifying the training config, like learning rate, model, tokenizer, and more.

8) Train

With that done, we initiate training. The loss is decreasing with training, which means the model is being trained fine.

Check this code and output👇

9) Inference

Below, we run the model via the HuggingFace transformers library in a thinking and non-thinking mode. Thinking requires us to set the enable_thinking parameter to True.

With that, we have fine-tuned Qwen 3 completely locally!

The code is available in this Studio: Fine-tuning Qwen3 locally. You can run it without any installations by reproducing our environment below:

Thanks for reading!